mirror of

https://github.com/openfaas/faas.git

synced 2025-06-09 08:46:48 +00:00

Remove deprecated guides

The guides are either moved due to being out of date, or being surpassed by the workshop or main documentation site. Signed-off-by: Alex Ellis (OpenFaaS Ltd) <alexellis2@gmail.com>

This commit is contained in:

parent

5a4cc68a98

commit

25a9f4aab7

@ -1,4 +0,0 @@

|

||||

## OpenFaaS Documentation

|

||||

|

||||

The OpenFaaS documentation site is available at [docs.openfaas.com](https://docs.openfaas.com/)

|

||||

|

||||

@ -1,88 +0,0 @@

|

||||

OpenFaaS guides & documentation

|

||||

================

|

||||

|

||||

## This page is deprecated.

|

||||

|

||||

Please see the [OpenFaaS docs site](https://docs.openfaas.com/).

|

||||

|

||||

## For archival purposes

|

||||

|

||||

This page is a collection of our key blog posts, tutorials and guides while we prepare a [dedicated site](https://github.com/openfaas/faas/issues/253) for documentation. For other queries please get in touch for a Slack invite or ping [@openfaas](https://twitter.com/openfaas) on Twitter.

|

||||

|

||||

Suggestions for new topics are welcome. Please also check the [Issue tracker](https://github.com/openfaas/faas/issues).

|

||||

|

||||

## Deployment guides (start here)

|

||||

|

||||

### A foreword on security

|

||||

|

||||

These instructions are for a development environment. If you plan to expose OpenFaaS on the public Internet, you need to enable basic authentication with a proxy such as Kong or Traefik at a minimum. TLS is also highly recommended and freely available with LetsEncrypt.org. See the [Kong guide](https://github.com/openfaas/faas/blob/master/guide/kong_integration.md) and the [Traefik guide](https://github.com/openfaas/faas/blob/master/guide/traefik_integration.md).

|

||||

|

||||

> Note: We are also looking to [automate authentication "out the box"](https://github.com/openfaas/faas/issues/349) to cover edge cases.

|

||||

|

||||

* [Kubernetes deployment](deployment_k8s.md)

|

||||

|

||||

* [Docker Swarm deployment](deployment_swarm.md)

|

||||

|

||||

* [DigitalOcean deployment (with Swarm)](deployment_digitalocean.md)

|

||||

|

||||

## Intermediate

|

||||

|

||||

* [Workflows / Chaining functions](chaining_functions.md)

|

||||

|

||||

* [Interacting with other containers/services](interactions.md)

|

||||

|

||||

* [Troubleshooting](troubleshooting.md)

|

||||

|

||||

* [Asynchronous functions with NATS Streaming](asynchronous.md)

|

||||

|

||||

* [Submit a function to the OpenFaaS Function Store](https://github.com/openfaas/store)

|

||||

|

||||

* [Secrets with OpenFaaS and Docker Swarm](https://github.com/openfaas/faas/blob/master/guide/secure_secret_management.md)

|

||||

|

||||

* [Hardening OpenFaaS with Kong & TLS](kong_integration.md)

|

||||

|

||||

* [Reference documentation for Function Watchdog](../watchdog/)

|

||||

|

||||

* [An Introduction to Serverless DevOps with OpenFaaS](https://hackernoon.com/an-introduction-to-serverless-devops-with-openfaas-b978ab0eb2b)

|

||||

|

||||

* WIP [Debugging Functions](https://github.com/openfaas/faas/issues/223)

|

||||

|

||||

## Blog posts and tutorials

|

||||

|

||||

### Hands-on with Node.js / Go / Python

|

||||

|

||||

* [Morning coffee with the OpenFaaS CLI](https://blog.alexellis.io/quickstart-openfaas-cli/)

|

||||

|

||||

* [Build a Serverless Golang Function with OpenFaaS](https://blog.alexellis.io/serverless-golang-with-openfaas/)

|

||||

|

||||

* [Your first serverless Python function with OpenFaaS](https://blog.alexellis.io/first-faas-python-function/)

|

||||

|

||||

### Project background, design decisions and architecture:

|

||||

|

||||

* [Introducing Functions as a Service (OpenFaaS)](https://blog.alexellis.io/introducing-functions-as-a-service/)

|

||||

|

||||

* [OpenFaaS presents to CNCF Serverless workgroup](https://blog.alexellis.io/openfaas-cncf-workgroup/)

|

||||

|

||||

* [An Introduction to Serverless DevOps with OpenFaaS](https://hackernoon.com/an-introduction-to-serverless-devops-with-openfaas-b978ab0eb2b)

|

||||

|

||||

### Hands-on with containers as functions

|

||||

|

||||

* [Serverless sorcery with ImageMagick](https://blog.alexellis.io/serverless-imagemagick/)

|

||||

|

||||

### High-throughput

|

||||

|

||||

* [Next-generation Watchdog (of-watchdog)](http://docs.openfaas.com/architecture/watchdog/#of-watchdog)

|

||||

|

||||

### Raspberry Pi & ARM

|

||||

|

||||

[Your Serverless Raspberry Pi cluster with Docker](https://blog.alexellis.io/your-serverless-raspberry-pi-cluster/)

|

||||

|

||||

## Extend OpenFaaS

|

||||

|

||||

* [Build a third-party provider](backends.md)

|

||||

|

||||

### Deprecated

|

||||

|

||||

Afterburn is replaced by the of-watchdog listed above.

|

||||

|

||||

* [OpenFaaS accelerates serverless Java with AfterBurn](https://blog.alexellis.io/openfaas-serverless-acceleration/)

|

||||

@ -1,77 +0,0 @@

|

||||

# Guide on Asynchronous processing

|

||||

|

||||

Asynchronous function calls can be queued up using the following route:

|

||||

|

||||

```

|

||||

$ curl --data "message" http://gateway:8080/async-function/{function_name}

|

||||

```

|

||||

|

||||

Summary of modes for calling functions via API Gateway:

|

||||

|

||||

| Mode         | Method | URL                                                | Body | Headers | Query string |
| ------------ | ------ | -------------------------------------------------- | ---- | ------- | ------------ |
| Synchronous  | POST   | http://gateway:8080/function/{function_name}       | Yes  | Yes     | Yes          |
| Synchronous  | GET    | http://gateway:8080/function/{function_name}       | Yes  | Yes     | Yes          |
| Asynchronous | POST   | http://gateway:8080/async-function/{function_name} | Yes  | Yes     | Yes [#369](https://github.com/openfaas/faas/issues/369) |
| Asynchronous | GET    | Not supported                                      | -    | -       | -            |

|

||||

|

||||

> Note: This work was originally carried out under [PR #131](https://github.com/openfaas/faas/pull/131).

|

||||

|

||||

*Logical flow for synchronous functions:*


## Why use Asynchronous processing?

|

||||

|

||||

* Enable longer time-outs

|

||||

|

||||

* Process work whenever resources are available rather than immediately

|

||||

|

||||

* Consume a large batch of work within a few seconds and let it process at its own pace

|

||||

|

||||

## How does async work?

|

||||

|

||||

Here is a conceptual diagram

|

||||

|

||||

<img width="1440" alt="screen shot 2017-10-26 at 15 55 19" src="https://user-images.githubusercontent.com/6358735/32060206-047eb75c-ba66-11e7-94d3-1343ea1811db.png">

|

||||

|

||||

You can also use asynchronous calls with a callback URL

|

||||

|

||||

<img width="1440" alt="screen shot 2017-10-26 at 15 55 06" src="https://user-images.githubusercontent.com/6358735/32060205-04545692-ba66-11e7-9e6d-b800a07b9bf5.png">

|

||||

|

||||

The reference implementation for asynchronous processing uses NATS Streaming, but you are free to extend OpenFaaS and write your own [queue-worker](https://github.com/open-faas/nats-queue-worker).

|

||||

|

||||

> The asynchronous stack is now built-in by default. Previously it was an optional configuration.

|

||||

|

||||

## Call a function

|

||||

|

||||

Functions do not need to be modified to work asynchronously, just use this alternate route:

|

||||

|

||||

```

|

||||

http://gateway:8080/async-function/{function_name}

|

||||

```

|

||||

|

||||

If you want the function to call another function or a different endpoint when it is finished then pass the `X-Callback-Url` header. This is optional.

|

||||

|

||||

```

|

||||

$ curl http://gateway:8080/async-function/{function_name} --data-binary @sample.json -H "X-Callback-Url: http://gateway:8080/function/send2slack"

|

||||

```
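The same request can be prepared from Python. This is a minimal sketch using only the standard library: `build_async_request` is a hypothetical helper, `figlet` is a placeholder function name, and the request is only constructed here, not sent.

```python
import urllib.request


def build_async_request(gateway, function_name, body, callback_url=None):
    """Prepare (but do not send) a POST to the gateway's asynchronous route."""
    url = "{}/async-function/{}".format(gateway.rstrip("/"), function_name)
    headers = {}
    if callback_url:
        # Optional: the result is delivered to this URL after the function has run.
        headers["X-Callback-Url"] = callback_url
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


req = build_async_request(
    "http://gateway:8080",
    "figlet",  # placeholder function name, not taken from the guide
    b'{"text": "hello"}',
    callback_url="http://gateway:8080/function/send2slack",
)
# urllib.request.urlopen(req) would submit the work to the queue.
```

Keeping construction separate from sending makes it easy to inspect the URL and headers before anything reaches the gateway.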

|

||||

|

||||

You can also use the following site to setup a public endpoint for testing HTTP callbacks: [requestb.in](https://requestb.in)

|

||||

|

||||

## Extend function timeouts

|

||||

|

||||

Functions have three timeouts, configurable through environment variables and expressed in seconds:

|

||||

|

||||

HTTP:

|

||||

|

||||

* read_timeout

|

||||

* write_timeout

|

||||

|

||||

Hard timeout:

|

||||

|

||||

* exec_timeout

|

||||

|

||||

To make use of these just add them to your Dockerfile when needed as ENV variables.
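As an illustration, the sketch below renders the `ENV` lines you might paste into a function's Dockerfile. The helper name `dockerfile_env_lines` and the values are assumptions chosen for the example, not recommended settings.

```python
def dockerfile_env_lines(read_timeout, write_timeout, exec_timeout):
    """Render Dockerfile ENV instructions for the three timeouts (in seconds)."""
    settings = {
        "read_timeout": read_timeout,
        "write_timeout": write_timeout,
        "exec_timeout": exec_timeout,
    }
    return ["ENV {}={}".format(name, int(seconds)) for name, seconds in settings.items()]


# Example values only: two minutes of execution with slightly longer HTTP timeouts.
lines = dockerfile_env_lines(read_timeout=130, write_timeout=130, exec_timeout=120)
print("\n".join(lines))
```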

|

||||

|

||||

> [Function watchdog reference](https://github.com/openfaas/faas/tree/master/watchdog)

|

||||

@ -1,107 +0,0 @@

|

||||

## OpenFaaS backends guide

|

||||

|

||||

OpenFaaS is a framework for building serverless functions with containers and running them at scale.

|

||||

|

||||

> Bring Serverless OpenFaaS functions to your favourite container platform.

|

||||

|

||||

We support two orchestration platforms or "backends" within the OpenFaaS project.

|

||||

|

||||

* Docker Swarm

|

||||

* Kubernetes

|

||||

|

||||

There are several other backends available with community-support as documented in the [community guide](https://github.com/openfaas/faas/blob/master/community.md#openfaas-providers).

|

||||

|

||||

The Docker Swarm support is built-into the faas repo, but the Kubernetes support is provided by a microservice in the [faas-netes](https://github.com/openfaas/faas-netes) repo.

|

||||

|

||||

If you're thinking of writing a new back-end we'd love to hear about it and help you, so please get in touch with alex@openfaas.com. Existing implementations (like OpenFaaS) are written in Golang and this provides a level of consistency across the projects.

|

||||

|

||||

### I need a backend for X

|

||||

|

||||

This project is focusing on Docker Swarm and Kubernetes, but we're open to support from third parties and vendors for other backends:

|

||||

|

||||

Here are some ideas:

|

||||

|

||||

* Nomad

|

||||

* Marathon Mesos

|

||||

* AWS ECS

|

||||

* Hyper.sh

|

||||

|

||||

If you would like to write your own back-end for `X` then you can write your own microservice that conforms to the [Swagger API](https://github.com/openfaas/faas/tree/master/api-docs) here.

|

||||

|

||||

### How does my back-end work?

|

||||

|

||||

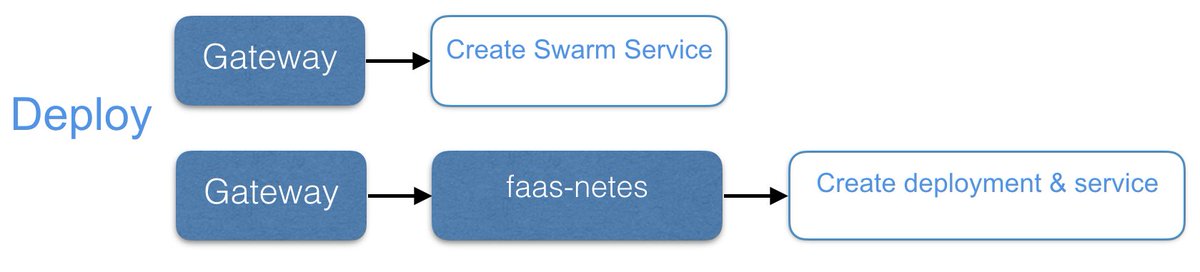

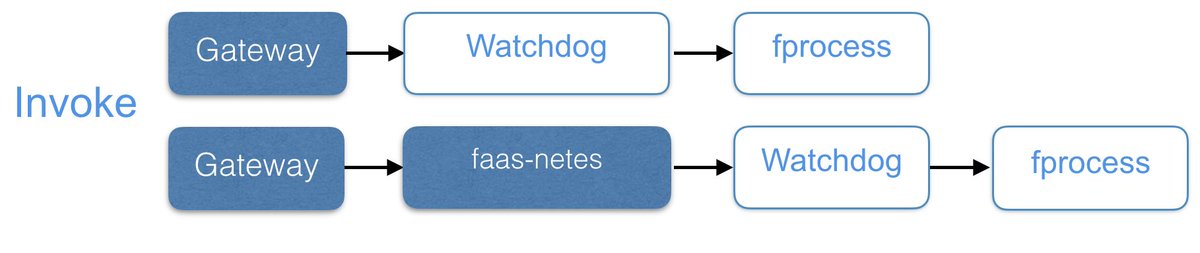

In order to support a new back end you will write a new "external_provider" and configure this on the API Gateway. The API Gateway will then proxy any requests to your new microservice. The first "external_provider" was the Kubernetes implementation [faas-netes](https://github.com/openfaas/faas-netes):

|

||||

|

||||

|

||||

|

||||

Deploy a function - through the built-in Swarm support or through faas-netes

|

||||

|

||||

|

||||

|

||||

Invoke your function - through the built-in Swarm or via faas-netes

|

||||

|

||||

|

||||

|

||||

Find out more about the [watchdog here](https://github.com/openfaas/faas/tree/master/watchdog).

|

||||

|

||||

### Automatically compatible OpenFaaS

|

||||

|

||||

The following are fully compatible with any additional back-ends:

|

||||

|

||||

* API Gateway

|

||||

* Prometheus metrics (tracked through API Gateway)

|

||||

* The built-in UI portal (hosted on the API Gateway)

|

||||

* The Function Watchdog and any existing OpenFaaS functions

|

||||

* The [CLI](https://github.com/openfaas/faas-cli)

|

||||

* Asynchronous function invocation

|

||||

|

||||

Dependent on back-end:

|

||||

|

||||

* Secrets or environmental variable support

|

||||

* Windows Containers function runtimes (i.e. via W2016 and Docker)

|

||||

* Scaling - dependent on underlying API (available in Docker & Kubernetes)

|

||||

|

||||

#### Backend endpoints:

|

||||

|

||||

* List / Create / Delete a function

|

||||

|

||||

`/system/functions`

|

||||

|

||||

Method(s): GET / POST / DELETE

|

||||

|

||||

* Get a specific function

|

||||

|

||||

`/system/function/{name:[-a-zA-Z_0-9]+}`

|

||||

|

||||

Method(s): GET

|

||||

|

||||

* Scale a specific function:

|

||||

|

||||

`/system/scale-function/{name:[-a-zA-Z_0-9]+}`

|

||||

|

||||

Method(s): POST

|

||||

|

||||

* Invoke a specific function

|

||||

|

||||

`/function/{name:[-a-zA-Z_0-9]+}`

|

||||

|

||||

Method(s): POST
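The endpoint list above can be read as a small routing table. The following sketch is not taken from any existing provider; `ROUTES` and `match_route` are hypothetical names, and the labels are only for illustration. It shows how the path patterns and allowed methods fit together:

```python
import re

NAME = r"[-a-zA-Z_0-9]+"

# (compiled path pattern, allowed methods, label), mirroring the endpoint list.
ROUTES = [
    (re.compile(r"^/system/functions$"), {"GET", "POST", "DELETE"}, "list/create/delete"),
    (re.compile(r"^/system/function/(?P<name>" + NAME + r")$"), {"GET"}, "get"),
    (re.compile(r"^/system/scale-function/(?P<name>" + NAME + r")$"), {"POST"}, "scale"),
    (re.compile(r"^/function/(?P<name>" + NAME + r")$"), {"POST"}, "invoke"),
]


def match_route(method, path):
    """Return (label, function_name) for a supported request, or None."""
    for pattern, methods, label in ROUTES:
        found = pattern.match(path)
        if found and method in methods:
            return label, found.groupdict().get("name")
    return None


print(match_route("POST", "/system/scale-function/figlet"))
print(match_route("GET", "/function/figlet"))
```

A real back-end would attach a handler to each entry; the Swagger API remains the authoritative description of request and response bodies.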

|

||||

|

||||

|

||||

### Examples / documentation

|

||||

|

||||

* See the [Swagger API](https://github.com/openfaas/faas/tree/master/api-docs) as a starting point.

|

||||

|

||||

#### faas-netes (Kubernetes)

|

||||

|

||||

The Kubernetes integration was written by Alex Ellis and is officially supported by the project.

|

||||

|

||||

* See the [FaaS-netes handlers](https://github.com/openfaas/faas-netes/tree/master/handlers) for examples of how to implement each endpoint.

|

||||

|

||||

#### Rancher / Cattle (community)

|

||||

|

||||

This work is by Ken Fukuyama from Japan.

|

||||

|

||||

* [Blog post](https://medium.com/@kenfdev/openfaas-on-rancher-684650cc078e)

|

||||

|

||||

* [faas-rancher](https://github.com/kenfdev/faas-rancher) implementation in Golang

|

||||

@ -1,93 +0,0 @@

|

||||

# Chaining OpenFaaS functions

|

||||

|

||||

We will discuss client-side piping, server-side piping and the "function director" pattern.

|

||||

|

||||

## Client-side piping

|

||||

|

||||

The easiest way to chain functions is to do it on the client-side via your application code or a `curl`.

|

||||

|

||||

Here is an example:

|

||||

|

||||

We pipe a string or file into the markdown function, then pipe it into a Slack function

|

||||

|

||||

```

|

||||

$ curl -d "# test" localhost:8080/function/markdown | \
  curl localhost:8080/function/slack --data-binary -

|

||||

```

|

||||

|

||||

You could also do this via code, or through the `faas-cli`:

|

||||

|

||||

```

|

||||

$ echo "test" | faas-cli invoke markdown | \
  faas-cli invoke slack

|

||||

```
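Doing the same thing "via code" could look like the sketch below. It assumes a gateway on `localhost:8080`, as in the commands above; `invoke_via_gateway` and `pipe` are hypothetical helper names, and the live call is left commented out so the snippet runs without a cluster.

```python
import urllib.request


def invoke_via_gateway(name, payload, gateway="http://localhost:8080"):
    """POST `payload` (bytes) to a function through the gateway and return the body."""
    url = "{}/function/{}".format(gateway, name)
    request = urllib.request.Request(url, data=payload, method="POST")
    with urllib.request.urlopen(request) as response:
        return response.read()


def pipe(function_names, payload, invoke=invoke_via_gateway):
    """Feed `payload` through each function in order, like a shell pipeline."""
    for name in function_names:
        payload = invoke(name, payload)
    return payload


# Against a live gateway: pipe(["markdown", "slack"], b"# test")
```

Passing `invoke` as a parameter lets you swap in a stub when testing the chain locally.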

|

||||

|

||||

## Server-side access via gateway

|

||||

|

||||

On the server side you can access any other function by calling it on the gateway over HTTP.

|

||||

|

||||

### Function A calls B

|

||||

|

||||

Let's say we have two functions:

|

||||

* geolocatecity - gives a city name for a lat/lon combo in JSON format

|

||||

* findiss - finds the location of the International Space Station then pretty-prints the city name by using the `geolocatecity` function

|

||||

|

||||

findiss Python 2.7 handler:

|

||||

|

||||

```

|

||||

import requests


def get_space_station_location():
    # Hard-coded coordinates stand in for a real ISS position lookup.
    return {"lat": 0.51112, "lon": -0.1234}


def handler(st):
    location = get_space_station_location()
    # Call the geolocatecity function through the gateway.
    r = requests.post("http://gateway:8080/function/geolocatecity", location)

    print("The ISS is over the following city: " + r.content)

|

||||

```

|

||||

|

||||

|

||||

### Function Director pattern

|

||||

|

||||

In the Function Director pattern we create a "wrapper function" which can either pipe the result of function call A into function call B, or compose the results of A and B before returning a result. This approach saves on bandwidth and latency vs. client-side piping and means you can version both your connector and the functions involved.

|

||||

|

||||

Take our previous example:

|

||||

|

||||

```

|

||||

$ curl -d "# test" localhost:8080/function/markdown | \
  curl localhost:8080/function/slack --data-binary -

|

||||

```

|

||||

|

||||

markdown2slack Python 2.7 handler:

|

||||

|

||||

```

|

||||

import requests


def handler(req):
    # Render the Markdown first, then forward the resulting HTML to Slack.
    markdown = requests.post("http://gateway:8080/function/markdown", req)
    slack_result = requests.post("http://gateway:8080/function/slack", markdown.content)

    print("Slack result: " + str(slack_result.status_code))

|

||||

```
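The handler above pipes A into B. The other variant of the pattern, composing the results of two calls, might be sketched as follows. Everything here is illustrative: `director`, `call` and the `wordcount` function are assumptions, and `fake_call` stands in for the gateway so the example runs on its own.

```python
import json


def director(req, call):
    """Compose the results of two function calls into a single JSON reply.

    `call(name, body)` is an assumed helper that invokes a function through
    the gateway and returns its response body as text.
    """
    html = call("markdown", req)
    words = call("wordcount", req)  # hypothetical second function
    return json.dumps({"html": html, "words": words.strip()}, sort_keys=True)


def fake_call(name, body):
    """Stand-in for the gateway so the sketch runs without a cluster."""
    canned = {"markdown": "<h1>test</h1>", "wordcount": "2\n"}
    return canned[name]


print(director("# test", fake_call))
```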

|

||||

|

||||

Practical example:

|

||||

|

||||

GitHub sends a "star" event to tweetfanclub function, tweetfanclub uses get-avatar to download the user's profile picture - stores that in an S3 bucket, then invokes tweetstargazer which tweets the image. A polaroid effect is added by a "polaroid" function.

|

||||

|

||||

This example uses a mix of regular binaries such as ImageMagick and Python handlers generated with the FaaS-CLI.

|

||||

|

||||

* [GitHub to Twitter Fanclub](https://github.com/alexellis/faas-twitter-fanclub/blob/master/README.md)

|

||||

|

||||

## Asynchronous call-backs

|

||||

|

||||

If you invoke a function asynchronously you have two options for getting the result back:

|

||||

|

||||

* Update the function

|

||||

|

||||

You can update your code to call another function / store state in another service

|

||||

|

||||

* X-Callback-Url

|

||||

|

||||

If you set a header for `X-Callback-Url` then that will be invoked after the function has run, [read more](https://github.com/openfaas/faas/blob/1aa6270fcc274cc36d90e0a9e4caa3eb71912ae0/guide/asynchronous.md#call-a-function)

|

||||

@ -1,161 +0,0 @@

|

||||

# Deployment guide for DigitalOcean

|

||||

|

||||

In this guide we will be using the `docker-machine` tool to provision a number of Docker Swarm nodes then we'll connect them together and deploy OpenFaaS. Before you get started - sign up to [Digital Ocean here to get free credits](https://m.do.co/c/8d4e75e9886f). Once you've signed up come back to the tutorial.

|

||||

|

||||

### A foreword on security

|

||||

|

||||

These instructions are for a development environment. If you plan to expose OpenFaaS on the public Internet, you need to enable basic authentication with a proxy such as Kong or Traefik at a minimum. TLS is also highly recommended and freely available with LetsEncrypt.org. See the [Kong guide](https://github.com/openfaas/faas/blob/master/guide/kong_integration.md) and the [Traefik guide](https://github.com/openfaas/faas/blob/master/guide/traefik_integration.md).

|

||||

|

||||

## Create DigitalOcean API Access Token

|

||||

|

||||

Follow the [DigitalOcean instructions here](https://www.digitalocean.com/community/tutorials/how-to-use-the-digitalocean-api-v2#how-to-generate-a-personal-access-token) to create a Personal Access Token with **Read** and **Write** permissions, give the token a descriptive name for example `openfaas-getting-started`.

|

||||

|

||||

Now set an environment variable with the new token value.

|

||||

|

||||

```

|

||||

$ export DOTOKEN=738cb0cd2jfhu84c33hu...

|

||||

```

|

||||

|

||||

> If you want to make this permanent, you can insert the value into your `~/.bash_profile` file.

|

||||

|

||||

## Install Docker Machine

|

||||

|

||||

Type in `docker-machine` to see if you already have the tool installed; it is normally bundled with Docker for Mac/Windows. If not, you can download [Docker Machine here](https://docs.docker.com/machine/install-machine/).

|

||||

|

||||

## Create Docker Nodes

|

||||

|

||||

Use Docker Machine to create Docker hosts or nodes. On Digital Ocean your hosts or VMs (Virtual Machines) are called *Droplets* and will run a full version of Linux. Note: you'll be able to connect to any of your droplets with `ssh` later on.

|

||||

|

||||

The example below creates 3 Droplets in the NYC3 region. If you want to deploy only one Droplet, change `1 2 3` to `1`.

|

||||

|

||||

This process will take a few minutes as Droplets are created and Docker installed.

|

||||

```

|

||||

for i in 1 2 3; do
    docker-machine create \
        --driver digitalocean \
        --digitalocean-image ubuntu-17-10-x64 \
        --digitalocean-tags openfaas-getting-started \
        --digitalocean-region=nyc3 \
        --digitalocean-access-token $DOTOKEN \
        node-$i;
done

|

||||

```
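If you would rather drive provisioning from a script, the loop above could be expressed as the sketch below. `create_command` is a hypothetical helper; the flags and default values are the ones used in the loop, and nothing is executed unless you pass the lists to `subprocess.run` yourself.

```python
import os


def create_command(name, token, region="nyc3", image="ubuntu-17-10-x64",
                   tag="openfaas-getting-started"):
    """Return the docker-machine argument list for one Droplet."""
    return [
        "docker-machine", "create",
        "--driver", "digitalocean",
        "--digitalocean-image", image,
        "--digitalocean-tags", tag,
        "--digitalocean-region", region,
        "--digitalocean-access-token", token,
        name,
    ]


# Build the commands for three nodes, reading the token from the environment.
token = os.environ.get("DOTOKEN", "")
commands = [create_command("node-{}".format(i), token) for i in (1, 2, 3)]
# To provision for real: for cmd in commands: subprocess.run(cmd, check=True)
```

Passing arguments as a list avoids shell quoting problems with the access token.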

|

||||

|

||||

List the newly created Docker nodes.

|

||||

|

||||

```

|

||||

$ docker-machine ls

NAME     ACTIVE   DRIVER         STATE     URL                          SWARM   DOCKER        ERRORS
node-1   -        digitalocean   Running   tcp://104.131.69.233:2376            v17.07.0-ce
node-2   -        digitalocean   Running   tcp://104.131.115.146:2376           v17.07.0-ce
node-3   -        digitalocean   Running   tcp://159.203.168.121:2376           v17.07.0-ce

|

||||

```

|

||||

|

||||

Refer to the [documentation](https://docs.docker.com/machine/drivers/digital-ocean/) for more detailed information on the DigitalOcean options for docker-machine.

|

||||

|

||||

## Create your Docker Swarm

|

||||

|

||||

A Docker Swarm can contain as little as a single master node and begins by running the `docker swarm init` command. It's important if you have more than one node that you specify an `--advertise-addr` value.

|

||||

|

||||

Initialize Docker Swarm on `node-1`.

|

||||

|

||||

```

|

||||

$ docker-machine ssh node-1 -- docker swarm init --advertise-addr $(docker-machine ip node-1)

|

||||

```

|

||||

|

||||

> If you opted to deploy a single node, then skip to the next section.

|

||||

|

||||

When deploying more than a single Docker host take a note of the command to add a worker to the Swarm. This output contains your *join token*.

|

||||

|

||||

> If you lose it you can get a new one any time with the command: `docker swarm join-token worker` or `manager`.

|

||||

|

||||

```

|

||||

Swarm initialized: current node (je5vne1f974fea60ca75q2cac) is now a manager.

|

||||

|

||||

To add a worker to this swarm, run the following command:

|

||||

|

||||

docker swarm join --token SWMTKN-1-239v0epdnhuol2ldguttncoaleovy29hnwyglde0kba1owc9ng-9488z5we2invwcn69f5flq7uu 104.131.69.233:2377

|

||||

|

||||

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

|

||||

```

|

||||

|

||||

Add `node-2` to the Swarm, using the `docker swarm join..` command returned when initializing the master.

|

||||

```

|

||||

$ docker-machine ssh node-2 -- docker swarm join --token SWMTKN-1-239v0epdnhuol2ldguttncoaleovy29hnwyglde0kba1owc9ng-9488z5we2invwcn69f5flq7uu 104.131.69.233:2377

|

||||

```

|

||||

|

||||

Repeat for `node-3`.

|

||||

|

||||

```

|

||||

$ docker-machine ssh node-3 -- docker swarm join --token SWMTKN-1-239v0epdnhuol2ldguttncoaleovy29hnwyglde0kba1owc9ng-9488z5we2invwcn69f5flq7uu 104.131.69.233:2377

|

||||

```
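Rather than copying the token by hand, a script can pull it out of the `docker swarm init` output. The sketch below assumes the join command appears on a single line, as in the output shown above; `parse_join_command` is a hypothetical helper.

```python
import re

JOIN_RE = re.compile(r"docker swarm join --token (?P<token>\S+) (?P<addr>\S+:\d+)")


def parse_join_command(init_output):
    """Extract the worker join token and manager address from the init output."""
    found = JOIN_RE.search(init_output)
    if not found:
        raise ValueError("no 'docker swarm join' command found in output")
    return found.group("token"), found.group("addr")


sample = """Swarm initialized: current node (je5vne1f974fea60ca75q2cac) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-239v0epdnhuol2ldguttncoaleovy29hnwyglde0kba1owc9ng-9488z5we2invwcn69f5flq7uu 104.131.69.233:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
"""
token, addr = parse_join_command(sample)
print(addr)
```

The pattern deliberately requires `--token`, so the closing hint about `docker swarm join-token manager` is not mistaken for a join command.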

|

||||

|

||||

## Configure Docker CLI to use remote Swarm

|

||||

|

||||

Run this command each time you open a new shell. It tells Docker where your remote Swarm is.

|

||||

|

||||

```

|

||||

eval $(docker-machine env node-1)

|

||||

```

|

||||

|

||||

## Deploy the OpenFaaS Stack

|

||||

|

||||

This command clones the OpenFaaS GitHub repository, then checks out a stable release before deploying a Docker stack. Docker Swarm will automatically distribute your functions and OpenFaaS services across the cluster.

|

||||

|

||||

```

|

||||

$ git clone https://github.com/alexellis/faas && \
  cd faas && \
  git checkout 0.8.0 && \
  ./deploy_stack.sh

|

||||

```

|

||||

|

||||

## Test the UI

|

||||

|

||||

Within a few seconds (or minutes if on a poor WiFi connection) the API gateway and sample functions will be deployed to the Swarm cluster running on DigitalOcean.

|

||||

|

||||

Access the Gateway UI via the IP address returned by `docker-machine ip node-1` (you can also access via `node-2` and `node-3`):

|

||||

|

||||

```

|

||||

$ echo http://$(docker-machine ip node-1):8080

|

||||

```

|

||||

|

||||

Prometheus metrics can be viewed on port 9090 on a master. Fetch the IP like this:

|

||||

|

||||

```

|

||||

$ echo http://$(docker-machine ip node-1):9090

|

||||

```

|

||||

|

||||

## Deleting OpenFaaS Droplets

|

||||

|

||||

You can use `docker-machine` to delete any created Droplets if you are finished with your OpenFaaS deployment.

|

||||

|

||||

```

|

||||

docker-machine rm node-1 node-2 node-3

|

||||

```

|

||||

|

||||

## Advanced

|

||||

|

||||

### Create a Load Balancer

|

||||

|

||||

DigitalOcean provides its own *Load Balancers*, which means you only need to share or map one IP address to your DNS records or internal applications. They can also apply health checks, which ensure traffic is only routed to healthy nodes.

|

||||

|

||||

From the DigitalOcean console Networking page, open the Load Balancers tab and click *Create Load Balancer*.

|

||||

|

||||

Give the balancer a name and select the Droplets which will be balanced using the `openfaas-getting-started` tag and `NYC3` region (these were values passed to docker-machine when creating the nodes).

|

||||

|

||||

|

||||

|

||||

Update the forwarding rules to point at the Gateway on `8080` and Prometheus dashboard on `9090`:

|

||||

|

||||

|

||||

Expand the Advanced section and update the health check to use port `8080`.

|

||||

|

||||

|

||||

Click `Create Load Balancer` and after a few minutes your balancer will be available.

|

||||

|

||||

|

||||

|

||||

You can now access the OpenFaaS Gateway, Prometheus dashboard and all functions via the load balanced IP address. For example from the balancer above:

|

||||

- Gateway: http://45.55.124.29:8080

|

||||

- Prometheus: http://45.55.124.29:9090

|

||||

@ -1,5 +0,0 @@

|

||||

# Deployment guide for Kubernetes

|

||||

|

||||

This page has moved to the official documentation site:

|

||||

|

||||

https://docs.openfaas.com/deployment/kubernetes/

|

||||

@ -1,5 +0,0 @@

|

||||

# Deployment guide for Docker Swarm

|

||||

|

||||

This page has moved to the official documentation site:

|

||||

|

||||

https://docs.openfaas.com/deployment/docker-swarm/

|

||||

@ -1,211 +0,0 @@

|

||||

# Deployment guide for Docker Swarm on ARM

|

||||

|

||||

> Note: The best place to start if you're new to OpenFaaS is the README file in the [openfaas/faas](https://github.com/openfaas/faas/blob/master/README.md) repository.

|

||||

|

||||

## 32-bit ARM (armhf) - i.e. Raspberry Pi 2 or 3

|

||||

|

||||

**OpenFaaS and Cloud Native Services**

|

||||

|

||||

Special Docker images are required for hardware other than a 64-bit PC. Most projects do not yet provide these, so we have custom versions of the following:

|

||||

|

||||

* Prometheus

|

||||

* AlertManager

|

||||

|

||||

The OpenFaaS containers are built manually on a Raspberry Pi 2 or 3:

|

||||

|

||||

* Gateway

|

||||

* Faas-netesd

|

||||

|

||||

NATS Streaming is now provided as a multi-architecture image.

|

||||

|

||||

The function watchdog is cross-compiled through our CI process on a 64-bit PC.

|

||||

|

||||

**Functions on armhf**

|

||||

|

||||

When running OpenFaaS on ARM a key consideration is that we need to use ARM base Docker images for our functions. This typically means swapping out the `FROM` instruction while the function code stays the same. More of the official base images are becoming multi-architecture (e.g. `alpine:3.7`), which means that the build stage will pull the appropriate image for the hardware performing the build.

|

||||

|

||||

### Initialize Swarm Mode

|

||||

|

||||

You can create a single-host Docker Swarm on your ARM device with a single command. You don't need any software beyond Docker 17.06 or greater.

|

||||

|

||||

This is how you initialize your master node:

|

||||

|

||||

```

|

||||

# docker swarm init

|

||||

```

|

||||

|

||||

If you have more than one IP address you may need to pass a string like `--advertise-addr eth0` to this command.

|

||||

|

||||

Take a note of the join token

|

||||

|

||||

* Join any workers you need

|

||||

|

||||

Log into any worker nodes and type in the output from `docker swarm init` noted earlier. If you've lost this info then type `docker swarm join-token worker` on the master and then enter the output on the worker.

|

||||

|

||||

It's also important to pass the `--advertise-addr` string to any hosts which have a public IP address.

|

||||

|

||||

> Note: check whether you need to enable firewall rules for the [Docker Swarm ports listed here](https://docs.docker.com/engine/swarm/swarm-tutorial/).

|

||||

|

||||

### Deploy the stack

|

||||

|

||||

Clone OpenFaaS and then checkout the latest stable release:

|

||||

|

||||

```sh

|

||||

$ git clone https://github.com/openfaas/faas && \
  cd faas && \
  git checkout 0.7.8 && \
  ./deploy_stack.armhf.sh

|

||||

```

|

||||

|

||||

`./deploy_stack.armhf.sh` can be run at any time and includes a set of sample functions. You can read more about these in the [TestDrive document](https://github.com/openfaas/faas/blob/master/TestDrive.md)

|

||||

|

||||

### Test out the UI

|

||||

|

||||

Within a few seconds (or minutes if on a poor WiFi connection) the API gateway and sample functions will be pulled into your local Docker library and you will be able to access the UI at:

|

||||

|

||||

http://localhost:8080

|

||||

|

||||

> If you find that `localhost` times out then try to force an IPv4 address such as http://127.0.0.1:8080.

|

||||

|

||||

### Grab the CLI

|

||||

|

||||

The FaaS-CLI is an OpenFaaS client through which you can build, push, deploy and invoke your functions. One command is all you need to download and install the FaaS-CLI appropriate to your architecture:

|

||||

|

||||

```sh

|

||||

$ curl -sL https://cli.openfaas.com | sudo sh

|

||||

```

|

||||

|

||||

To quickly test the FaaS-CLI check the version:

|

||||

|

||||

```sh

|

||||

$ faas-cli version

|

||||

```

|

||||

A successful installation should yield a response similar to this:

|

||||

```

|

||||

  ___                   _____           ____
 / _ \ _ __   ___ _ __ |  ___|_ _  __ _/ ___|
| | | | '_ \ / _ \ '_ \| |_ / _` |/ _` \___ \
| |_| | |_) |  __/ | | |  _| (_| | (_| |___) |
 \___/| .__/ \___|_| |_|_|  \__,_|\__,_|____/
      |_|

Commit: 8c7ebc3e7304873ba42b8d8e95d492499935f278
Version: 0.6.4

|

||||

```

|

||||

|

||||

### Using the CLI

|

||||

|

||||

As mentioned at the start of the guide, when running OpenFaaS on ARM a key consideration is the use of ARM base images for our functions. Full templates are provided for ARM supported languages meaning that the ARM template variant needs to be specified when using `new` to create a new function.

|

||||

|

||||

We'll adapt the content of an [earlier blog](https://blog.alexellis.io/quickstart-openfaas-cli/) to demonstrate.

|

||||

|

||||

Create a work area for your functions:

|

||||

```

|

||||

$ mkdir -p ~/functions && \

|

||||

cd ~/functions

|

||||

```

|

||||

|

||||

Next use the CLI to create a new function skeleton:

|

||||

|

||||

```

|

||||

$ faas-cli new callme --lang node-armhf
Folder: callme created.
  ___                   _____           ____
 / _ \ _ __   ___ _ __ |  ___|_ _  __ _/ ___|
| | | | '_ \ / _ \ '_ \| |_ / _` |/ _` \___ \
| |_| | |_) |  __/ | | |  _| (_| | (_| |___) |
 \___/| .__/ \___|_| |_|_|  \__,_|\__,_|____/
      |_|

Function created in folder: callme
Stack file written: callme.yml

|

||||

```

|

||||

|

||||

Now we'll have the following structure:

|

||||

```sh

|

||||

├── callme
│   ├── handler.js
│   └── package.json
├── callme.yml
└── template

|

||||

```

|

||||

|

||||

At the time of writing the Go, Node and Python templates can all be specified with an `-armhf` suffix.

|

||||

|

||||

Having used the ARM template we are almost ready to build as we normally would. Before we do, quickly edit `callme.yml` changing the image line to reflect your username and tag in the architecture - `alexellis/callme:armhf`

|

||||

|

||||

```

|

||||

$ faas-cli build -f callme.yml

|

||||

Building: callme.

|

||||

Clearing temporary build folder: ./build/callme/

|

||||

Preparing ./callme/ ./build/callme/function

|

||||

Building: alexellis/callme:armhf with node template. Please wait..

|

||||

docker build -t alexellis/callme:armhf .

|

||||

Sending build context to Docker daemon 8.704kB

|

||||

Step 1/16 : FROM alpine:3.8

|

||||

---> 16566b7ed19e

|

||||

...

|

||||

|

||||

Step 16/16 : CMD fwatchdog

|

||||

---> Running in 53d04c1631aa

|

||||

---> f5e1266b0d32

|

||||

Removing intermediate container 53d04c1631aa

|

||||

Successfully built 9dff89fae926

|

||||

Successfully tagged alexellis/callme:armhf

|

||||

Image: alexellis/callme:armhf built.

|

||||

[0] < Builder done.

|

||||

```

|

||||

|

||||

Now use the CLI to push the newly built function to Docker Hub:

|

||||

|

||||

```

|

||||

$ faas-cli push -f callme.yml

|

||||

```

|

||||

|

||||

If you have a single-node Swarm you won't need to push your image to the Docker Hub.

|

||||

|

||||

### Deploy

|

||||

|

||||

Deploy your function using the CLI. The CLI will call into the RESTful API on the OpenFaaS Gateway.

|

||||

|

||||

```

|

||||

$ faas-cli deploy -f callme.yml

|

||||

Deploying: callme.

|

||||

No existing service to remove

|

||||

Deployed.

|

||||

200 OK

|

||||

URL: http://localhost:8080/function/callme

|

||||

```

|

||||

|

||||

**Timing out?**

|

||||

If you find that `localhost` times out, or the response mentions `[::1]` then try to force an IPv4 address such as http://127.0.0.1:8080:

|

||||

|

||||

```

|

||||

$ faas-cli deploy -f callme.yml --gateway http://127.0.0.1:8080

|

||||

```

|

||||

|

||||

Alternatively edit your /etc/hosts file and remove the entry for [::1] and localhost.
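To see why `localhost` may behave differently from `127.0.0.1`, it can help to list the addresses a name resolves to. The following is a minimal sketch using only the Python standard library; the helper name `resolved_addresses` is hypothetical and not part of OpenFaaS or `faas-cli`.

```python
import socket


def resolved_addresses(host: str) -> list:
    """Return the sorted, de-duplicated addresses that `host` resolves to."""
    # getaddrinfo returns one entry per (family, socket type) combination;
    # the address string is the first element of the sockaddr tuple.
    infos = socket.getaddrinfo(host, None)
    return sorted({info[4][0] for info in infos})
```

If `resolved_addresses("localhost")` includes `::1` on your machine, the CLI may be trying IPv6 first, in which case passing `--gateway http://127.0.0.1:8080` as shown above avoids the lookup entirely.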

|

||||

|

||||

### Invoke the function

|

||||

|

||||

Test your newly deployed function on ARM:

|

||||

|

||||

```

|

||||

$ faas-cli invoke callme

|

||||

Reading from STDIN - hit (Control + D) to stop.

|

||||

This is my message

|

||||

|

||||

{"status":"done"}

|

||||

```
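The function can also be called over HTTP at the URL printed by `faas-cli deploy`. The sketch below assumes the gateway is reachable at `http://127.0.0.1:8080` and that a plain POST to `/function/<name>` is accepted without authentication; the helper names `build_invoke_request` and `invoke` are illustrative only.

```python
import urllib.request


def build_invoke_request(gateway: str, function_name: str, payload: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST request for a function route on the gateway."""
    url = gateway.rstrip("/") + "/function/" + function_name
    return urllib.request.Request(url, data=payload, method="POST")


def invoke(gateway: str, function_name: str, payload: bytes, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    request = build_invoke_request(gateway, function_name, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the stack running, `invoke("http://127.0.0.1:8080", "callme", b"This is my message")` would be the equivalent of the `faas-cli invoke` call above.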

|

||||

|

||||

## Deploy on Swarm with 64-bit ARM (aarch64)

|

||||

|

||||

Support for 64-bit ARM (the `aarch64` architecture) is currently in testing/development.

|

||||

|

||||

See the equivalent files as above with the arm64 suffix.

|

||||

|

||||

```

|

||||

$ docker stack deploy func -c docker-compose.arm64.yml

|

||||

```

|

||||

|

||||

Currently Node.js is supported via the `node-arm64` template.

|

||||

@ -1,30 +0,0 @@

|

||||

## Interacting with other containers and services

|

||||

|

||||

Here are three ways to interact with other containers or services within your Docker Swarm or Kubernetes cluster.

|

||||

|

||||

### Option 1 - host port

|

||||

|

||||

Bind a port to the host and use the host's IP / DNS-entry in the function.

|

||||

|

||||

This method is agnostic to Kubernetes or Docker Swarm and works for existing applications which may not even use containers. It's especially useful for existing services or databases such as MySQL, Redis or Mongo.

|

||||

|

||||

If you refer to an IP or DNS entry, it would be best to use an environment variable in your function to configure the address.
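As a rough illustration, a Python function could read the address from its environment like this. The variable names `REDIS_HOST` and `REDIS_PORT` and the helper `service_address` are assumptions made for this sketch; 6379 is the usual Redis port.

```python
import os


def service_address(env=os.environ):
    """Return (host, port) for the backing service, taken from the environment."""
    # REDIS_HOST / REDIS_PORT are hypothetical names; fall back to local defaults
    # so the function still starts in a development environment.
    host = env.get("REDIS_HOST", "127.0.0.1")
    port = int(env.get("REDIS_PORT", "6379"))
    return host, port
```

Keeping the address out of the code means the same image can be deployed unchanged against a different host by altering only the environment.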

|

||||

|

||||

### Option 2 - Swarm service

|

||||

|

||||

If you are creating a new container such as MySQL then you can create a Swarm service (or Kubernetes deployment) and simply specify the network as an additional parameter. That makes it resolvable via DNS and doesn't require a port to be exposed on the host.

|

||||

|

||||

```

|

||||

docker service create --name redis --network=func_functions redis:latest

|

||||

```

|

||||

|

||||

Using a service (or Kubernetes deployment) offers the advantages of orchestration, scheduling and high availability.

|

||||

|

||||

### Option 3 - use an attachable network

|

||||

|

||||

For Docker Swarm:

|

||||

|

||||

Go to your docker-compose YAML file and uncomment the last line which says "attachable". Now delete and re-deploy OpenFaaS (this must remove func_functions from `docker network ls`). Now when you re-create you can use `docker run --name redis --net=func_functions redis:latest`.

|

||||

|

||||

This can be useful for running privileged containers such as when you need to bind-mount a Docker socket for doing builds or accessing system devices.

|

||||

|

||||

@ -1,226 +0,0 @@

|

||||

## Status: deprecated

|

||||

|

||||

This guide was written in September 2017 and is not maintained by the project or community.

|

||||

|

||||

If someone wants to maintain it, feel free to raise an issue and volunteer to do that.

|

||||

|

||||

Official documentation is at [https://docs.openfaas.com/](https://docs.openfaas.com/)

|

||||

|

||||

# Guide: Integrate Kong with your OpenFaaS cluster on Docker Swarm

|

||||

|

||||

[Kong](https://getkong.org) is an API gateway that provides features such as security, logging, and rate limiting. By putting this in front of OpenFaaS you can quickly get access to these things and a lot more via [the many other plugins written](https://getkong.org/plugins/) for it.

|

||||

|

||||

Below is a demo of how you could use Kong as an authentication layer for OpenFaaS.

|

||||

|

||||

## Setup OpenFaaS

|

||||

|

||||

If you haven't already set up OpenFaaS then you can follow one of the deployment guides available here:

|

||||

|

||||

* [Docker Swarm](https://github.com/openfaas/faas/blob/master/guide/deployment_swarm.md)

|

||||

* [Kubernetes](https://github.com/openfaas/faas/blob/master/guide/deployment_k8s.md)

|

||||

|

||||

## Setup Kong

|

||||

|

||||

Kong stores its configuration in Postgres, so we'll create a Postgres and Kong service then run a one-off migration too.

|

||||

|

||||

Deploy Postgres and optionally set the `POSTGRES_PASSWORD`

|

||||

|

||||

```

|

||||

$ docker service create --name kong-database \

|

||||

--network func_functions --detach=false \

|

||||

--constraint 'node.role == manager' \

|

||||

-e "POSTGRES_USER=kong" \

|

||||

-e "POSTGRES_DB=kong" \

|

||||

-e "POSTGRES_PASSWORD=secretpassword" \

|

||||

postgres:9.5

|

||||

```

|

||||

|

||||

Now we will use the Kong image to populate default configuration in the Postgres database:

|

||||

|

||||

```

|

||||

$ docker service create --name=kong-migrations \

|

||||

--network func_functions --detach=false --restart-condition=none \

|

||||

-e "KONG_DATABASE=postgres" \

|

||||

-e "KONG_PG_HOST=kong-database" \

|

||||

-e "KONG_PG_PASSWORD=secretpassword" \

|

||||

kong:latest kong migrations up

|

||||

```

|

||||

|

||||

The last service is Kong itself:

|

||||

|

||||

```

|

||||

$ docker service create --name kong \

|

||||

--network func_functions --detach=false \

|

||||

--constraint 'node.role == manager' \

|

||||

-e "KONG_DATABASE=postgres" \

|

||||

-e "KONG_PG_HOST=kong-database" \

|

||||

-e "KONG_PG_PASSWORD=secretpassword" \

|

||||

-e "KONG_PROXY_ACCESS_LOG=/dev/stdout" \

|

||||

-e "KONG_ADMIN_ACCESS_LOG=/dev/stdout" \

|

||||

-e "KONG_PROXY_ERROR_LOG=/dev/stderr" \

|

||||

-e "KONG_ADMIN_ERROR_LOG=/dev/stderr" \

|

||||

-p 8000:8000 \

|

||||

-p 8443:8443 \

|

||||

kong:latest

|

||||

```

|

||||

|

||||

**Doing things the right way**

|

||||

|

||||

Kong has an admin port which you can expose by adding `-p 8001:8001`. In this guide we will hide the port from the outset so that if you do not have a firewall configured yet, there is less risk of someone gaining access.

|

||||

|

||||

Create a `curl` command alias so we can talk to the Kong admin without exposing its ports to the network.

|

||||

|

||||

```

|

||||

$ alias kong_admin_curl='docker exec $(docker ps -q -f name="kong\.") curl'

|

||||

```

|

||||

See that Kong admin is up and running

|

||||

```

|

||||

$ kong_admin_curl -i localhost:8001

|

||||

HTTP/1.1 200

|

||||

...

|

||||

```

|

||||

|

||||

## Use Kong to secure OpenFaaS

|

||||

|

||||

Proxy OpenFaaS's functions through Kong

|

||||

```

|

||||

$ kong_admin_curl -X POST \

|

||||

--url http://localhost:8001/apis/ \

|

||||

--data 'name=function' \

|

||||

--data 'uris=/function' \

|

||||

--data 'upstream_url=http://gateway:8080/function'

|

||||

|

||||

$ curl localhost:8000/function/func_echoit -d 'hello world'

|

||||

hello world

|

||||

```

|

||||

|

||||

Also enable routing for async invocation of functions

|

||||

```

|

||||

$ kong_admin_curl -X POST \

|

||||

--url http://localhost:8001/apis/ \

|

||||

--data 'name=async-function' \

|

||||

--data 'uris=/async-function' \

|

||||

--data 'upstream_url=http://gateway:8080/async-function'

|

||||

|

||||

$ curl localhost:8000/async-function/func_echoit -d 'hello world'

|

||||

hello world

|

||||

```

|

||||

|

||||

### Require basic authentication

|

||||

|

||||

Enable the basic-auth plugin in Kong

|

||||

|

||||

```

|

||||

$ kong_admin_curl -X POST http://localhost:8001/plugins \

|

||||

--data "name=basic-auth" \

|

||||

--data "config.hide_credentials=true"

|

||||

```

|

||||

|

||||

Create a consumer with credentials

|

||||

|

||||

```

|

||||

$ kong_admin_curl -d "username=aladdin" http://localhost:8001/consumers/

|

||||

|

||||

$ kong_admin_curl -X POST http://localhost:8001/consumers/aladdin/basic-auth \

|

||||

--data "username=aladdin" \

|

||||

--data "password=OpenSesame"

|

||||

```

|

||||

|

||||

Verify that authentication works

|

||||

|

||||

```

|

||||

$ curl localhost:8000/function/func_echoit -d 'hello world'

|

||||

{"message":"Unauthorized"}

|

||||

|

||||

$ curl localhost:8000/function/func_echoit -d 'hello world' \

|

||||

-H 'Authorization: Basic xxxxxx'

|

||||

{"message":"Invalid authentication credentials"}

|

||||

|

||||

$ echo -n aladdin:OpenSesame | base64

|

||||

YWxhZGRpbjpPcGVuU2VzYW1l

|

||||

|

||||

$ curl localhost:8000/function/func_echoit -d 'hello world' \

|

||||

-H 'Authorization: Basic YWxhZGRpbjpPcGVuU2VzYW1l'

|

||||

hello world

|

||||

```
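The `Authorization` header value is simply the Base64 encoding of `username:password`, as the `echo -n ... | base64` step shows. A minimal Python sketch of the same calculation follows; the helper name `basic_auth_header` is hypothetical.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Build the HTTP Basic Authorization header for the given credentials."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}
```

Calling `basic_auth_header("aladdin", "OpenSesame")` yields the same token used in the `curl` example. Base64 is an encoding, not encryption, so the credentials are only protected in transit once TLS is in place.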

|

||||

|

||||

Now let's expose the /ui directory so we can securely use the web GUI

|

||||

|

||||

```

|

||||

$ kong_admin_curl -i -X POST \

|

||||

--url http://localhost:8001/apis/ \

|

||||

--data 'name=ui' \

|

||||

--data 'uris=/ui' \

|

||||

--data 'upstream_url=http://gateway:8080/ui'

|

||||

```

|

||||

|

||||

Additionally we need to expose /system/functions since the UI makes Ajax requests to it

|

||||

|

||||

```

|

||||

$ kong_admin_curl -i -X POST \

|

||||

--url http://localhost:8001/apis/ \

|

||||

--data 'name=system-functions' \

|

||||

--data 'uris=/system/functions' \

|

||||

--data 'upstream_url=http://gateway:8080/system/functions'

|

||||

```
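The four `kong_admin_curl` calls above all post the same three form fields, differing only in name and path. As a sketch, the form bodies could be generated like this; `GATEWAY`, `ROUTES` and `kong_api_payload` are names invented for the example, and the field names are the ones used in the commands above.

```python
from urllib.parse import urlencode

# Upstream address of the OpenFaaS gateway on the func_functions network.
GATEWAY = "http://gateway:8080"

# (name, path) pairs registered with Kong in this guide.
ROUTES = [
    ("function", "/function"),
    ("async-function", "/async-function"),
    ("ui", "/ui"),
    ("system-functions", "/system/functions"),
]


def kong_api_payload(name: str, path: str) -> str:
    """Return the form-encoded body for one POST to Kong's /apis/ endpoint."""
    return urlencode({"name": name, "uris": path, "upstream_url": GATEWAY + path})


if __name__ == "__main__":
    for route_name, route_path in ROUTES:
        print(kong_api_payload(route_name, route_path))
```

Each printed line is what one `--data ...` group would send; nothing is posted to Kong by this snippet.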

|

||||

|

||||

Verify that the UI is secure

|

||||

|

||||

```

|

||||

$ curl -i localhost:8000/ui/ \

|

||||

-H 'Authorization: Basic YWxhZGRpbjpPcGVuU2VzYW1l'

|

||||

|

||||

HTTP/1.1 200 OK

|

||||

Content-Type: text/html; charset=utf-8

|

||||

...

|

||||

```

|

||||

|

||||

Now visit http://localhost:8000/ui/ in your browser where you will be asked for credentials.

|

||||

|

||||

### Add SSL

|

||||

|

||||

Basic authentication does not protect against man-in-the-middle attacks, so let's add SSL to encrypt the communication.

|

||||

|

||||

Create a cert. Here in the demo we are creating self-signed certs, but in production you should skip this step and use your existing certificates (or get some from Let's Encrypt).

|

||||

```

|

||||

$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 \

|

||||

-keyout /tmp/selfsigned.key -out /tmp/selfsigned.pem \

|

||||

-subj "/C=US/ST=CA/L=L/O=OrgName/OU=IT Department/CN=example.com"

|

||||

```

|

||||

|

||||

Add cert to Kong

|

||||

|

||||

```

|

||||

$ kong_admin_curl -X POST http://localhost:8001/certificates \

|

||||

-F "cert=$(cat /tmp/selfsigned.pem)" \

|

||||

-F "key=$(cat /tmp/selfsigned.key)" \

|

||||

-F "snis=example.com"

|

||||

|

||||

HTTP/1.1 201 Created

|

||||

...

|

||||

```

|

||||

|

||||

Put the cert in front of OpenFaaS

|

||||

|

||||

```

|

||||

$ kong_admin_curl -i -X POST http://localhost:8001/apis \

|

||||

-d "name=ssl-api" \

|

||||

-d "upstream_url=http://gateway:8080" \

|

||||

-d "hosts=example.com"

|

||||

HTTP/1.1 201 Created

|

||||

...

|

||||

```

|

||||

|

||||

Verify that the cert is now in use. Note the '-k' parameter is just here to work around the fact that we are using self-signed certs.

|

||||

```

|

||||

$ curl -k https://localhost:8443/function/func_echoit \

|

||||

-d 'hello world' -H 'Host: example.com' \

|

||||

-H 'Authorization: Basic YWxhZGRpbjpPcGVuU2VzYW1l'

|

||||

hello world

|

||||

```

|

||||

|

||||

## Configure your firewall

|

||||

|

||||

Between OpenFaaS and Kong a lot of ports are exposed on your host machine. Most importantly you should hide port 8080, since that is where OpenFaaS's functions live, which is what you were trying to secure in the first place. In the end it is best to expose only 8000 or 8443 outside your network, depending on whether you added SSL.

|

||||

|

||||

Another option concerning port 8000 is to expose both 8000 and 8443 and enable [https_only](https://getkong.org/docs/latest/proxy/#the-https_only-property) which is used to notify clients to upgrade to https from http.

|

||||

@ -1,3 +0,0 @@

|

||||

## Notes on load-testing or performance testing

|

||||

|

||||

This page has [moved to the OpenFaaS docs](https://docs.openfaas.com/architecture/performance/).

|

||||

@ -1,116 +0,0 @@

|

||||

# Secrets with OpenFaaS and Docker Swarm

|

||||

|

||||

OpenFaaS deploys functions as Docker Swarm Services; as a result there are several features that we can leverage to simplify the development and subsequent deployment of functions to hardened production environments.

|

||||

|

||||

## Using Environment Variables

|

||||

|

||||

First, and least secure, is the ability to set environment variables at deploy time. For example, you might want to set the `NODE_ENV` or `DEBUG` variable. The stack file `samples.yml` below sets `NODE_ENV`:

|

||||

|

||||

```yaml

|

||||

provider:
  name: openfaas
  gateway: http://localhost:8080

functions:
  nodehelloenv:
    lang: Dockerfile
    skip_build: true
    image: functions/nodehelloenv:latest
    environment:
      NODE_ENV: production

|

||||

```

|

||||

|

||||

You can then deploy and invoke the function via the `faas-cli` using

|

||||

|

||||

```sh

|

||||

$ faas-cli deploy -f ./samples.yml

|

||||

$ faas-cli invoke nodehelloenv

|

||||

Hello from a production machine

|

||||

```

|

||||

|

||||

Notice that it is using the value of `NODE_ENV` from the stack file; the default is `dev`.

|

||||

|

||||

## Using Swarm Secrets

|

||||

|

||||

_Note_: Secrets are mounted as files to `/var/openfaas/secrets`, prior to version `0.8.2` secrets were mounted to `/run/secrets`. The example functions demonstrate a smooth upgrade implementation.

|

||||

|

||||

For sensitive values we can leverage the [Docker Swarm Secrets](https://docs.docker.com/engine/swarm/secrets/) feature to safely store the needed values and give our functions access to them. Using secrets is a two-step process. Take the [ApiKeyProtected](../sample-functions/ApiKeyProtected) example function: when we deploy this function we provide a secret key that it uses to authenticate requests. First we must add a secret to the swarm

|

||||

|

||||

```sh

|

||||

docker secret create secret_api_key ~/secrets/secret_api_key.txt

|

||||

```

|

||||

|

||||

where `~/secrets/secret_api_key.txt` is a simple text file that might look like this

|

||||

|

||||

```txt

|

||||

R^YqzKzSJw51K9zPpQ3R3N

|

||||

```

|

||||

|

||||

Equivalently, you can pipe the value to docker via stdin like this

|

||||

|

||||

```sh

|

||||

echo "R^YqzKzSJw51K9zPpQ3R3N" | docker secret create secret_api_key -

|

||||

```

|

||||

|

||||

Now, with the secret defined, we can deploy the function like this

|

||||

|

||||

```sh

|

||||

$ echo "R^YqzKzSJw51K9zPpQ3R3N" | docker secret create secret_api_key -

|

||||

$ faas-cli deploy -f ./samples.yml --secret secret_api_key

|

||||

$ curl -H "Content-Type: application/json" \

|

||||

-X POST \

|

||||

-H "X-Api-Key: R^YqzKzSJw51K9zPpQ3R3N" \

|

||||

-d '{}' \

|

||||

http://localhost:8080/function/protectedapi

|

||||

|

||||

Unlocked the function!

|

||||

|

||||

$ curl -H "Content-Type: application/json" \

|

||||

-X POST \

|

||||

-H "X-Api-Key: wrong_key" \

|

||||

-d '{}' \

|

||||

http://localhost:8080/function/protectedapi

|

||||

|

||||

Access denied!

|

||||

```

|

||||

|

||||

Your `samples.yml` stack file looks like this

|

||||

|

||||

```yaml

|

||||

provider:
  name: openfaas
  gateway: http://localhost:8080

functions:
  protectedapi:
    lang: Dockerfile
    skip_build: true
    image: functions/api-key-protected:latest

|

||||

```

|

||||

|

||||

Note that unlike the `envVars` in the first example, we do not provide the secret value, just a list of names: `"secrets": ["secret_api_key"]`. The secret value has already been securely stored in the Docker swarm. One really great result of this type of configuration is that you can simplify your function code by always referencing the same secret name, no matter the environment, the only change is how the environments are configured.
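Inside the function, the secret is read as a file. The sketch below shows one way a function might handle both mount locations mentioned in the note above, trying `/var/openfaas/secrets` first and falling back to `/run/secrets`. It is not the code of the sample function; `read_secret` and `SECRET_DIRS` are names made up for illustration.

```python
from pathlib import Path

# Current mount point first, then the location used before 0.8.2.
SECRET_DIRS = ("/var/openfaas/secrets", "/run/secrets")


def read_secret(name: str, search_dirs=SECRET_DIRS) -> str:
    """Return the value of the named secret from the first directory that has it."""
    for directory in search_dirs:
        candidate = Path(directory) / name
        if candidate.is_file():
            # Strip the trailing newline that `echo ... | docker secret create` adds.
            return candidate.read_text().strip()
    raise FileNotFoundError(f"secret {name!r} not found in {list(search_dirs)}")
```

A handler would then call `read_secret("secret_api_key")` and compare the result with the incoming `X-Api-Key` header.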

|

||||

|

||||

<!-- ## Advanced Swarm Secrets

|

||||

|

||||

For various reasons, you might add a secret to the Swarm under a different name than you want to use in your function, e.g. if you are rotating a secret key. The Docker Swarm secret specification allows us some advanced configuration of secrets [by supplying a comma-separated value specifying the secret](https://docs.docker.com/engine/reference/commandline/service_create/#create-a-service-with-secrets). This is best shown in an example. Let's change the API key on our example function.

|

||||

|

||||

First add a new secret key

|

||||

|

||||

```sh

|

||||

echo "newqzKzSJw51K9zPpQ3R3N" | docker secret create secret_api_key_2 -

|

||||

```

|

||||

|

||||

Then, remove our old function and redeploy it with the new secret mounted in the same place as the old secret

|

||||

|

||||

```sh

|

||||

$ faas-cli deploy -f ./samples.yml --secret source=secret_api_key_2,target=secret_api_key --replace

|

||||

$ curl -H "Content-Type: application/json" \

|

||||

-X POST \

|

||||

-H "X-Api-Key: newqzKzSJw51K9zPpQ3R3N" \

|

||||

-d '{}' \

|

||||

http://localhost:8080/function/protectedapi

|

||||

|

||||

Unlocked the function!

|

||||

```

|

||||

|

||||

We reuse the sample stack file as in the previous section. -->

|

||||

@ -1,137 +0,0 @@

|

||||

# Use a self-hosted registry with OpenFaaS

|

||||

|

||||

If you're using OpenFaaS on a single host, then you don't need to push your images to a registry. They will just be used from the local Docker library.

|

||||

|

||||

If you are using a remote server or a multi-node cluster then you can push your function's image to a registry or the Docker Hub.

|

||||

|

||||

This describes how to use OpenFaaS in a swarm with your own local registry for hosting function images.

|

||||

|

||||

## Set up a swarm

|

||||

|

||||

For this example let's presume you want to create a swarm of 3 nodes. Use node1 as the manager.

|

||||

|

||||

This is adapted from the [Swarm deployment guide](https://github.com/openfaas/faas/blob/master/guide/deployment_swarm.md).

|

||||

|

||||

```

|

||||

$ docker swarm init --advertise-addr $(hostname -i)

|

||||

```

|

||||

|

||||

Now in node2 and node3 paste the output from the above command.

|

||||

```

|

||||

$ docker swarm join --token ...

|

||||

```

|

||||

|

||||

## Install OpenFaaS

|

||||

```

|

||||

$ git clone https://github.com/openfaas/faas && \

|

||||

cd faas && \

|

||||

./deploy_stack.sh

|

||||

```

|

||||

|

||||

## Start a registry

|

||||

|

||||

Add it to the swarm and use the same network as OpenFaaS.

|

||||

|

||||

```

|

||||

docker service create -p 5000:5000 \

|

||||

--network func_functions --detach=false \

|

||||

--name registry registry:2

|

||||

```

|

||||

|

||||

Here we are using a basic local registry. You can deploy it elsewhere and use volumes depending on your persistence needs. If you would like to [use authentication with your registry, this guide may be helpful](https://github.com/openfaas/faas/blob/master/docs/managing-images.md#deploy-your-own-private-docker-registry).

|

||||

|

||||

|

||||

## Install the CLI

|

||||

|

||||

This is a helper for using and deploying functions to OpenFaaS.

|

||||

|

||||

On Linux

|

||||

|

||||

```

|

||||

$ curl -sSL https://cli.openfaas.com | sh

|

||||

```

|

||||

|

||||

On a Mac if you're using brew then you can type in

|

||||

```

|

||||

$ brew install faas-cli

|

||||

```

|

||||

|

||||

## Create a function

|

||||

|

||||

Generate a function from a template

|

||||

|

||||

```

|

||||

$ mkdir -p ~/functions && cd ~/functions

|

||||

$ faas-cli new hello-python --lang=python --gateway=http://localhost:8080

|

||||

```

|

||||

|

||||

Update the print method in *hello-python/handler.py*

|

||||

```

|

||||

import socket

|

||||

def handle(req):

|

||||

    print("Hello world from " + socket.gethostname())

|

||||

```

|

||||

|

||||

Update the image in *hello-python.yml* to read

|

||||

```

|

||||

image: localhost:5000/hello-python

|

||||

```

|

||||

|

||||

Let's build the function

|

||||

```

|

||||

$ faas-cli build -f hello-python.yml

|

||||

```

|

||||

|

||||

Upload the function to our registry

|

||||

```

|

||||

$ faas-cli push -f hello-python.yml

|

||||

```

|

||||

|

||||

Check that the image made it to the registry

|

||||

```

|

||||

$ curl localhost:5000/v2/hello-python/tags/list

|

||||

{"name":"hello-python","tags":["latest"]}

|

||||

```
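The same check can be scripted by parsing the JSON body returned by the registry's `tags/list` endpoint. This is a small sketch that works on the response text only, so it makes no assumption about how the request is sent; `image_has_tag` is a hypothetical helper name.

```python
import json


def image_has_tag(tags_list_body: str, tag: str = "latest") -> bool:
    """Return True if the tags/list response body contains the given tag."""
    document = json.loads(tags_list_body)
    # Treat a missing or null "tags" field as an empty list.
    return tag in (document.get("tags") or [])
```

For the response shown above, `image_has_tag('{"name":"hello-python","tags":["latest"]}')` is true, which confirms the push succeeded before the local image is deleted.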

|

||||

|

||||

Now we will delete the local image to be sure the deployment happens from the registry

|

||||

```

|

||||

$ docker rmi localhost:5000/hello-python

|

||||

$ docker images | grep hello | wc -l

|

||||

0

|

||||

```

|

||||

|

||||

Deploy the function from the registry

|

||||

```

|

||||

$ faas-cli deploy -f hello-python.yml

|

||||

Deploying: hello-python.

|

||||

|

||||

Deployed. 200 OK.

|

||||

URL: http://localhost:8080/function/hello-python

|

||||

```

|

||||

|

||||

See that the function works

|

||||

```

|

||||

$ curl -X POST localhost:8080/function/hello-python

|

||||

Hello world from 281c2858c673

|

||||

```

|

||||

|

||||

## Update the function

|

||||

|

||||

hello-python/handler.py:

|

||||

```

|

||||

import socket

|

||||

def handle(req):

|

||||

    print("Hello EARTH from " + socket.gethostname())

|

||||

```

|

||||

Now we can rebuild, push and deploy it to the swarm.

|

||||

```

|

||||

$ faas-cli build -f hello-python.yml && \

|

||||

faas-cli push -f hello-python.yml && \

|

||||

faas-cli deploy -f hello-python.yml

|

||||

```

|

||||

|

||||

See that the update works

|

||||

```

|

||||

$ curl -X POST localhost:8080/function/hello-python

|

||||

Hello EARTH from 9dacd2333c1c

|

||||

```

|

||||

@ -1,180 +0,0 @@

|

||||

# Integrate Traefik with your OpenFaaS cluster

|

||||

|

||||

> Træfik (pronounced like traffic) is a modern HTTP reverse proxy and

|

||||

> load balancer made to deploy microservices with ease.

|

||||

> - traefik.io

|

||||

|

||||

In addition, [Traefik](https://traefik.io) offers Basic Authentication and easy SSL setup, using LetsEncrypt. It

|

||||

supports several backends, such as Docker Swarm and Kubernetes.

|

||||

|

||||

## Setup OpenFaaS

|

||||

|

||||

OpenFaaS setup and configuration instructions can be found here:

|

||||

|

||||

* [Docker Swarm](https://github.com/alexellis/faas/blob/master/guide/deployment_swarm.md)

|

||||

* [Kubernetes](https://github.com/alexellis/faas/blob/master/guide/deployment_k8s.md)

|

||||

|

||||

To quickly setup with Docker Swarm:

|

||||

```

|

||||

$ docker swarm init --advertise-addr $(hostname -i)

|

||||

|

||||

$ git clone https://github.com/alexellis/faas

|

||||

$ cd faas

|

||||

$ ./deploy_stack.sh

|

||||

```

|

||||

|

||||

## Configure Traefik for Basic Authentication

|

||||

|

||||

### Generate an MD5 hashed password

|

||||

|

||||

Use htpasswd to generate a hashed password

|

||||

```

|

||||

$ htpasswd -c ./password.txt user

|

||||

```

|

||||

Add a new password when prompted. The new credentials can be found in

|

||||

the `password.txt` file.

|

||||

|

||||

### Add Traefik configuration to docker-compose.yml

|

||||

|

||||

Add an entry under `services` with the Traefik configuration

|

||||

```

|

||||

# docker-compose.yml
version: "3.2"
services:
    traefik:
        image: traefik:v1.3
        command: -c --docker=true
            --docker.swarmmode=true
            --docker.domain=traefik
            --docker.watch=true
            --web=true
            --debug=true
            --defaultEntryPoints='http'
            --entryPoints='Name:http Address::80'
        ports:
            - 80:80
            - 8080:8080
        volumes:
            - "/var/run/docker.sock:/var/run/docker.sock"
        networks:
            - functions
        deploy:
            placement:
                constraints: [node.role == manager]

|

||||

```

|

||||

|

||||

### Update the Gateway service

|

||||

|

||||

Traefik requires some service labels to discover the gateway service.

|

||||

Update the gateway configuration to remove the port property and add

|

||||

the appropriate labels.

|

||||

```

|

||||

# docker-compose.yml
...
    gateway:
        ...
        # ports:
        #     - 8080:8080
        ...
        deploy:
            labels:
                - traefik.port=8080
                - traefik.frontend.rule=PathPrefix:/ui,/system,/function
                - traefik.frontend.auth.basic=user:$$apr1$$MU....4XHRJ3. #copy/paste the contents of password.txt here
        ...

|

||||

```
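Note that the hash in the label above is written with doubled dollar signs. Compose files treat a single `$` as the start of a variable substitution, so each `$` in the `htpasswd` output has to be written as `$$`. A minimal sketch of that conversion follows; the helper name `escape_for_compose` is hypothetical.

```python
def escape_for_compose(htpasswd_line: str) -> str:
    """Double every '$' so the value survives Compose variable interpolation."""
    # Strip the trailing newline left by reading password.txt, then escape.
    return htpasswd_line.strip().replace("$", "$$")
```

You would pass the single line from `password.txt` through this helper and paste the result after `traefik.frontend.auth.basic=`.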

|

||||

Rather than publicly exposing port 8080, the added `traefik.port` label will

|

||||

make the gateway service available to Traefik on port 8080, but not

|

||||

publicly. Requests will now pass through Traefik and be forwarded on. The

|

||||