Mirror of https://github.com/openfaas/faas.git, synced 2025-06-08 16:26:47 +00:00

Remove sample-functions in favour of newer examples

Signed-off-by: Alex Ellis (OpenFaaS Ltd) <alex@openfaas.com>

Parent: 1ee7db994c
Commit: 40bb3581b7

@@ -1,15 +0,0 @@
FROM --platform=${TARGETPLATFORM:-linux/amd64} ghcr.io/openfaas/classic-watchdog:0.1.4 as watchdog

FROM --platform=${TARGETPLATFORM:-linux/amd64} alpine:3.16.0

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

# Populate example here
# ENV fprocess="wc -l"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

HEALTHCHECK --interval=5s CMD [ -e /tmp/.lock ] || exit 1
CMD ["fwatchdog"]

@@ -1,7 +0,0 @@
## AlpineFunction

This is a base image for Alpine Linux which already has the watchdog added and configured with a healthcheck.

This image is published on the Docker Hub as `functions/alpine:latest`.

To deploy it, make sure you specify an "fprocess" value at runtime, e.g. `sha512sum` or `wc -l`. See the docker-compose.yml file for more details on usage.
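This README depends on the `fprocess` variable. The classic watchdog starts that command for each request, writes the request body to the command's standard input, and returns its standard output.

The Python sketch below models only that single step. The name `invoke_fprocess` is hypothetical and the 10-second timeout is an arbitrary choice. The real watchdog is written in Go and also deals with headers, its own timeout settings and error reporting, none of which is reproduced here.

```python
import shlex
import subprocess
import sys


def invoke_fprocess(fprocess: str, body: bytes, timeout: float = 10.0) -> bytes:
    """Run `fprocess` once with `body` on stdin and return its stdout.

    Simplified model of the classic watchdog: one fresh process per request.
    """
    # "wc -l" -> ["wc", "-l"]; no shell is used, so pipes and redirects are not interpreted.
    argv = shlex.split(fprocess)
    completed = subprocess.run(
        argv,
        input=body,
        capture_output=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if the process exits non-zero
    )
    return completed.stdout


if __name__ == "__main__":
    # A portable stand-in for an fprocess such as "wc -l" or "sha512sum":
    # a Python one-liner that upper-cases whatever arrives on stdin.
    demo = shlex.quote(sys.executable) + " -c " + shlex.quote(
        "import sys; sys.stdout.write(sys.stdin.read().upper())"
    )
    print(invoke_fprocess(demo, b"hello"))  # b'HELLO'
```

Splitting the string with `shlex` instead of handing it to a shell is a choice made for this sketch. It keeps quoting predictable: `wc -l` becomes the argument list `["wc", "-l"]`.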

@@ -1,3 +0,0 @@
#!/bin/sh

docker build -f Dockerfile.armhf -t functions/alpine:latest-armhf .

@@ -1,7 +0,0 @@
### Api-Key-Protected sample

Please see [apikey-secret](../apikey-secret/README.md)

See the [secure secret management guide](../../guide/secure_secret_management.md) for instructions on how to use this function.

When calling via the gateway, pass the additional header "X-Api-Key". If it matches the `secret_api_key` value, the function grants access; otherwise access is denied.
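The check described here compares an incoming `X-Api-Key` header with the `secret_api_key` secret. Below is a minimal Python sketch of that comparison. It rests on three assumptions:

* The classic watchdog exposes request headers as `Http_`-prefixed environment variables, so `X-Api-Key` would arrive as `Http_X_Api_Key`.
* The secret is mounted as a file.
* The mount path shown is only an example, since it varies by orchestrator.

`hmac.compare_digest` is used so the comparison does not reveal how many leading characters matched.

```python
import hmac
from typing import Mapping

# Assumed mount location for the secret; the real path depends on the orchestrator.
SECRET_PATH = "/var/openfaas/secrets/secret_api_key"
# Assumed name of the environment variable carrying the X-Api-Key header.
HEADER_ENV = "Http_X_Api_Key"


def load_secret(path: str = SECRET_PATH) -> str:
    """Read the secret file and drop surrounding whitespace such as a trailing newline."""
    with open(path, encoding="utf-8") as f:
        return f.read().strip()


def is_authorized(env: Mapping[str, str], secret: str) -> bool:
    """Grant access only when the supplied header exactly matches the secret."""
    supplied = env.get(HEADER_ENV, "")
    if not supplied or not secret:
        # Missing header or empty secret: always deny.
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode("utf-8"), secret.encode("utf-8"))
```

Inside a handler this would be called as `is_authorized(os.environ, load_secret())`. A real function would then print a response on success, or write an error and exit non-zero on denial.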

@@ -1,19 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM artemklevtsov/r-alpine:latest

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY handler.R .

ENV fprocess="Rscript handler.R"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

CMD ["fwatchdog"]

@@ -1,42 +0,0 @@
---
title: "BaseFunction for R"
output: github_document
---

```{r setup, include=FALSE}
knitr::opts_chunk$set(echo = TRUE)
```

Use this OpenFaaS function to run R.

**Deploy the base R function**

(Make sure you have already deployed OpenFaaS with ./deploy_stack.sh in the root of this GitHub repository.)

* Option 1 - click *Create a new function* on the FaaS UI

* Option 2 - use the [faas-cli](https://github.com/openfaas/faas-cli/) (experimental)

```
# curl -sSL https://get.openfaas.com | sudo sh

# faas-cli -action=deploy -image=functions/base:R-3.4.1-alpine -name=baser
200 OK
URL: http://localhost:8080/function/baser
```

**Say Hi with input**

`curl` is a good way to test the function.

```
$ curl http://localhost:8080/function/baser -d "test"
```

**Customize the transformation**

If you want to customise the transformation, edit the Dockerfile or the fprocess variable and create a new function.

**Remove the function**

You can remove the function with `docker service rm baser`.
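The `curl` call in this README is an HTTP POST of the request body to the gateway's `/function/<name>` route. A standard-library Python equivalent might look like the sketch below.

`build_request` and `invoke_function` are hypothetical helper names. The gateway address `http://localhost:8080` and the function name `baser` are taken from the README's example, not from any fixed default.

```python
import urllib.request


def build_request(gateway: str, function: str, body: bytes) -> urllib.request.Request:
    """Build the POST that `curl <gateway>/function/<function> -d <body>` would send."""
    url = f"{gateway.rstrip('/')}/function/{function}"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        # curl -d defaults to this content type, so mirror it here.
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def invoke_function(gateway: str, function: str, body: bytes, timeout: float = 10.0) -> bytes:
    """Send the request and return the response body; HTTP errors raise urllib.error.HTTPError."""
    with urllib.request.urlopen(build_request(gateway, function, body), timeout=timeout) as resp:
        return resp.read()
```

Against a running gateway with `baser` deployed, `invoke_function("http://localhost:8080", "baser", b"test")` returns whatever response body the function produces. Building the request separately from sending it makes the URL and method easy to inspect without a network.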

@@ -1,34 +0,0 @@
BaseFunction for R
================

Use this FaaS function to run R.

**Deploy the base R function**

(Make sure you have already deployed FaaS with ./deploy\_stack.sh in the root of this GitHub repository.)

- Option 1 - click *Create a new function* on the FaaS UI

- Option 2 - use the [faas-cli](https://github.com/openfaas/faas-cli/) (experimental)

<!-- -->

# curl -sSL https://get.openfaas.com | sudo sh

# faas-cli -action=deploy -image=functions/base:R-3.4.1-alpine -name=baser
200 OK
URL: http://localhost:8080/function/baser

**Say Hi with input**

`curl` is a good way to test the function.

$ curl http://localhost:8080/function/baser -d "test"

**Customize the transformation**

If you want to customise the transformation, edit the Dockerfile or the fprocess variable and create a new function.

**Remove the function**

You can remove the function with `docker service rm baser`.

@@ -1,5 +0,0 @@
#!/bin/sh

echo "Building functions/base:R-3.4.1-alpine"
docker build -t functions/base:R-3.4.1-alpine .


@@ -1,7 +0,0 @@
#!/usr/bin/env Rscript

f <- file("stdin")
open(f)
line<-readLines(f, n=1, warn = FALSE)

write(paste0("Hi ", line), stderr())

@@ -1,16 +0,0 @@
## Base function examples

Examples of base functions are provided here.

Each one reads the request from the watchdog and prints it back, resulting in an HTTP 200.

| Language | Docker image | Notes |
|------------------------|-----------------------------------------|----------------------------------------|
| Node.js | functions/base:node-6.9.1-alpine | Node.js built on Alpine Linux |
| Coffeescript | functions/base:coffeescript-1.12-alpine | Coffeescript/Nodejs built on Alpine Linux |
| Golang | functions/base:golang-1.7.5-alpine | Golang compiled on Alpine Linux |
| Python | functions/base:python-2.7-alpine | Python 2.7 built on Alpine Linux |
| Java | functions/base:openjdk-8u121-jdk-alpine | OpenJDK built on Alpine Linux |
| Dotnet Core | functions/base:dotnet-sdk | Microsoft dotnet core SDK |
| Busybox / shell | functions/alpine:latest | Busybox contains useful binaries which can be turned into a FaaS function such as `sha512sum` or `cat` |
| R | functions/base:R-3.4.1-alpine | R lang ready on Alpine Linux |
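The README says each base function echoes the request back and that this results in an HTTP 200. The Python sketch below shows one way a finished process could be mapped to an HTTP reply.

It assumes, rather than reproduces, the classic watchdog's behaviour:

* Exit code 0 yields a 200 with stdout as the body.
* Any other exit code yields a 500.

`WatchdogReply` and `to_http_reply` are hypothetical names. Headers, timeouts and stderr options are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WatchdogReply:
    """Hypothetical container for the HTTP status and body sent back to the caller."""

    status: int
    body: bytes


def to_http_reply(returncode: int, stdout: bytes, stderr: bytes) -> WatchdogReply:
    """Map a finished fprocess run to an HTTP reply (assumed, simplified semantics)."""
    if returncode == 0:
        # The echo functions end up here: whatever they printed becomes a 200 body.
        return WatchdogReply(200, stdout)
    # Any failure becomes a 500; prefer the process's own error output if it wrote any.
    return WatchdogReply(500, stderr or b"fprocess exited with code %d" % returncode)
```

For an echo function given `hello`, the reply would be a 200 whose body is the echoed text.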

@@ -1,17 +0,0 @@
FROM toricls/gnucobol:latest

RUN apt-get update && apt-get install -y curl \
    && curl -sL https://github.com/openfaas/faas/releases/download/0.13.0/fwatchdog > /usr/bin/fwatchdog \
    && chmod +x /usr/bin/fwatchdog \
    && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

WORKDIR /root/

COPY handler.cob .
RUN cobc -x handler.cob
ENV fprocess="./handler"

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

CMD ["fwatchdog"]


@ -1,8 +0,0 @@

|

||||

GNUCobol

|

||||

=========

|

||||

|

||||

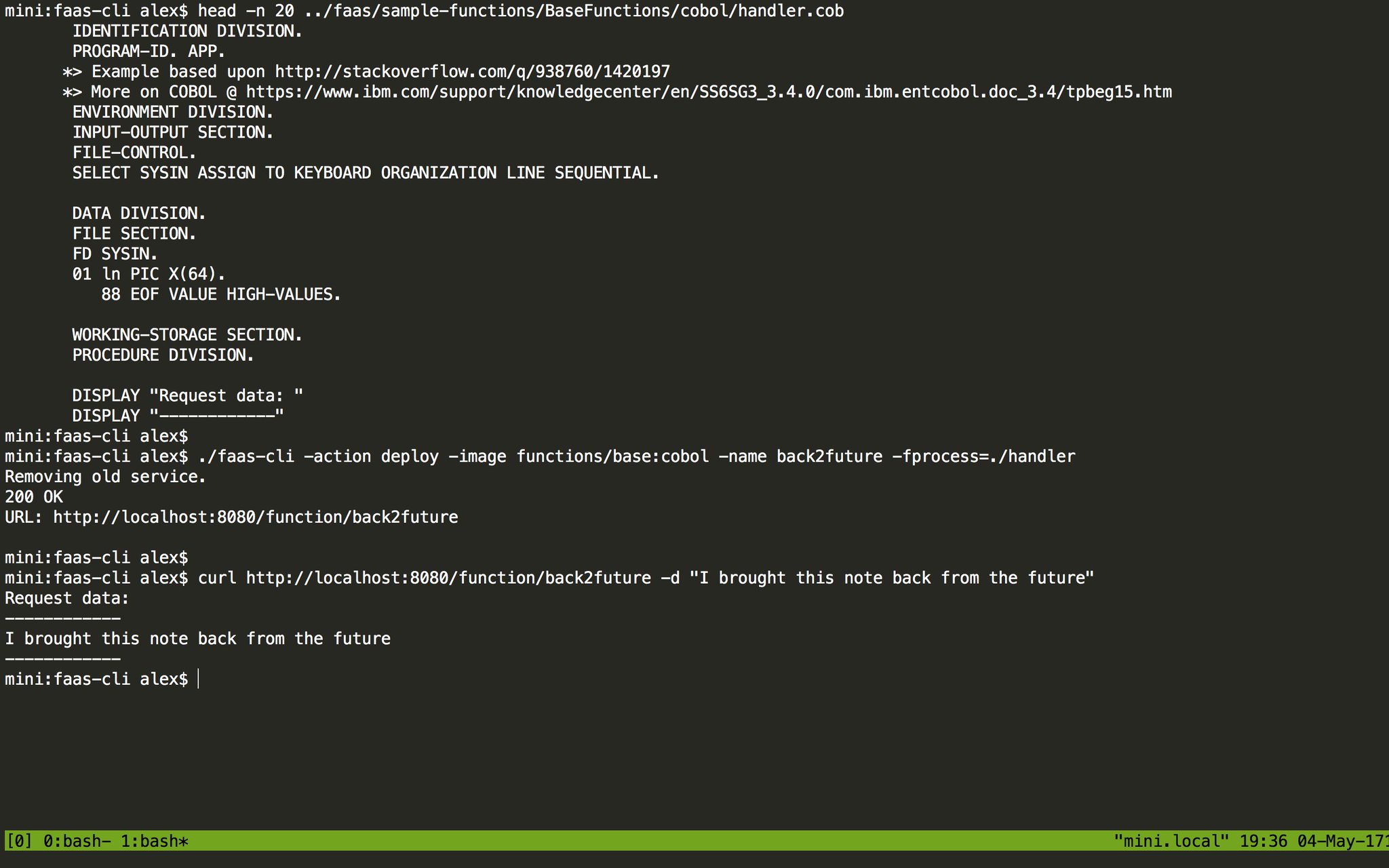

Any binary can become a FaaS process, even Cobol through GNUCobol.

|

||||

|

||||

Here's an example of deploying this base function to FaaS which reads from stdin (called SYSIN in Cobol) and prints it to the screen.

|

||||

|

||||

|

||||

@@ -1,4 +0,0 @@
#!/bin/sh

echo "Building functions/base:cobol"
docker build -t functions/base:cobol .

@@ -1,38 +0,0 @@
       IDENTIFICATION DIVISION.
       PROGRAM-ID. APP.
      *> Example based upon http://stackoverflow.com/q/938760/1420197
      *> More on COBOL @ https://www.ibm.com/support/knowledgecenter/en/SS6SG3_3.4.0/com.ibm.entcobol.doc_3.4/tpbeg15.htm
       ENVIRONMENT DIVISION.
       INPUT-OUTPUT SECTION.
       FILE-CONTROL.
           SELECT SYSIN ASSIGN TO KEYBOARD ORGANIZATION LINE SEQUENTIAL.

       DATA DIVISION.
       FILE SECTION.
       FD SYSIN.
       01 ln PIC X(64).
           88 EOF VALUE HIGH-VALUES.

       WORKING-STORAGE SECTION.
       PROCEDURE DIVISION.

           DISPLAY "Request data: "
           DISPLAY "------------"

           OPEN INPUT SYSIN
           READ SYSIN
               AT END SET EOF TO TRUE
           END-READ
           PERFORM UNTIL EOF


               DISPLAY ln

               READ SYSIN
                   AT END SET EOF TO TRUE
               END-READ
           END-PERFORM
           CLOSE SYSIN

           DISPLAY "------------"
           STOP RUN.

@@ -1,6 +0,0 @@
       IDENTIFICATION DIVISION.
       PROGRAM-ID. HELLO-WORLD.
       PROCEDURE DIVISION.
           DISPLAY 'FaaS running COBOL in a container!'.
           STOP RUN.


@@ -1,23 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM node:6.9.1-alpine

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY package.json .

RUN npm install -g coffee-script && \
    npm i

COPY handler.coffee .

ENV fprocess="coffee handler.coffee"

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

USER 1000

CMD ["fwatchdog"]

@@ -1,5 +0,0 @@
#!/bin/sh

echo "Building functions/base:coffeescript-1.12-alpine"
docker build -t functions/base:coffeescript-1.12-alpine .


@@ -1,7 +0,0 @@
getStdin = require 'get-stdin'

handler = (req) -> console.log req

getStdin()
  .then (val) -> handler val
  .catch (e) -> console.error e.stack

@@ -1,16 +0,0 @@
{
  "name": "NodejsBase",
  "version": "1.0.0",
  "description": "",
  "main": "faas_index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "coffeescript": "^1.12.4",
    "get-stdin": "^5.0.1"
  }
}

@@ -1,21 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM mcr.microsoft.com/dotnet/core/sdk:2.1 as build

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

ENV DOTNET_CLI_TELEMETRY_OPTOUT 1

WORKDIR /application/
COPY src src
WORKDIR /application/src
RUN dotnet restore
RUN dotnet build

FROM build as runner
RUN groupadd -g 1000 -r faas && useradd -r -g faas -u 1000 faas -m
USER 1000
ENV fprocess="dotnet ./bin/Debug/netcoreapp2.1/root.dll"
EXPOSE 8080
CMD ["fwatchdog"]

@@ -1,9 +0,0 @@
# DnCore Example
DotNet seems to have an issue where the following message can be seen on STDOUT:
```
realpath(): Permission denied
realpath(): Permission denied
realpath(): Permission denied
```

These messages can be ignored; the issue can be followed at: https://github.com/dotnet/core-setup/issues/4038

@@ -1,5 +0,0 @@
bin/
obj/
.nuget/
.dotnet/
.templateengine/

@@ -1,25 +0,0 @@
using System;
using System.Text;

namespace root
{
    class Program
    {
        private static string getStdin() {
            StringBuilder buffer = new StringBuilder();
            string s;
            while ((s = Console.ReadLine()) != null)
            {
                buffer.AppendLine(s);
            }
            return buffer.ToString();
        }

        static void Main(string[] args)
        {
            string buffer = getStdin();

            Console.WriteLine(buffer);
        }
    }
}

@@ -1,8 +0,0 @@
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFramework>netcoreapp2.1</TargetFramework>
  </PropertyGroup>

</Project>

@@ -1,23 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM golang:1.13-alpine
ENV CGO_ENABLED=0

MAINTAINER alexellis2@gmail.com
ENTRYPOINT []

WORKDIR /go/src/github.com/openfaas/faas/sample-functions/golang
COPY . /go/src/github.com/openfaas/faas/sample-functions/golang

RUN go install

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

ENV fprocess "/go/bin/golang"
HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

CMD [ "fwatchdog"]

@@ -1,16 +0,0 @@
FROM golang:1.9.7-windowsservercore
MAINTAINER alexellis2@gmail.com
ENTRYPOINT []

WORKDIR /go/src/github.com/openfaas/faas/sample-functions/golang
COPY . /go/src/github.com/openfaas/faas/sample-functions/golang
RUN go build

ADD https://github.com/openfaas/faas/releases/download/0.13.0/fwatchdog.exe /watchdog.exe

EXPOSE 8080
ENV fprocess="golang.exe"

ENV suppress_lock="true"

CMD ["watchdog.exe"]

@@ -1,10 +0,0 @@
BaseFunction for Golang
=========================

You will find a Dockerfile for Linux and one for Windows so that you can run serverless functions in a mixed-OS swarm.

Dockerfile for Windows
* [Dockerfile.win](https://github.com/openfaas/faas/blob/master/sample-functions/BaseFunctions/golang/Dockerfile.win)

This function reads STDIN then prints it back - you can use it to create an "echo service" or as a basis to write your own function.


@@ -1,4 +0,0 @@
#!/bin/sh

echo "Building functions/base:golang-1.7.5-alpine"
docker build -t functions/base:golang-1.7.5-alpine .

@@ -1,16 +0,0 @@
package main

import (
	"fmt"
	"io/ioutil"
	"log"
	"os"
)

func main() {
	input, err := ioutil.ReadAll(os.Stdin)
	if err != nil {
		log.Fatalf("Unable to read standard input: %s", err.Error())
	}
	fmt.Println(string(input))
}

@@ -1 +0,0 @@
*.class

@@ -1,21 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM openjdk:8u121-jdk-alpine

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY Handler.java .
RUN javac Handler.java

ENV fprocess="java Handler"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

CMD ["fwatchdog"]


@@ -1,30 +0,0 @@
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;

public class Handler {

    public static void main(String[] args) {
        try {

            String input = readStdin();
            System.out.print(input);

        } catch(IOException e) {
            e.printStackTrace();
        }
    }

    private static String readStdin() throws IOException {
        BufferedReader br = new BufferedReader(new InputStreamReader(System.in));
        String input = "";
        while(true) {
            String line = br.readLine();
            if(line==null) {
                break;
            }
            input = input + line + "\n";
        }
        return input;
    }
}

@@ -1,4 +0,0 @@
#!/bin/sh

echo "Building functions/base:openjdk-8u121-jdk-alpine"
docker build -t functions/base:openjdk-8u121-jdk-alpine .

@@ -1,22 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM node:6.9.1-alpine

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY package.json .

RUN npm i
COPY handler.js .

USER 1000

ENV fprocess="node handler.js"

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

CMD ["fwatchdog"]


@@ -1,5 +0,0 @@
#!/bin/sh

echo "Building functions/base:node-6.9.1-alpine"
docker build -t functions/base:node-6.9.1-alpine .


@@ -1,13 +0,0 @@
"use strict"

let getStdin = require('get-stdin');

let handle = (req) => {
    console.log(req);
};

getStdin().then(val => {
    handle(val);
}).catch(e => {
    console.error(e.stack);
});

@@ -1,15 +0,0 @@
{
  "name": "NodejsBase",
  "version": "1.0.0",
  "description": "",
  "main": "faas_index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "get-stdin": "^5.0.1"
  }
}

@@ -1,20 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM python:2.7-alpine

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY handler.py .

ENV fprocess="python handler.py"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

HEALTHCHECK --interval=1s CMD [ -e /tmp/.lock ] || exit 1

CMD ["fwatchdog"]


@@ -1,5 +0,0 @@
#!/bin/sh

echo "Building functions/base:python-2.7-alpine"
docker build -t functions/base:python-2.7-alpine .


@@ -1,11 +0,0 @@
import sys

def get_stdin():
    buf = ""
    for line in sys.stdin:
        buf = buf + line
    return buf

if __name__ == "__main__":
    st = get_stdin()
    print(st)

3

sample-functions/CHelloWorld/.gitignore

vendored

@@ -1,3 +0,0 @@
template
build
master.zip

@@ -1,20 +0,0 @@
Hello World in C
===================

This is hello world in C using GCC and Alpine Linux.

It also makes use of a multi-stage build and a `scratch` container for the runtime.

```
$ faas-cli build -f ./stack.yml
```

If pushing to a remote registry, change the name from `alexellis` to your own Hub account.

```
$ faas-cli push -f ./stack.yml
$ faas-cli deploy -f ./stack.yml
```

Then invoke via `curl`, `faas-cli` or the UI.


@@ -1,32 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM alpine:3.16.0 as builder
COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app

RUN apk add --no-cache gcc \
    musl-dev
COPY main.c .

RUN gcc main.c -static -o /main \
    && chmod +x /main \
    && /main

FROM scratch

COPY --from=builder /main /
COPY --from=builder /usr/bin/fwatchdog /

ENV fprocess="/main"
ENV suppress_lock=true

COPY --from=builder /etc/passwd /etc/passwd

USER 1000

CMD ["fwatchdog"]


@@ -1,9 +0,0 @@
#include<stdio.h>

int main() {

    printf("Hello world\n");

    return 0;
}


@@ -1,9 +0,0 @@
provider:
  name: openfaas
  gateway: http://127.0.0.1:8080

functions:
  helloc:
    lang: Dockerfile
    handler: ./src
    image: alexellis/helloc:0.1

@@ -1,21 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM alpine:3.16.0 as ship
RUN apk --update add nodejs npm

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY package.json .
COPY handler.js .
COPY parser.js .
COPY sample.json .

RUN npm i
ENV fprocess="node handler.js"

USER 1000

CMD ["fwatchdog"]

@@ -1,48 +0,0 @@
"use strict"
let fs = require('fs');
let sample = require("./sample.json");
let cheerio = require('cheerio');
let Parser = require('./parser');
var request = require("request");

const getStdin = require('get-stdin');

getStdin().then(content => {
    let request = JSON.parse(content);
    handle(request, request.request.intent);
});

function tellWithCard(speechOutput) {
    sample.response.outputSpeech.text = speechOutput
    sample.response.card.content = speechOutput
    sample.response.card.title = "Captains";
    console.log(JSON.stringify(sample));
    process.exit(0);
}

function handle(request, intent) {
    createList((sorted) => {
        let speechOutput = "There are currently " + sorted.length + " Docker captains.";
        tellWithCard(speechOutput);
    });
}

let createList = (next) => {
    let parser = new Parser(cheerio);

    request.get("https://www.docker.com/community/docker-captains", (err, res, text) => {
        let captains = parser.parse(text);

        let valid = 0;
        let sorted = captains.sort((x,y) => {
            if(x.text > y.text) {
                return 1;
            }
            else if(x.text < y.text) {
                return -1;
            }
            return 0;
        });
        next(sorted);
    });
};

@@ -1 +0,0 @@
docker build -t captainsintent . ; docker service rm CaptainsIntent ; docker service create --network=functions --name CaptainsIntent captainsintent

@@ -1,17 +0,0 @@
{
  "name": "CaptainsIntent",
  "version": "1.0.0",
  "description": "",
  "main": "handler.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "cheerio": "^0.22.0",
    "get-stdin": "^5.0.1",
    "request": "^2.79.0"
  }
}

@@ -1,32 +0,0 @@
"use strict"


module.exports = class Parser {

    constructor(cheerio) {
        this.modules = {"cheerio": cheerio };
    }

    sanitize(handle) {
        let text = handle.toLowerCase();
        if(text[0]== "@") {
            text = text.substring(1);
        }
        if(handle.indexOf("twitter.com") > -1) {
            text = text.substring(text.lastIndexOf("\/")+1)
        }
        return {text: text, valid: text.indexOf("http") == -1};
    }

    parse(text) {
        let $ = this.modules.cheerio.load(text);

        let people = $("#captians .twitter_link a");
        let handles = [];
        people.each((i, person) => {
            let handle = person.attribs.href;
            handles.push(this.sanitize(handle));
        });
        return handles;
    }
};

@@ -1,24 +0,0 @@
{
  "session": {
    "sessionId": "SessionId.8b812aa4-765a-47b6-9949-b203e63c5480",
    "application": {
      "applicationId": "amzn1.ask.skill.72fb1025-aacc-4d05-a582-21344940c023"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AETXYOXCBUOCTUZE7WA2ZPZNDUMJRRZQNJ2H6NLQDVTMYXBX7JG2RA7C6PFLIM4PXVD7LIDGERLI6AJVK34KNWELGEOM33GRULMDO6XJRR77HALOUJR2YQS34UG27YCPOUGANQJDT4HMRFOWN4Z5E4VVTQ6Z5FIM6TYWFHQ2ZU6HQ47TBUMNGTQFTBIONEGELUCIEUXISRSEKOI"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.cabcff07-8aaa-4fe6-aca0-94440051fc89",
    "locale": "en-GB",
    "timestamp": "2016-12-30T19:57:45Z",
    "intent": {
      "name": "CaptainsIntent",
      "slots": {}
    }
  },
  "version": "1.0"
}

@@ -1,2 +0,0 @@
#!/bin/sh
cat request.json | node handler.js -

@@ -1,16 +0,0 @@
{
  "version": "1.0",
  "response": {
    "outputSpeech": {
      "type": "PlainText",
      "text": "There's currently 6 people in space"
    },
    "card": {
      "content": "There's currently 6 people in space",
      "title": "People in space",
      "type": "Simple"
    },
    "shouldEndSession": true
  },
  "sessionAttributes": {}
}

@@ -1,20 +0,0 @@
FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM alpine:3.16.0 as ship
RUN apk --update add nodejs npm

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

WORKDIR /application/

COPY package.json .
RUN npm i
COPY handler.js .
COPY sendColor.js .
COPY sample_response.json .

USER 1000

ENV fprocess="node handler.js"
CMD ["fwatchdog"]

@@ -1,29 +0,0 @@
{
  "session": {
    "sessionId": "SessionId.3f589830-c369-45a3-9c8d-7f5271777dd8",
    "application": {
      "applicationId": "amzn1.ask.skill.b32fb0db-f0f0-4e64-b862-48e506f4ea68"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AEUHSFGVXWOYRSM2A7SVAK47L3I44TVOG6DBCTY2ACYSCUYQ65MWDZLUBZHLDD3XEMCYRLS4VSA54PQ7QBQW6FZLRJSMP5BOZE2B52YURUOSNOWORL44QGYDRXR3H7A7Y33OP3XKMUSJXIAFH7T2ZA6EQBLYRD34BPLTJXE3PDZE3V4YNFYUECXQNNH4TRG3ZBOYH2BF4BTKIIQ"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.9ddf1ea0-c582-4dd0-8547-359f71639c1d",
    "locale": "en-GB",
    "timestamp": "2017-01-28T11:02:59Z",
    "intent": {
      "name": "ChangeColorIntent",
      "slots": {
        "LedColor": {
          "name": "LedColor",
          "value": "blue"
        }
      }
    }
  },
  "version": "1.0"
}

@@ -1,29 +0,0 @@
{
  "session": {
    "sessionId": "SessionId.ce487e6e-9975-4c95-b305-2a0b354f3ee0",
    "application": {
      "applicationId": "amzn1.ask.skill.dc0c07b4-e18d-4f6f-a571-90cd1aca3bc6"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AEFGBTDIPL5K3HYKXGNAXDHANW2MPMFRRM5QX7FCMUEOJYCGNIVUTVR7IUUHZ2VFPIDNOPIUBWZLGYSSLOBLZ6FR5FRUJMP3OAZKUI3ZZ4ADLR7M4ROICY5H5RFASQLTV5IUNIOTA7OP6N2ZNCXXZDXS7BVGPB6GKIWZAJRHOGUYSBHX2JMSLNPQ6V6HMFKGKZLAWLHKGYEUDBI"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.ee57cb67-a465-4d93-9ea8-29229f2634bc",
    "locale": "en-GB",
    "timestamp": "2017-01-28T11:32:04Z",
    "intent": {
      "name": "ChangeColorIntent",
      "slots": {
        "LedColor": {
          "name": "LedColor",
          "value": "green"
        }
      }
    }
  },
  "version": "1.0"
}

@@ -1,54 +0,0 @@
"use strict"
let fs = require('fs');
let sample = require("./sample_response.json");
let SendColor = require('./sendColor');
let sendColor = new SendColor("alexellis.io/officelights")

const getStdin = require('get-stdin');

getStdin().then(content => {
    let request = JSON.parse(content);
    handle(request, request.request.intent);
});

function tellWithCard(speechOutput, request) {
    sample.response.session = request.session;
    sample.response.outputSpeech.text = speechOutput;
    sample.response.card.content = speechOutput;
    sample.response.card.title = "Office Lights";

    console.log(JSON.stringify(sample));
    process.exit(0);
}

function handle(request, intent) {
    if(intent.name == "TurnOffIntent") {
        let req = {r:0,g:0,b:0};
        var speechOutput = "Lights off.";
        sendColor.sendColor(req, () => {
            return tellWithCard(speechOutput, request);
        });
    } else {
        let colorRequested = intent.slots.LedColor.value;
        let req = {r:0,g:0,b:0};
        if(colorRequested == "red") {
            req.r = 255;
        } else if(colorRequested== "blue") {
            req.b = 255;
        } else if (colorRequested == "green") {
            req.g = 255;
        } else if (colorRequested == "white") {
            req.r = 255;
            req.g = 103;
            req.b = 23;
        } else {
            let msg = "I heard "+colorRequested+ " but can only show: red, green, blue and white.";
            return tellWithCard(msg, request);
        }

        sendColor.sendColor(req, () => {
            var speechOutput = "OK, " + colorRequested + ".";
            return tellWithCard(speechOutput, request);
        });
    }
}

@ -1,29 +0,0 @@

{
  "session": {
    "sessionId": "SessionId.3f589830-c369-45a3-9c8d-7f5271777dd8",
    "application": {
      "applicationId": "amzn1.ask.skill.b32fb0db-f0f0-4e64-b862-48e506f4ea68"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AEUHSFGVXWOYRSM2A7SVAK47L3I44TVOG6DBCTY2ACYSCUYQ65MWDZLUBZHLDD3XEMCYRLS4VSA54PQ7QBQW6FZLRJSMP5BOZE2B52YURUOSNOWORL44QGYDRXR3H7A7Y33OP3XKMUSJXIAFH7T2ZA6EQBLYRD34BPLTJXE3PDZE3V4YNFYUECXQNNH4TRG3ZBOYH2BF4BTKIIQ"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.9ddf1ea0-c582-4dd0-8547-359f71639c1d",
    "locale": "en-GB",
    "timestamp": "2017-01-28T11:02:59Z",
    "intent": {
      "name": "TurnOffIntent",
      "slots": {
        "LedColor": {
          "name": "LedColor",
          "value": "red"
        }
      }
    }
  },
  "version": "1.0"
}
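In the sample request above the intent sits at `request.intent` and the colour at `request.intent.slots.LedColor.value`. `handle()` dereferences both directly, so a request without an `intent` or `slots` object throws. A defensive sketch, where `readIntent` is a hypothetical helper that returns `null` fields instead of throwing:

```javascript
"use strict";

// Extracts the request type, intent name and optional LedColor slot value
// from an Alexa-style request body shaped like the sample above.
// Missing parts of the payload yield null rather than a TypeError.
function readIntent(body) {
    const request = body && body.request;
    const intent = request && request.intent;
    const slots = (intent && intent.slots) || {};
    const ledColor = slots.LedColor;
    return {
        type: (request && request.type) || null,
        name: (intent && intent.name) || null,
        color: (ledColor && ledColor.value) || null,
    };
}
```

A handler built on this would branch on `name` and treat a `null` colour as an unsupported request rather than crashing the function process.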

@ -1 +0,0 @@

docker build -t changecolorintent . ; docker service rm ChangeColorIntent ; docker service create --network=functions --name ChangeColorIntent changecolorintent

@ -1,16 +0,0 @@

{
  "name": "src",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "get-stdin": "^5.0.1",
    "mqtt": "^2.0.1"
  }
}

@ -1,29 +0,0 @@

{
  "session": {
    "sessionId": "SessionId.3f589830-c369-45a3-9c8d-7f5271777dd8",
    "application": {
      "applicationId": "amzn1.ask.skill.b32fb0db-f0f0-4e64-b862-48e506f4ea68"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AEUHSFGVXWOYRSM2A7SVAK47L3I44TVOG6DBCTY2ACYSCUYQ65MWDZLUBZHLDD3XEMCYRLS4VSA54PQ7QBQW6FZLRJSMP5BOZE2B52YURUOSNOWORL44QGYDRXR3H7A7Y33OP3XKMUSJXIAFH7T2ZA6EQBLYRD34BPLTJXE3PDZE3V4YNFYUECXQNNH4TRG3ZBOYH2BF4BTKIIQ"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.9ddf1ea0-c582-4dd0-8547-359f71639c1d",
    "locale": "en-GB",
    "timestamp": "2017-01-28T11:02:59Z",
    "intent": {
      "name": "ChangeColorIntent",
      "slots": {
        "LedColor": {
          "name": "LedColor",
          "value": "red"
        }
      }
    }
  },
  "version": "1.0"
}

@ -1,29 +0,0 @@

{
  "session": {
    "sessionId": "SessionId.3f589830-c369-45a3-9c8d-7f5271777dd8",
    "application": {
      "applicationId": "amzn1.ask.skill.b32fb0db-f0f0-4e64-b862-48e506f4ea68"
    },
    "attributes": {},
    "user": {
      "userId": "amzn1.ask.account.AEUHSFGVXWOYRSM2A7SVAK47L3I44TVOG6DBCTY2ACYSCUYQ65MWDZLUBZHLDD3XEMCYRLS4VSA54PQ7QBQW6FZLRJSMP5BOZE2B52YURUOSNOWORL44QGYDRXR3H7A7Y33OP3XKMUSJXIAFH7T2ZA6EQBLYRD34BPLTJXE3PDZE3V4YNFYUECXQNNH4TRG3ZBOYH2BF4BTKIIQ"
    },
    "new": true
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "EdwRequestId.9ddf1ea0-c582-4dd0-8547-359f71639c1d",
    "locale": "en-GB",
    "timestamp": "2017-01-28T11:02:59Z",
    "intent": {
      "name": "ChangeColorIntent",
      "slots": {
        "LedColor": {
          "name": "LedColor",
          "value": "blue"
        }
      }
    }
  },
  "version": "1.0"
}

@ -1,16 +0,0 @@

{
  "version": "1.0",
  "response": {
    "outputSpeech": {
      "type": "PlainText",
      "text": "OK, red."
    },
    "card": {
      "content": "OK, red.",
      "title": "Office Lights",
      "type": "Simple"
    },
    "shouldEndSession": true
  },
  "sessionAttributes": {}
}
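`tellWithCard()` fills in a `require`d copy of this template and prints it. Since the classic watchdog starts a new process for each request, mutating the shared template is harmless there. A sketch that builds the same shape as a fresh object instead, with `buildResponse` a hypothetical name:

```javascript
"use strict";

// Builds an Alexa-style response with the same shape as the template above:
// plain-text speech plus a "Simple" card whose content repeats the speech.
// A new object is returned on every call, so nothing shared is mutated.
function buildResponse(speech, title) {
    return {
        version: "1.0",
        response: {
            outputSpeech: { type: "PlainText", text: speech },
            card: { content: speech, title: title, type: "Simple" },
            shouldEndSession: true,
        },
        sessionAttributes: {},
    };
}
```

Printing `JSON.stringify(buildResponse("OK, red.", "Office Lights"))` would reproduce the template shown above.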

@ -1,40 +0,0 @@

"use strict"

var mqtt = require('mqtt');

class Send {
    constructor(topic) {
        this.topic = topic;
    }

    sendIntensity(req, done) {
        var ops = { port: 1883, host: "iot.eclipse.org" };

        var client = mqtt.connect(ops);

        client.on('connect', () => {

            let payload = req;
            let cb = () => {
                done();
            };
            client.publish(this.topic, JSON.stringify(payload), {qos: 1}, cb);
        });
    }

    sendColor(req, done) {
        var ops = { port: 1883, host: "iot.eclipse.org" };

        var client = mqtt.connect(ops);
        let cb = () => {
            done();
        };
        client.on('connect', () => {

            let payload = req;
            client.publish(this.topic, JSON.stringify(payload), {qos: 1}, cb);
        });
    }
}

module.exports = Send;
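`sendIntensity()` and `sendColor()` above open a new MQTT connection per call and never close it; the handler relies on `process.exit(0)` in `tellWithCard()` to tear it down. A sketch that closes the client once the QoS 1 publish completes, assuming an mqtt.js-style client that emits `connect` and `error` and provides `publish(topic, message, options, callback)` and `end()`. `publishAndClose` is a hypothetical helper, and the broker address is left to the caller because the public `iot.eclipse.org` broker used above may no longer be reachable:

```javascript
"use strict";

// Publishes one JSON payload on an MQTT client, then closes the connection.
// `client` is assumed to behave like an mqtt.js client (see lead-in).
// `done` is called exactly once, with an Error or null.
function publishAndClose(client, topic, payload, done) {
    let finished = false;
    const finish = (err) => {
        if (finished) {
            return; // ignore late events after the first outcome
        }
        finished = true;
        client.end();
        done(err || null);
    };

    client.once("connect", () => {
        // With QoS 1 the callback runs once the publish handshake completes.
        client.publish(topic, JSON.stringify(payload), { qos: 1 }, finish);
    });
    // Kept registered so a late "error" event is swallowed instead of thrown.
    client.on("error", finish);
}
```

With the real library the call would look roughly like `publishAndClose(mqtt.connect({ host, port: 1883 }), topic, { r: 255, g: 0, b: 0 }, done)`. Taking the client as a parameter also lets the logic be exercised with a fake `EventEmitter` and no broker.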

1

sample-functions/DockerHubStats/.gitignore

vendored

@ -1 +0,0 @@

DockerHubStats

@ -1,27 +0,0 @@

FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM golang:1.13-alpine as builder
ENV CGO_ENABLED=0

MAINTAINER alex@openfaas.com
ENTRYPOINT []

WORKDIR /go/src/github.com/openfaas/faas/sample-functions/DockerHubStats
COPY . /go/src/github.com/openfaas/faas/sample-functions/DockerHubStats
RUN set -ex && apk add make && make install

FROM alpine:3.16.0 as ship

# Needed to reach the hub
RUN apk --no-cache add ca-certificates

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog
COPY --from=builder /go/bin/DockerHubStats /usr/bin/DockerHubStats
ENV fprocess "/usr/bin/DockerHubStats"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

HEALTHCHECK --interval=5s CMD [ -e /tmp/.lock ] || exit 1
CMD ["/usr/bin/fwatchdog"]

@ -1,25 +0,0 @@

FROM golang:1.9.7-alpine as builder

MAINTAINER alex@openfaas.com
ENTRYPOINT []

RUN apk --no-cache add make curl \
    && curl -sL https://github.com/openfaas/faas/releases/download/0.13.0/fwatchdog-armhf > /usr/bin/fwatchdog \
    && chmod +x /usr/bin/fwatchdog

WORKDIR /go/src/github.com/openfaas/faas/sample-functions/DockerHubStats

COPY . /go/src/github.com/openfaas/faas/sample-functions/DockerHubStats

RUN make install

FROM alpine:3.16.0 as ship

# Needed to reach the hub
RUN apk --no-cache add ca-certificates

COPY --from=builder /usr/bin/fwatchdog /usr/bin/fwatchdog
COPY --from=builder /go/bin/DockerHubStats /usr/bin/DockerHubStats
ENV fprocess "/usr/bin/DockerHubStats"

CMD ["/usr/bin/fwatchdog"]

@ -1,5 +0,0 @@

.PHONY: install

install:
	@go install .

@ -1,81 +0,0 @@

package main

import (
	"encoding/json"
	"fmt"
	"io/ioutil"
	"log"
	"net/http"
	"os"
	"strings"
)

type dockerHubOrgStatsType struct {
	Count int `json:"count"`
}

type dockerHubRepoStatsType struct {
	PullCount int `json:"pull_count"`
}

func sanitizeInput(input string) string {
	parts := strings.Split(input, "\n")
	return strings.Trim(parts[0], " ")
}

func requestStats(repo string) []byte {
	client := http.Client{}
	res, err := client.Get("https://hub.docker.com/v2/repositories/" + repo)
	if err != nil {
		log.Fatalln("Unable to reach Docker Hub server.")
	}

	body, err := ioutil.ReadAll(res.Body)
	if err != nil {
		log.Fatalln("Unable to parse response from server.")
	}

	return body
}

func parseOrgStats(response []byte) (dockerHubOrgStatsType, error) {
	dockerHubOrgStats := dockerHubOrgStatsType{}
	err := json.Unmarshal(response, &dockerHubOrgStats)
	return dockerHubOrgStats, err
}

func parseRepoStats(response []byte) (dockerHubRepoStatsType, error) {
	dockerHubRepoStats := dockerHubRepoStatsType{}
	err := json.Unmarshal(response, &dockerHubRepoStats)
	return dockerHubRepoStats, err
}

func main() {
	input, err := ioutil.ReadAll(os.Stdin)
	if err != nil {
		log.Fatal("Unable to read standard input:", err)
	}
	request := string(input)
	if len(input) == 0 {
		log.Fatalln("A username/organisation or repository is required.")
	}

	request = sanitizeInput(request)
	response := requestStats(request)

	if strings.Contains(request, "/") {
		dockerHubRepoStats, err := parseRepoStats(response)
		if err != nil {
			log.Fatalln("Unable to parse response from Docker Hub for repository")
		} else {
			fmt.Printf("Repo: %s has been pulled %d times from the Docker Hub", request, dockerHubRepoStats.PullCount)
		}
	} else {
		dockerHubOrgStats, err := parseOrgStats(response)
		if err != nil {
			log.Fatalln("Unable to parse response from Docker Hub for user/organisation")
		} else {
			fmt.Printf("The organisation or user %s has %d repositories on the Docker hub.\n", request, dockerHubOrgStats.Count)
		}
	}
}
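`main.go` above mixes network I/O with formatting, exits through `log.Fatalln` on errors, and does not check the HTTP status, so a Docker Hub error body that still parses as JSON would likely be reported as zero pulls or repositories. A sketch, in JavaScript to match the other examples here, of the pure parts separated so they can be tested without the network; `sanitizeInput` follows the Go function of the same name, and `describeStats` is a hypothetical helper:

```javascript
"use strict";

// Mirrors sanitizeInput() in main.go: keep only the first line of input.
// Unlike Go's strings.Trim(s, " "), trim() also strips tabs and a stray "\r".
function sanitizeInput(input) {
    return input.split("\n")[0].trim();
}

// Formats the reply from an already-parsed Docker Hub JSON body. As in main(),
// a request containing "/" is treated as a repository, anything else as a
// user or organisation. Missing fields fall back to 0, like Go's zero values.
function describeStats(request, body) {
    if (request.includes("/")) {
        const pulls = body.pull_count || 0;
        return "Repo: " + request + " has been pulled " + pulls +
            " times from the Docker Hub";
    }
    const repos = body.count || 0;
    return "The organisation or user " + request + " has " + repos +
        " repositories on the Docker hub.";
}
```

The one deliberate difference from the Go version is the wider trim, which keeps CRLF input such as `"alexellis\r\n"` from leaking a carriage return into the request URL.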

@ -1,16 +0,0 @@

FROM functions/alpine:latest

USER root

RUN apk --update add nodejs npm

COPY package.json .
COPY handler.js .
COPY sample.json .

RUN npm i

USER 1000

ENV fprocess="node handler.js"
CMD ["fwatchdog"]

@ -1,26 +0,0 @@

"use strict"
let fs = require('fs');
let sample = require("./sample.json");
let getStdin = require("get-stdin");

getStdin().then(content => {
    let request = JSON.parse(content);
    handle(request, request.request.intent);
});

function tellWithCard(speechOutput) {
    sample.response.outputSpeech.text = speechOutput
    sample.response.card.content = speechOutput
    sample.response.card.title = "Hostname";
    console.log(JSON.stringify(sample));
    process.exit(0);
}

function handle(request, intent) {
    fs.readFile("/etc/hostname", "utf8", (err, data) => {
        if(err) {
            return console.log(err);
        }
        tellWithCard("Your hostname is " + data);
    });
};
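`/etc/hostname` normally ends with a newline, so `handle()` above passes a trailing `\n` into the spoken text, and its error path only logs the error instead of producing a response. A sketch, assuming Node's built-in `fs` and `os` modules, that trims the value and falls back to `os.hostname()`; `readHostname` and `hostnameSpeech` are hypothetical names:

```javascript
"use strict";

const fs = require("fs");
const os = require("os");

// Reads the hostname from /etc/hostname (as handler.js does), trimming the
// trailing newline; falls back to os.hostname() if the file cannot be read.
function readHostname(path = "/etc/hostname") {
    try {
        return fs.readFileSync(path, "utf8").trim();
    } catch (err) {
        return os.hostname();
    }
}

// Builds the spoken text without stray surrounding whitespace.
function hostnameSpeech(hostname) {
    return "Your hostname is " + hostname.trim();
}
```

The fallback means the skill always gets a hostname to speak, at the cost of hiding a missing or unreadable file.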

@ -1 +0,0 @@

docker build -t hostnameintent . ; docker service rm HostnameIntent ; docker service create --network=functions --name HostnameIntent hostnameintent

@ -1,15 +0,0 @@

{
  "name": "HostnameIntent",
  "version": "1.0.0",
  "description": "",
  "main": "handler.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "get-stdin": "^5.0.1"
  }
}

@ -1,16 +0,0 @@

{
  "version": "1.0",
  "response": {
    "outputSpeech": {
      "type": "PlainText",
      "text": "There's currently 6 people in space"
    },
    "card": {
      "content": "There's currently 6 people in space",
      "title": "People in space",
      "type": "Simple"
    },
    "shouldEndSession": true
  },
  "sessionAttributes": {}
}

2

sample-functions/MarkdownRender/.gitignore

vendored

@ -1,2 +0,0 @@

app
MarkdownRender

@ -1,27 +0,0 @@

FROM ghcr.io/openfaas/classic-watchdog:0.2.0 as watchdog

FROM golang:1.13-alpine as builder
ENV CGO_ENABLED=0

MAINTAINER alex@openfaas.com
ENTRYPOINT []

WORKDIR /go/src/github.com/openfaas/faas/sample-functions/MarkdownRender

COPY handler.go .
COPY vendor vendor

RUN go install

FROM alpine:3.16.0 as ship

COPY --from=watchdog /fwatchdog /usr/bin/fwatchdog
RUN chmod +x /usr/bin/fwatchdog

COPY --from=builder /go/bin/MarkdownRender /usr/bin/MarkdownRender
ENV fprocess "/usr/bin/MarkdownRender"

RUN addgroup -g 1000 -S app && adduser -u 1000 -S app -G app
USER 1000

CMD ["/usr/bin/fwatchdog"]

33

sample-functions/MarkdownRender/Gopkg.lock

generated

@ -1,33 +0,0 @@

# This file is autogenerated, do not edit; changes may be undone by the next 'dep ensure'.


[[projects]]
  branch = "master"
  name = "github.com/microcosm-cc/bluemonday"
  packages = ["."]
  revision = "68fecaef60268522d2ac3f0123cec9d3bcab7b6e"

[[projects]]
  name = "github.com/russross/blackfriday"
  packages = ["."]
  revision = "cadec560ec52d93835bf2f15bd794700d3a2473b"
  version = "v2.0.0"

[[projects]]
  branch = "master"
  name = "github.com/shurcooL/sanitized_anchor_name"
  packages = ["."]
  revision = "86672fcb3f950f35f2e675df2240550f2a50762f"

[[projects]]
  branch = "master"
  name = "golang.org/x/net"
  packages = ["html","html/atom"]
  revision = "a337091b0525af65de94df2eb7e98bd9962dcbe2"

[solve-meta]
  analyzer-name = "dep"
  analyzer-version = 1
  inputs-digest = "501ddd966c3f040b4158a459f36eeda2818e57897613b965188a4f4b15579034"
  solver-name = "gps-cdcl"
  solver-version = 1

@ -1,30 +0,0 @@

# Gopkg.toml example
#
# Refer to https://github.com/golang/dep/blob/master/docs/Gopkg.toml.md
# for detailed Gopkg.toml documentation.
#
# required = ["github.com/user/thing/cmd/thing"]
# ignored = ["github.com/user/project/pkgX", "bitbucket.org/user/project/pkgA/pkgY"]
#
# [[constraint]]
#   name = "github.com/user/project"
#   version = "1.0.0"
#
# [[constraint]]
#   name = "github.com/user/project2"
#   branch = "dev"
#   source = "github.com/myfork/project2"
#
# [[override]]
#  name = "github.com/x/y"
#  version = "2.4.0"


[[constraint]]
  branch = "master"
  name = "github.com/microcosm-cc/bluemonday"

[[constraint]]
  name = "github.com/russross/blackfriday"
  version = "2.0.0"

@ -1,17 +0,0 @@

package main

import (
	"fmt"
	"io/ioutil"
	"os"

	"github.com/microcosm-cc/bluemonday"
	"github.com/russross/blackfriday"
)

func main() {
	input, _ := ioutil.ReadAll(os.Stdin)
	unsafe := blackfriday.Run([]byte(input), blackfriday.WithNoExtensions())
	html := bluemonday.UGCPolicy().SanitizeBytes(unsafe)
	fmt.Println(string(html))
}

@ -1 +0,0 @@

repo_token: x2wlA1x0X8CK45ybWpZRCVRB4g7vtkhaw

20

sample-functions/MarkdownRender/vendor/github.com/microcosm-cc/bluemonday/.travis.yml

generated

vendored

@ -1,20 +0,0 @@

language: go
go:
  - 1.1
  - 1.2
  - 1.3
  - 1.4
  - 1.5
  - 1.6
  - 1.7
  - 1.8
  - 1.9
  - tip
matrix:
  allow_failures:
    - go: tip
  fast_finish: true
install:
  - go get golang.org/x/net/html
script:
  - go test -v ./...

@ -1,51 +0,0 @@

# Contributing to bluemonday

Third-party patches are essential for keeping bluemonday secure and offering the features developers want. However, there are a few guidelines that we need contributors to follow so that we can maintain the quality of work that developers who use bluemonday expect.

## Getting Started

* Make sure you have a [Github account](https://github.com/signup/free)

## Guidelines

1. Do not vendor dependencies. As a security package, were we to vendor dependencies, the projects that then vendor bluemonday may not receive the latest security updates to the dependencies. By not vendoring dependencies, the project that implements bluemonday will vendor the latest version of any dependent packages. Vendoring is a project problem, not a package problem. bluemonday will be tested against the latest version of dependencies periodically and during any PR/merge.

## Submitting an Issue

* Submit a ticket for your issue, assuming one does not already exist
* Clearly describe the issue including the steps to reproduce (with sample input and output) if it is a bug

If you are reporting a security flaw, you may expect that we will provide the code to fix it for you. Otherwise you may want to submit a pull request to ensure the resolution is applied sooner rather than later:

* Fork the repository on Github
* Issue a pull request containing code to resolve the issue

## Submitting a Pull Request

* Submit a ticket for your issue, assuming one does not already exist
* Describe the reason for the pull request and if applicable show some example inputs and outputs to demonstrate what the patch does
* Fork the repository on Github
* Before submitting the pull request you should
  1. Include tests for your patch; one test should encapsulate the entire patch and should refer to the Github issue
  1. If you have added new exposed/public functionality, you should ensure it is documented appropriately
  1. If you have added new exposed/public functionality, you should consider demonstrating how to use it within one of the helpers or shipped policies if appropriate or within a test if modifying a helper or policy is not appropriate
  1. Run all of the tests `go test -v ./...` or `make test` and ensure all tests pass
  1. Run gofmt `gofmt -w ./$*` or `make fmt`
  1. Run vet `go tool vet *.go` or `make vet` and resolve any issues
  1. Install golint using `go get -u github.com/golang/lint/golint` and run `golint *.go` or `make lint` and resolve every warning
* When submitting the pull request you should
  1. Note the issue(s) it resolves, e.g. `Closes #6` in the pull request comment to close issue #6 when the pull request is accepted

Once you have submitted a pull request, we *may* merge it without changes. If we have any comments or feedback, or need you to make changes to your pull request, we will update the Github pull request or the associated issue. We expect responses from you within two weeks, and we may close the pull request if there is no activity.

### Contributor Licence Agreement

We haven't gone for the formal "Sign a Contributor Licence Agreement" thing that projects like [puppet](https://cla.puppetlabs.com/), [Mojito](https://developer.yahoo.com/cocktails/mojito/cla/) and companies like [Google](http://code.google.com/legal/individual-cla-v1.0.html) are using.

But we do need to know that we can accept and merge your contributions, so for now the act of contributing a pull request should be considered equivalent to agreeing to a contributor licence agreement, specifically:

You accept that the act of submitting code to the bluemonday project is to grant a copyright licence to the project that is perpetual, worldwide, non-exclusive, no-charge, royalty free and irrevocable.

You accept that all who comply with the licence of the project (BSD 3-clause) are permitted to use your contributions to the project.

You accept, and by submitting code do declare, that you have the legal right to grant such a licence to the project and that each of the contributions is your own original creation.

6

sample-functions/MarkdownRender/vendor/github.com/microcosm-cc/bluemonday/CREDITS.md

generated

vendored

@ -1,6 +0,0 @@

1. John Graham-Cumming http://jgc.org/
1. Mike Samuel mikesamuel@gmail.com
1. Dmitri Shuralyov shurcooL@gmail.com
1. https://github.com/opennota
1. https://github.com/Gufran

28

sample-functions/MarkdownRender/vendor/github.com/microcosm-cc/bluemonday/LICENSE.md

generated

vendored

28