mirror of

https://github.com/openfaas/faasd.git

synced 2025-06-08 16:06:47 +00:00

Compare commits

203 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 9259344452 | | |
| | 83e804513a | | |
| | f409e01aa6 | | |
| | e98c949019 | | |
| | 6f0ac37e3c | | |
| | 0defc22a3f | | |
| | c14d92c5c2 | | |
| | 934d326d82 | | |
| | 0ea1bd5ffc | | |
| | 7f798267b0 | | |
| | a2254ca1ff | | |
| | 087a299f4c | | |
| | 6dcdab832d | | |
| | 57163e27cf | | |
| | cced8eb3df | | |
| | 0d4bfa938c | | |
| | 120a34cfef | | |
| | ae4d0a97f8 | | |
| | ef68994a5c | | |
| | 43e51c51bb | | |
| | 695d80076c | | |
| | 51f3f87ba1 | | |
| | e2758be25e | | |
| | 7ad5c17e7c | | |
| | 983dacb15c | | |
| | 96abaaf102 | | |
| | d7e0bebe25 | | |
| | ef689d7b62 | | |
| | 854ec5836d | | |
| | 4ab5f60b9d | | |
| | a8b61f2086 | | |
| | 68335e2016 | | |
| | 404af1c4d9 | | |
| | 055e57ec5f | | |
| | 6bb1222ebb | | |
| | 599ae5415f | | |
| | 0d74cac072 | | |
| | c2b802cbf9 | | |
| | bd0e1d7718 | | |
| | bfc87ff432 | | |
| | 032716e3e9 | | |
| | c813b0810b | | |
| | fb36d2e5aa | | |
| | 038eb91191 | | |
| | 5576382d96 | | |
| | 7ca2621c98 | | |
| | a154fd1bc0 | | |
| | 6b1e49a2a5 | | |
| | 5344a32472 | | |
| | e59e3f0cb6 | | |
| | 2adc1350d4 | | |
| | 5b633cc017 | | |
| | 1c1bfa6759 | | |
| | 93f41ca35d | | |
| | 0172c996b8 | | |
| | 4162db43ff | | |
| | e4848cd829 | | |
| | 7dbaeef3d8 | | |
| | 887c18befa | | |
| | f6167e72a9 | | |
| | c12505a63f | | |
| | ffac4063b6 | | |
| | dd31784824 | | |
| | 70b0929cf2 | | |
| | c61efe06f4 | | |
| | b05844acea | | |
| | fd20e69ee1 | | |
| | 4a3fa684e2 | | |
| | f17a25f3e8 | | |
| | 7ef56d8dae | | |
| | 1cb5493f72 | | |
| | d85332be13 | | |
| | 1412faffd2 | | |
| | 2685c1db06 | | |
| | 078043b168 | | |
| | 5356fca4c5 | | |
| | 99ccd75b62 | | |
| | aab5363e65 | | |
| | ba601bfc67 | | |
| | 53670e2854 | | |
| | 57baf34f5a | | |
| | c83b649301 | | |
| | f394b4a2f1 | | |
| | d0219d6697 | | |
| | 7d073bd64b | | |
| | ebec399817 | | |
| | 553054ddf5 | | |
| | 86d2797873 | | |
| | 0d3dc50f61 | | |
| | b1ea842fb1 | | |
| | e1d90bba60 | | |
| | 6c8214b27e | | |
| | 990f1a4df6 | | |
| | c41c2cd9fc | | |
| | 9efd019e86 | | |
| | d09ab85bda | | |
| | c5c0fa05a2 | | |
| | aaf1811052 | | |
| | 4d6b6dfdc5 | | |
| | b844a72067 | | |
| | 8227285faa | | |
| | 87773fd167 | | |
| | ecf82ec37b | | |
| | 9e8c680f3f | | |
| | 85c1082fac | | |
| | 282b05802c | | |
| | 7c118225b2 | | |
| | 95792f8d58 | | |
| | 60b724f014 | | |
| | e0db59d8a1 | | |
| | 13304fa0b2 | | |
| | a65b989b15 | | |
| | 6b6ff71c29 | | |
| | bb5b212663 | | |
| | 9564e64980 | | |
| | 6dbc33d045 | | |
| | 5cedf28929 | | |
| | b7be42e5ec | | |
| | 2b0cbeb25d | | |
| | d29f94a8d4 | | |
| | c5b463bee9 | | |
| | c0c4f2d068 | | |
| | 886f5ba295 | | |
| | 309310140c | | |
| | a88997e42c | | |
| | 02e9b9961b | | |
| | fee46de596 | | |
| | 6d297a96d6 | | |
| | 2178d90a10 | | |
| | 37c63a84a5 | | |
| | b43d2562a9 | | |
| | e668beef13 | | |
| | a574a0c06f | | |
| | 4061b52b2a | | |
| | bc88d6170c | | |
| | fe057fbcf8 | | |
| | b44f57ce4d | | |
| | 4ecc215a70 | | |
| | a995413971 | | |
| | 912ac265f4 | | |
| | 449bcf2691 | | |
| | 1822114705 | | |
| | 52f64dfaa2 | | |
| | 7bd84766f3 | | |
| | e09f37e5cb | | |
| | 4b132315c7 | | |
| | ab4708246d | | |
| | b807ff0725 | | |
| | f74f5e6a4f | | |
| | 95c41ea758 | | |
| | 8ac45f5379 | | |
| | 3579061423 | | |
| | 761d1847bf | | |
| | 8003748b73 | | |
| | a2ea804d2c | | |
| | 551e6645b7 | | |
| | 77867f17e3 | | |
| | 5aed707354 | | |
| | 8fbdd1a461 | | |
| | 8dd48b8957 | | |
| | 6763ed6d66 | | |
| | acb5d0bd1c | | |
| | 2c9eb3904e | | |
| | b42066d1a1 | | |
| | 17188b8de9 | | |
| | 0c0088e8b0 | | |
| | c5f167df21 | | |
| | d5fcc7b2ab | | |
| | cbfefb6fa5 | | |
| | ea62c1b12d | | |
| | 8f40618a5c | | |
| | 3fe0d8d8d3 | | |
| | 5aa4c69e03 | | |
| | 12b5e8ca7f | | |
| | 195e81f595 | | |
| | 06fbca83bf | | |
| | e71d2c27c5 | | |
| | 13f4a487ce | | |
| | 13412841aa | | |
| | e76d0d34ba | | |
| | dec02f3240 | | |
| | 73c7349e36 | | |
| | b8ada0d46b | | |
| | 5ac51663da | | |
| | 1e9d8fffa0 | | |
| | 57322c4947 | | |
| | 6b840f0226 | | |
| | 12ada59bf1 | | |
| | 2ae8b31ac0 | | |
| | 4c9c66812a | | |
| | 9da2d92613 | | |
| | 5e29516f86 | | |
| | 9f1b5e2f7b | | |
| | efcae9888c | | |
| | 2885bb0c51 | | |
| | a4e092b217 | | |
| | dca036ee51 | | |
| | 583f5ad1b0 | | |
| | 659f98cc0d | | |
| | c7d9353991 | | |
| | 29bb5ad9cc | | |
| | 6262ff2f4a | | |
| | 1d86c62792 | | |

34

.github/ISSUE_TEMPLATE.md

vendored


@ -1,19 +1,37 @@

|

||||

<!--- Provide a general summary of the issue in the Title above -->

|

||||

## Due diligence

|

||||

|

||||

<!-- Due dilligence -->

|

||||

## My actions before raising this issue

|

||||

Before you ask for help or support, make sure that you've [consulted the manual for faasd](https://openfaas.gumroad.com/l/serverless-for-everyone-else). We can't answer questions that are already covered by the manual.

|

||||

|

||||

|

||||

<!-- How is this affecting you? What task are you trying to accomplish? -->

|

||||

## Why do you need this?

|

||||

|

||||

<!-- Attempts to mask or hide this may result in the issue being closed -->

|

||||

## Who is this for?

|

||||

|

||||

What company is this for? Are you listed in the [ADOPTERS.md](https://github.com/openfaas/faas/blob/master/ADOPTERS.md) file?

|

||||

|

||||

<!--- Provide a general summary of the issue in the Title above -->

|

||||

## Expected Behaviour

|

||||

<!--- If you're describing a bug, tell us what should happen -->

|

||||

<!--- If you're suggesting a change/improvement, tell us how it should work -->

|

||||

|

||||

|

||||

## Current Behaviour

|

||||

<!--- If describing a bug, tell us what happens instead of the expected behavior -->

|

||||

<!--- If suggesting a change/improvement, explain the difference from current behavior -->

|

||||

|

||||

## List all Possible Solutions

|

||||

<!--- Not obligatory, but suggest a fix/reason for the bug, -->

|

||||

<!--- or ideas how to implement the addition or change -->

|

||||

|

||||

## List the one solution that you would recommend

|

||||

<!--- If you were to be on the hook for this change. -->

|

||||

## List All Possible Solutions and Workarounds

|

||||

<!--- Suggest a fix/reason for the bug, or ideas how to implement -->

|

||||

<!--- the addition or change -->

|

||||

<!--- Is there a workaround which could avoid making changes? -->

|

||||

|

||||

## Which Solution Do You Recommend?

|

||||

<!--- Pick your preferred solution, if you were to implement and maintain this change -->

|

||||

|

||||

|

||||

## Steps to Reproduce (for bugs)

|

||||

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

|

||||

@ -23,10 +41,6 @@

|

||||

3.

|

||||

4.

|

||||

|

||||

## Context

|

||||

<!--- How has this issue affected you? What are you trying to accomplish? -->

|

||||

<!--- Providing context helps us come up with a solution that is most useful in the real world -->

|

||||

|

||||

## Your Environment

|

||||

|

||||

* OS and architecture:

|

||||

|

||||

1

.github/ISSUE_TEMPLATE/config.yml

vendored

Normal file


@ -0,0 +1 @@

|

||||

blank_issues_enabled: false

|

||||

69

.github/ISSUE_TEMPLATE/issue.md

vendored

Normal file


@ -0,0 +1,69 @@

|

||||

---

|

||||

name: Report an issue

|

||||

about: Create a report to help us improve

|

||||

title: ''

|

||||

labels: ''

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

|

||||

## Due diligence

|

||||

|

||||

<!-- Due dilligence -->

|

||||

## My actions before raising this issue

|

||||

Before you ask for help or support, make sure that you've [consulted the manual for faasd](https://openfaas.gumroad.com/l/serverless-for-everyone-else). We can't answer questions that are already covered by the manual.

|

||||

|

||||

|

||||

<!-- How is this affecting you? What task are you trying to accomplish? -->

|

||||

## Why do you need this?

|

||||

|

||||

<!-- Attempts to mask or hide this may result in the issue being closed -->

|

||||

## Who is this for?

|

||||

|

||||

What company is this for? Are you listed in the [ADOPTERS.md](https://github.com/openfaas/faas/blob/master/ADOPTERS.md) file?

|

||||

|

||||

<!--- Provide a general summary of the issue in the Title above -->

|

||||

## Expected Behaviour

|

||||

<!--- If you're describing a bug, tell us what should happen -->

|

||||

<!--- If you're suggesting a change/improvement, tell us how it should work -->

|

||||

|

||||

|

||||

## Current Behaviour

|

||||

<!--- If describing a bug, tell us what happens instead of the expected behavior -->

|

||||

<!--- If suggesting a change/improvement, explain the difference from current behavior -->

|

||||

|

||||

|

||||

## List All Possible Solutions and Workarounds

|

||||

<!--- Suggest a fix/reason for the bug, or ideas how to implement -->

|

||||

<!--- the addition or change -->

|

||||

<!--- Is there a workaround which could avoid making changes? -->

|

||||

|

||||

## Which Solution Do You Recommend?

|

||||

<!--- Pick your preferred solution, if you were to implement and maintain this change -->

|

||||

|

||||

|

||||

## Steps to Reproduce (for bugs)

|

||||

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

|

||||

<!--- reproduce this bug. Include code to reproduce, if relevant -->

|

||||

1.

|

||||

2.

|

||||

3.

|

||||

4.

|

||||

|

||||

## Your Environment

|

||||

|

||||

* OS and architecture:

|

||||

|

||||

* Versions:

|

||||

|

||||

```sh

|

||||

go version

|

||||

|

||||

containerd -version

|

||||

|

||||

uname -a

|

||||

|

||||

cat /etc/os-release

|

||||

|

||||

faasd version

|

||||

```

|

||||

10

.github/workflows/build.yaml

vendored


@ -10,19 +10,15 @@ jobs:

|

||||

build:

|

||||

env:

|

||||

GO111MODULE: off

|

||||

strategy:

|

||||

matrix:

|

||||

go-version: [1.15.x]

|

||||

os: [ubuntu-latest]

|

||||

runs-on: ${{ matrix.os }}

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@master

|

||||

with:

|

||||

fetch-depth: 1

|

||||

- name: Install Go

|

||||

uses: actions/setup-go@v2

|

||||

uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: ${{ matrix.go-version }}

|

||||

go-version: 1.22.x

|

||||

|

||||

- name: test

|

||||

run: make test

|

||||

|

||||

12

.github/workflows/publish.yaml

vendored


@ -7,23 +7,19 @@ on:

|

||||

|

||||

jobs:

|

||||

publish:

|

||||

strategy:

|

||||

matrix:

|

||||

go-version: [ 1.15.x ]

|

||||

os: [ ubuntu-latest ]

|

||||

runs-on: ${{ matrix.os }}

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@master

|

||||

with:

|

||||

fetch-depth: 1

|

||||

- name: Install Go

|

||||

uses: actions/setup-go@v2

|

||||

uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: ${{ matrix.go-version }}

|

||||

go-version: 1.22.x

|

||||

- name: Make publish

|

||||

run: make publish

|

||||

- name: Upload release binaries

|

||||

uses: alexellis/upload-assets@0.2.2

|

||||

uses: alexellis/upload-assets@0.4.1

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ github.token }}

|

||||

with:

|

||||

|

||||

18

.github/workflows/verify-images.yaml

vendored

Normal file


@ -0,0 +1,18 @@

|

||||

name: Verify Docker Compose Images

|

||||

|

||||

on:

|

||||

push:

|

||||

paths:

|

||||

- '**.yaml'

|

||||

|

||||

jobs:

|

||||

verifyImages:

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@master

|

||||

- uses: alexellis/setup-arkade@v3

|

||||

- name: Verify chart images

|

||||

id: verify_images

|

||||

run: |

|

||||

VERBOSE=true make verify-compose

|

||||

22

EULA.md

Normal file


@ -0,0 +1,22 @@

|

||||

1.1 EULA Addendum for faasd Community Edition (CE). This EULA Addendum for faasd is part of the [OpenFaaS Community Edition (CE) EULA](https://github.com/openfaas/faas/blob/master/EULA.md).

|

||||

|

||||

1.2 Agreement Parties. This Agreement is between OpenFaaS Ltd (the "Licensor") and you (the "Licensee").

|

||||

|

||||

1.3 Governing Law. This Agreement shall be governed by, and construed in accordance with, the laws of England and Wales.

|

||||

|

||||

1.4 OpenFaaS Edge (faasd-pro). OpenFaaS Edge (faasd-pro) is a separate commercial product that is fully licensed under the OpenFaaS Pro EULA.

|

||||

|

||||

2.1 Grant of License for faasd CE. faasd CE may be installed once per year for a single 60-day trial period in a commercial setting for the sole purpose of evaluation and testing. This trial does not include any support or warranty, and it terminates automatically at the end of the 60-day period unless a separate commercial license is obtained.

|

||||

|

||||

2.2 Personal (Non-Commercial) Use. faasd CE may be used by an individual for personal, non-commercial projects, provided that the user is not acting on behalf of any company, corporation, or other legal entity.

|

||||

|

||||

2.3 Restrictions.

|

||||

(a) No redistribution, resale, or sublicensing of faasd CE is permitted.

|

||||

(b) The License granted under this Addendum is non-transferable.

|

||||

(c) Any continued commercial usage beyond the 60-day trial requires a separate commercial license from the Licensor.

|

||||

|

||||

2.4 Warranty Disclaimer. faasd CE is provided "as is," without warranty of any kind. No support or guarantee is included during the 60-day trial or for personal use.

|

||||

|

||||

2.5 Termination. If the terms of this Addendum are violated, the License granted hereunder terminates immediately. The Licensee must discontinue all use of faasd CE and destroy any copies in their possession.

|

||||

|

||||

2.6 Contact Information. For additional rights, commercial licenses, or support inquiries, please contact the Licensor at contact@openfaas.com.

|

||||

6

LICENSE


@ -1,8 +1,10 @@

|

||||

License for faasd contributions from OpenFaaS Ltd - 2017, 2029-2024, see: EULA.md

|

||||

|

||||

Only third-party contributions to source code are licensed MIT:

|

||||

|

||||

MIT License

|

||||

|

||||

Copyright (c) 2020 Alex Ellis

|

||||

Copyright (c) 2020 OpenFaaS Ltd

|

||||

Copyright (c) 2020 OpenFaas Author(s)

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

|

||||

27

Makefile


@ -1,8 +1,8 @@

|

||||

Version := $(shell git describe --tags --dirty)

|

||||

GitCommit := $(shell git rev-parse HEAD)

|

||||

LDFLAGS := "-s -w -X main.Version=$(Version) -X main.GitCommit=$(GitCommit)"

|

||||

CONTAINERD_VER := 1.3.4

|

||||

CNI_VERSION := v0.8.6

|

||||

LDFLAGS := "-s -w -X github.com/openfaas/faasd/pkg.Version=$(Version) -X github.com/openfaas/faasd/pkg.GitCommit=$(GitCommit)"

|

||||

CONTAINERD_VER := 1.7.27

|

||||

CNI_VERSION := v0.9.1

|

||||

ARCH := amd64

|

||||

|

||||

export GO111MODULE=on
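
Note on the `LDFLAGS` change in this hunk: the linker's `-X importpath.name=value` flag only sets package-level string variables, and the import path has to match the package that declares them. Moving the targets from `main.Version` to `github.com/openfaas/faasd/pkg.Version` therefore implies that the variables now live in the `pkg` package. The following is a minimal sketch of the receiving side, not faasd's actual source; it keeps the variables in `main` so that it runs as a single file, and `versionString` is a hypothetical helper.

```go
package main

import "fmt"

// Version and GitCommit are plain string variables with constant defaults.
// The linker can overwrite them at build time, for example:
//
//	go build -ldflags "-X main.Version=v1.0.0 -X main.GitCommit=abc1234"
//
// If the variables were declared in another package, the -X path would have
// to be that package's full import path instead of "main".
var (
	Version   = "dev"
	GitCommit = "unknown"
)

// versionString is a hypothetical helper that formats the two values.
func versionString(version, commit string) string {
	return fmt.Sprintf("version: %s, commit: %s", version, commit)
}

func main() {
	fmt.Println(versionString(Version, GitCommit))
}
```

Built without `-ldflags`, the program reports the defaults, which makes a missing or mistyped `-X` path easy to spot.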

|

||||

@ -20,11 +20,14 @@ local:

|

||||

test:

|

||||

CGO_ENABLED=0 GOOS=linux go test -mod=vendor -ldflags $(LDFLAGS) ./...

|

||||

|

||||

.PHONY: dist-local

|

||||

dist-local:

|

||||

CGO_ENABLED=0 GOOS=linux go build -mod=vendor -ldflags $(LDFLAGS) -o bin/faasd

|

||||

|

||||

.PHONY: dist

|

||||

dist:

|

||||

CGO_ENABLED=0 GOOS=linux go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm GOARM=7 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-armhf

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-arm64

|

||||

CGO_ENABLED=0 GOOS=linux go build -mod=vendor -ldflags $(LDFLAGS) -o bin/faasd

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=vendor -ldflags $(LDFLAGS) -o bin/faasd-arm64

|

||||

|

||||

.PHONY: hashgen

|

||||

hashgen:

|

||||

@ -32,8 +35,8 @@ hashgen:

|

||||

|

||||

.PHONY: prepare-test

|

||||

prepare-test:

|

||||

curl -sLSf https://github.com/containerd/containerd/releases/download/v$(CONTAINERD_VER)/containerd-$(CONTAINERD_VER).linux-amd64.tar.gz > /tmp/containerd.tar.gz && sudo tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1

|

||||

curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.2/containerd.service | sudo tee /etc/systemd/system/containerd.service

|

||||

curl -sLSf https://github.com/containerd/containerd/releases/download/v$(CONTAINERD_VER)/containerd-$(CONTAINERD_VER)-linux-amd64.tar.gz > /tmp/containerd.tar.gz && sudo tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1

|

||||

curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.7.0/containerd.service | sudo tee /etc/systemd/system/containerd.service

|

||||

sudo systemctl daemon-reload && sudo systemctl start containerd

|

||||

sudo /sbin/sysctl -w net.ipv4.conf.all.forwarding=1

|

||||

sudo mkdir -p /opt/cni/bin

|

||||

@ -66,3 +69,11 @@ test-e2e:

|

||||

|

||||

# Removed due to timing issue in CI on GitHub Actions

|

||||

# /usr/local/bin/faas-cli logs figlet --since 15m --follow=false | grep Forking

|

||||

|

||||

verify-compose:

|

||||

@echo Verifying docker-compose.yaml images in remote registries && \

|

||||

arkade chart verify --verbose=$(VERBOSE) -f ./docker-compose.yaml

|

||||

|

||||

upgrade-compose:

|

||||

@echo Checking for newer images in remote registries && \

|

||||

arkade chart upgrade -f ./docker-compose.yaml --write

|

||||

|

||||

151

README.md


@ -1,18 +1,38 @@

|

||||

# faasd - a lightweight & portable faas engine

|

||||

|

||||

[](https://github.com/openfaas/faasd/actions)

|

||||

[](https://opensource.org/licenses/MIT)

|

||||

[](https://www.openfaas.com)

|

||||

|

||||

# faasd - a lightweight and portable version of OpenFaaS

|

||||

|

||||

faasd is [OpenFaaS](https://github.com/openfaas/) reimagined, but without the cost and complexity of Kubernetes. It runs on a single host with very modest requirements, making it fast and easy to manage. Under the hood it uses [containerd](https://containerd.io/) and [Container Networking Interface (CNI)](https://github.com/containernetworking/cni) along with the same core OpenFaaS components from the main project.

|

||||

|

||||

|

||||

|

||||

## Use-cases and tutorials

|

||||

## Features & Benefits

|

||||

|

||||

faasd is just another way to run OpenFaaS, so many things you read in the docs or in blog posts will work the same way.

|

||||

- **Lightweight** - faasd is a single Go binary, which runs as a systemd service making it easy to manage

|

||||

- **Portable** - it runs on any Linux host with containerd and CNI, on as little as 2x vCPU and 2GB RAM - x86_64 and Arm64 supported

|

||||

- **Easy to manage** - unlike Kubernetes, its API is stable and requires little maintenance

|

||||

- **Low cost** - it's licensed per installation, so you can invoke your functions as much as you need, without additional cost

|

||||

- **Stateful containers** - faasd supports stateful containers with persistent volumes such as PostgreSQL, Grafana, Prometheus, etc

|

||||

- **Built on OpenFaaS** - uses the same containers that power OpenFaaS on Kubernetes for the Gateway, Queue-Worker, Event Connectors, Dashboards, Scale To Zero, etc

|

||||

- **Ideal for internal business use** - use it to build internal tools, automate tasks, and integrate with existing systems

|

||||

- **Deploy it for a customer** - package your functions along with OpenFaaS Edge into a VM image, and deploy it to your customers to run in their own datacenters

|

||||

|

||||

faasd does not create the same maintenance burden you'll find with installing, upgrading, and securing a Kubernetes cluster. You can deploy it and walk away, in the worst case, just deploy a new VM and deploy your functions again.

|

||||

|

||||

You can learn more about supported OpenFaaS features in the [ROADMAP.md](/docs/ROADMAP.md)

|

||||

|

||||

## Getting Started

|

||||

|

||||

There are two versions of faasd:

|

||||

|

||||

* faasd CE - for non-commercial, personal use only licensed under [the faasd CE EULA](/EULA.md)

|

||||

* OpenFaaS Edge (faasd-pro) - fully licensed for commercial use

|

||||

|

||||

You can install either edition using the instructions in the [OpenFaaS docs](https://docs.openfaas.com/deployment/edge/).

|

||||

|

||||

You can request a license for [OpenFaaS Edge using this form](https://forms.gle/g6oKLTG29mDTSk5k9)

|

||||

|

||||

## Further resources

|

||||

|

||||

There are many blog posts and documentation pages about OpenFaaS on Kubernetes, which also apply to faasd.

|

||||

|

||||

Videos and overviews:

|

||||

|

||||

@ -30,64 +50,33 @@ Use-cases and tutorials:

|

||||

|

||||

Additional resources:

|

||||

|

||||

* The official handbook - [Serverless For Everyone Else](https://gumroad.com/l/serverless-for-everyone-else)

|

||||

* The official handbook - [Serverless For Everyone Else](https://openfaas.gumroad.com/l/serverless-for-everyone-else)

|

||||

* For reference: [OpenFaaS docs](https://docs.openfaas.com)

|

||||

* For use-cases and tutorials: [OpenFaaS blog](https://openfaas.com/blog/)

|

||||

* For self-paced learning: [OpenFaaS workshop](https://github.com/openfaas/workshop/)

|

||||

|

||||

### About faasd

|

||||

### Deployment tutorials

|

||||

|

||||

* faasd is a static Golang binary

|

||||

* uses the same core components and ecosystem of OpenFaaS

|

||||

* uses containerd for its runtime and CNI for networking

|

||||

* is multi-arch, so works on Intel `x86_64` and ARM out the box

|

||||

* can run almost any other stateful container through its `docker-compose.yaml` file

|

||||

* [Use multipass on Windows, MacOS or Linux](/docs/MULTIPASS.md)

|

||||

* [Deploy to DigitalOcean with Terraform and TLS](https://www.openfaas.com/blog/faasd-tls-terraform/)

|

||||

* [Deploy to any IaaS with cloud-init](https://blog.alexellis.io/deploy-serverless-faasd-with-cloud-init/)

|

||||

* [Deploy faasd to your Raspberry Pi](https://blog.alexellis.io/faasd-for-lightweight-serverless/)

|

||||

|

||||

Most importantly, it's easy to manage so you can set it up and leave it alone to run your functions.

|

||||

Terraform scripts:

|

||||

|

||||

|

||||

* [Provision faasd on DigitalOcean with Terraform](docs/bootstrap/README.md)

|

||||

* [Provision faasd with TLS on DigitalOcean with Terraform](docs/bootstrap/digitalocean-terraform/README.md)

|

||||

|

||||

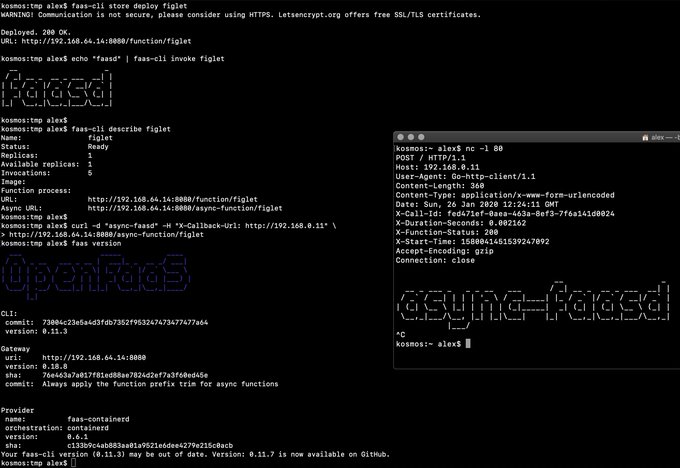

> Demo of faasd running asynchronous functions

|

||||

|

||||

Watch the video: [faasd walk-through with cloud-init and Multipass](https://www.youtube.com/watch?v=WX1tZoSXy8E)

|

||||

### Training / Handbook

|

||||

|

||||

### What does faasd deploy?

|

||||

You can find various resources to learn about faasd for free, however the official handbook is the most comprehensive guide to getting started with faasd and OpenFaaS.

|

||||

|

||||

* faasd - itself, and its [faas-provider](https://github.com/openfaas/faas-provider) for containerd - CRUD for functions and services, implements the OpenFaaS REST API

|

||||

* [Prometheus](https://github.com/prometheus/prometheus) - for monitoring of services, metrics, scaling and dashboards

|

||||

* [OpenFaaS Gateway](https://github.com/openfaas/faas/tree/master/gateway) - the UI portal, CLI, and other OpenFaaS tooling can talk to this.

|

||||

* [OpenFaaS queue-worker for NATS](https://github.com/openfaas/nats-queue-worker) - run your invocations in the background without adding any code. See also: [asynchronous invocations](https://docs.openfaas.com/reference/triggers/#async-nats-streaming)

|

||||

* [NATS](https://nats.io) for asynchronous processing and queues

|

||||

["Serverless For Everyone Else"](https://openfaas.gumroad.com/l/serverless-for-everyone-else) is the official handbook and was written to contribute funds towards the upkeep and maintenance of the project.

|

||||

|

||||

faasd relies on industry-standard tools for running containers:

|

||||

|

||||

* [CNI](https://github.com/containernetworking/plugins)

|

||||

* [containerd](https://github.com/containerd/containerd)

|

||||

* [runc](https://github.com/opencontainers/runc)

|

||||

|

||||

You can use the standard [faas-cli](https://github.com/openfaas/faas-cli) along with pre-packaged functions from *the Function Store*, or build your own using any OpenFaaS template.

|

||||

|

||||

### When should you use faasd over OpenFaaS on Kubernetes?

|

||||

|

||||

* To deploy microservices and functions that you can update and monitor remotely

|

||||

* When you don't have the bandwidth to learn or manage Kubernetes

|

||||

* To deploy embedded apps in IoT and edge use-cases

|

||||

* To distribute applications to a customer or client

|

||||

* You have a cost sensitive project - run faasd on a 1GB VM for 5-10 USD / mo or on your Raspberry Pi

|

||||

* When you just need a few functions or microservices, without the cost of a cluster

|

||||

|

||||

faasd does not create the same maintenance burden you'll find with maintaining, upgrading, and securing a Kubernetes cluster. You can deploy it and walk away, in the worst case, just deploy a new VM and deploy your functions again.

|

||||

|

||||

## Learning faasd

|

||||

|

||||

The faasd project is MIT licensed and open source, and you will find some documentation, blog posts and videos for free.

|

||||

|

||||

However, "Serverless For Everyone Else" is the official handbook and was written to contribute funds towards the upkeep and maintenance of the project.

|

||||

|

||||

### The official handbook and docs for faasd

|

||||

|

||||

<a href="https://gumroad.com/l/serverless-for-everyone-else">

|

||||

<img src="https://static-2.gumroad.com/res/gumroad/2028406193591/asset_previews/741f2ad46ff0a08e16aaf48d21810ba7/retina/social4.png" width="40%"></a>

|

||||

<a href="https://openfaas.gumroad.com/l/serverless-for-everyone-else">

|

||||

<img src="https://www.alexellis.io/serverless.png" width="40%"></a>

|

||||

|

||||

You'll learn how to deploy code in any language, lift and shift Dockerfiles, run requests in queues, write background jobs and to integrate with databases. faasd packages the same code as OpenFaaS, so you get built-in metrics for your HTTP endpoints, a user-friendly CLI, pre-packaged functions and templates from the store and a UI.

|

||||

|

||||

@ -113,58 +102,4 @@ Topics include:

|

||||

|

||||

View sample pages, reviews and testimonials on Gumroad:

|

||||

|

||||

["Serverless For Everyone Else"](https://gumroad.com/l/serverless-for-everyone-else)

|

||||

|

||||

### Deploy faasd

|

||||

|

||||

The easiest way to deploy faasd is with cloud-init, we give several examples below, and post IaaS platforms will accept "user-data" pasted into their UI, or via their API.

|

||||

|

||||

For trying it out on MacOS or Windows, we recommend using [multipass](https://multipass.run) to run faasd in a VM.

|

||||

|

||||

If you don't use cloud-init, or have already created your Linux server you can use the installation script as per below:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/openfaas/faasd --depth=1

|

||||

cd faasd

|

||||

|

||||

./hack/install.sh

|

||||

```

|

||||

|

||||

> This approach also works for Raspberry Pi

|

||||

|

||||

It's recommended that you do not install Docker on the same host as faasd, since 1) they may both use different versions of containerd and 2) docker's networking rules can disrupt faasd's networking. When using faasd - make your faasd server a faasd server, and build container image on your laptop or in a CI pipeline.

|

||||

|

||||

#### Deployment tutorials

|

||||

|

||||

* [Use multipass on Windows, MacOS or Linux](/docs/MULTIPASS.md)

|

||||

* [Deploy to DigitalOcean with Terraform and TLS](https://www.openfaas.com/blog/faasd-tls-terraform/)

|

||||

* [Deploy to any IaaS with cloud-init](https://blog.alexellis.io/deploy-serverless-faasd-with-cloud-init/)

|

||||

* [Deploy faasd to your Raspberry Pi](https://blog.alexellis.io/faasd-for-lightweight-serverless/)

|

||||

|

||||

Terraform scripts:

|

||||

|

||||

* [Provision faasd on DigitalOcean with Terraform](docs/bootstrap/README.md)

|

||||

* [Provision faasd with TLS on DigitalOcean with Terraform](docs/bootstrap/digitalocean-terraform/README.md)

|

||||

|

||||

### Function and template store

|

||||

|

||||

For community functions see `faas-cli store --help`

|

||||

|

||||

For templates built by the community see: `faas-cli template store list`, you can also use the `dockerfile` template if you just want to migrate an existing service without the benefits of using a template.

|

||||

|

||||

### Community support

|

||||

|

||||

Commercial users and solo business owners should become OpenFaaS GitHub Sponsors to receive regular email updates on changes, tutorials and new features.

|

||||

|

||||

If you are learning faasd, or want to share your use-case, you can join the OpenFaaS Slack community.

|

||||

|

||||

* [Become an OpenFaaS GitHub Sponsor](https://github.com/sponsors/openfaas/)

|

||||

* [Join Slack](https://slack.openfaas.io/)

|

||||

|

||||

### Backlog, features and known issues

|

||||

|

||||

For completed features, WIP and upcoming roadmap see:

|

||||

|

||||

See [ROADMAP.md](docs/ROADMAP.md)

|

||||

|

||||

Are you looking to hack on faasd? Follow the [developer instructions](docs/DEV.md) for a manual installation, or use the `hack/install.sh` script and pick up from there.

|

||||

["Serverless For Everyone Else"](https://openfaas.gumroad.com/l/serverless-for-everyone-else)

|

||||

@ -10,17 +10,7 @@ packages:

|

||||

- git

|

||||

|

||||

runcmd:

|

||||

- curl -sLSf https://github.com/containerd/containerd/releases/download/v1.3.5/containerd-1.3.5-linux-amd64.tar.gz > /tmp/containerd.tar.gz && tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1

|

||||

- curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.5/containerd.service | tee /etc/systemd/system/containerd.service

|

||||

- systemctl daemon-reload && systemctl start containerd

|

||||

- systemctl enable containerd

|

||||

- /sbin/sysctl -w net.ipv4.conf.all.forwarding=1

|

||||

- mkdir -p /opt/cni/bin

|

||||

- curl -sSL https://github.com/containernetworking/plugins/releases/download/v0.8.5/cni-plugins-linux-amd64-v0.8.5.tgz | tar -xz -C /opt/cni/bin

|

||||

- mkdir -p /go/src/github.com/openfaas/

|

||||

- cd /go/src/github.com/openfaas/ && git clone --depth 1 --branch 0.10.2 https://github.com/openfaas/faasd

|

||||

- curl -fSLs "https://github.com/openfaas/faasd/releases/download/0.10.2/faasd" --output "/usr/local/bin/faasd" && chmod a+x "/usr/local/bin/faasd"

|

||||

- cd /go/src/github.com/openfaas/faasd/ && /usr/local/bin/faasd install

|

||||

- curl -sfL https://raw.githubusercontent.com/openfaas/faasd/master/hack/install.sh | bash -s -

|

||||

- systemctl status -l containerd --no-pager

|

||||

- journalctl -u faasd-provider --no-pager

|

||||

- systemctl status -l faasd-provider --no-pager

|

||||

|

||||

@ -50,54 +50,52 @@ func runInstall(_ *cobra.Command, _ []string) error {

|

||||

return err

|

||||

}

|

||||

|

||||

err := binExists("/usr/local/bin/", "faasd")

|

||||

if err != nil {

|

||||

if err := binExists("/usr/local/bin/", "faasd"); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.InstallUnit("faasd-provider", map[string]string{

|

||||

if err := systemd.InstallUnit("faasd-provider", map[string]string{

|

||||

"Cwd": faasdProviderWd,

|

||||

"SecretMountPath": path.Join(faasdwd, "secrets")})

|

||||

|

||||

if err != nil {

|

||||

"SecretMountPath": path.Join(faasdwd, "secrets")}); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.InstallUnit("faasd", map[string]string{"Cwd": faasdwd})

|

||||

if err != nil {

|

||||

if err := systemd.InstallUnit("faasd", map[string]string{"Cwd": faasdwd}); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.DaemonReload()

|

||||

if err != nil {

|

||||

if err := systemd.DaemonReload(); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.Enable("faasd-provider")

|

||||

if err != nil {

|

||||

if err := systemd.Enable("faasd-provider"); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.Enable("faasd")

|

||||

if err != nil {

|

||||

if err := systemd.Enable("faasd"); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.Start("faasd-provider")

|

||||

if err != nil {

|

||||

if err := systemd.Start("faasd-provider"); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

err = systemd.Start("faasd")

|

||||

if err != nil {

|

||||

if err := systemd.Start("faasd"); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

fmt.Println(`Check status with:

|

||||

fmt.Println(`

|

||||

The initial setup downloads various container images, which may take a

|

||||

minute or two depending on your connection.

|

||||

|

||||

Check the status of the faasd service with:

|

||||

|

||||

sudo journalctl -u faasd --lines 100 -f

|

||||

|

||||

Login with:

|

||||

sudo cat /var/lib/faasd/secrets/basic-auth-password | faas-cli login -s`)

|

||||

sudo -E cat /var/lib/faasd/secrets/basic-auth-password | faas-cli login -s`)

|

||||

|

||||

fmt.Println("")

|

||||

|

||||

return nil

|

||||

}

|

||||

@ -109,7 +107,16 @@ func binExists(folder, name string) error {

|

||||

}

|

||||

return nil

|

||||

}

|

||||

func ensureSecretsDir(folder string) error {

|

||||

if _, err := os.Stat(folder); err != nil {

|

||||

err = os.MkdirAll(folder, secretDirPermission)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

func ensureWorkingDir(folder string) error {

|

||||

if _, err := os.Stat(folder); err != nil {

|

||||

err = os.MkdirAll(folder, workingDirectoryPermission)

|

||||

|

||||
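The `ensureSecretsDir` helper added in this hunk follows a stat-then-create pattern. A minimal, self-contained sketch of that pattern is shown below, assuming a temporary directory stands in for the faasd working directory. The `demoEnsure` function and the `openfaas-fn` subdirectory are illustrative choices, not code from the repository.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// Same value as the constant shown in the cmd/provider.go hunk.
const secretDirPermission = 0755

// ensureSecretsDir creates folder (and any missing parents) when os.Stat
// reports an error for it, mirroring the function in the diff.
func ensureSecretsDir(folder string) error {
	if _, err := os.Stat(folder); err != nil {
		if err := os.MkdirAll(folder, secretDirPermission); err != nil {
			return err
		}
	}
	return nil
}

// demoEnsure is a hypothetical driver: it calls ensureSecretsDir twice on a
// nested path under a temp dir and reports whether a directory now exists.
func demoEnsure() (bool, error) {
	base, err := os.MkdirTemp("", "faasd-secrets-")
	if err != nil {
		return false, err
	}
	defer os.RemoveAll(base)

	target := filepath.Join(base, "secrets", "openfaas-fn")
	for i := 0; i < 2; i++ { // the second call should be a no-op
		if err := ensureSecretsDir(target); err != nil {
			return false, err
		}
	}

	info, err := os.Stat(target)
	if err != nil {
		return false, err
	}
	return info.IsDir(), nil
}

func main() {
	ok, err := demoEnsure()
	fmt.Println(ok, err)
}
```

Because any `os.Stat` error triggers `os.MkdirAll`, a permissions problem on a parent directory surfaces as the `MkdirAll` error rather than the `Stat` error.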

220

cmd/provider.go

@ -1,9 +1,8 @@

|

||||

package cmd

|

||||

|

||||

import (

|

||||

"encoding/json"

|

||||

"fmt"

|

||||

"io/ioutil"

|

||||

"io"

|

||||

"log"

|

||||

"net/http"

|

||||

"os"

|

||||

@ -14,6 +13,7 @@ import (

|

||||

"github.com/openfaas/faas-provider/logs"

|

||||

"github.com/openfaas/faas-provider/proxy"

|

||||

"github.com/openfaas/faas-provider/types"

|

||||

faasd "github.com/openfaas/faasd/pkg"

|

||||

"github.com/openfaas/faasd/pkg/cninetwork"

|

||||

faasdlogs "github.com/openfaas/faasd/pkg/logs"

|

||||

"github.com/openfaas/faasd/pkg/provider/config"

|

||||

@ -21,96 +21,152 @@ import (

|

||||

"github.com/spf13/cobra"

|

||||

)

|

||||

|

||||

const secretDirPermission = 0755

|

||||

|

||||

func makeProviderCmd() *cobra.Command {

|

||||

var command = &cobra.Command{

|

||||

Use: "provider",

|

||||

Short: "Run the faasd-provider",

|

||||

}

|

||||

|

||||

command.Flags().String("pull-policy", "Always", `Set to "Always" to force a pull of images upon deployment, or "IfNotPresent" to try to use a cached image.`)

|

||||

|

||||

command.RunE = func(_ *cobra.Command, _ []string) error {

|

||||

|

||||

pullPolicy, flagErr := command.Flags().GetString("pull-policy")

|

||||

if flagErr != nil {

|

||||

return flagErr

|

||||

}

|

||||

|

||||

alwaysPull := false

|

||||

if pullPolicy == "Always" {

|

||||

alwaysPull = true

|

||||

}

|

||||

|

||||

config, providerConfig, err := config.ReadFromEnv(types.OsEnv{})

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

log.Printf("faasd-provider starting..\tService Timeout: %s\n", config.WriteTimeout.String())

|

||||

printVersion()

|

||||

|

||||

wd, err := os.Getwd()

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

writeHostsErr := ioutil.WriteFile(path.Join(wd, "hosts"),

|

||||

[]byte(`127.0.0.1 localhost`), workingDirectoryPermission)

|

||||

|

||||

if writeHostsErr != nil {

|

||||

return fmt.Errorf("cannot write hosts file: %s", writeHostsErr)

|

||||

}

|

||||

|

||||

writeResolvErr := ioutil.WriteFile(path.Join(wd, "resolv.conf"),

|

||||

[]byte(`nameserver 8.8.8.8`), workingDirectoryPermission)

|

||||

|

||||

if writeResolvErr != nil {

|

||||

return fmt.Errorf("cannot write resolv.conf file: %s", writeResolvErr)

|

||||

}

|

||||

|

||||

cni, err := cninetwork.InitNetwork()

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

client, err := containerd.New(providerConfig.Sock)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

defer client.Close()

|

||||

|

||||

invokeResolver := handlers.NewInvokeResolver(client)

|

||||

|

||||

userSecretPath := path.Join(wd, "secrets")

|

||||

|

||||

bootstrapHandlers := types.FaaSHandlers{

|

||||

FunctionProxy: proxy.NewHandlerFunc(*config, invokeResolver),

|

||||

DeleteHandler: handlers.MakeDeleteHandler(client, cni),

|

||||

DeployHandler: handlers.MakeDeployHandler(client, cni, userSecretPath, alwaysPull),

|

||||

FunctionReader: handlers.MakeReadHandler(client),

|

||||

ReplicaReader: handlers.MakeReplicaReaderHandler(client),

|

||||

ReplicaUpdater: handlers.MakeReplicaUpdateHandler(client, cni),

|

||||

UpdateHandler: handlers.MakeUpdateHandler(client, cni, userSecretPath, alwaysPull),

|

||||

HealthHandler: func(w http.ResponseWriter, r *http.Request) {},

|

||||

InfoHandler: handlers.MakeInfoHandler(Version, GitCommit),

|

||||

ListNamespaceHandler: listNamespaces(),

|

||||

SecretHandler: handlers.MakeSecretHandler(client, userSecretPath),

|

||||

LogHandler: logs.NewLogHandlerFunc(faasdlogs.New(), config.ReadTimeout),

|

||||

}

|

||||

|

||||

log.Printf("Listening on TCP port: %d\n", *config.TCPPort)

|

||||

bootstrap.Serve(&bootstrapHandlers, config)

|

||||

return nil

|

||||

}

|

||||

command.RunE = runProviderE

|

||||

command.PreRunE = preRunE

|

||||

|

||||

return command

|

||||

}

|

||||

|

||||

func listNamespaces() func(w http.ResponseWriter, r *http.Request) {

|

||||

func runProviderE(cmd *cobra.Command, _ []string) error {

|

||||

|

||||

config, providerConfig, err := config.ReadFromEnv(types.OsEnv{})

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

log.Printf("faasd-provider starting..\tService Timeout: %s\n", config.WriteTimeout.String())

|

||||

printVersion()

|

||||

|

||||

wd, err := os.Getwd()

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

if err := os.WriteFile(path.Join(wd, "hosts"),

|

||||

[]byte(`127.0.0.1 localhost`), workingDirectoryPermission); err != nil {

|

||||

return fmt.Errorf("cannot write hosts file: %s", err)

|

||||

}

|

||||

|

||||

if err := os.WriteFile(path.Join(wd, "resolv.conf"),

|

||||

[]byte(`nameserver 8.8.8.8

|

||||

nameserver 8.8.4.4`), workingDirectoryPermission); err != nil {

|

||||

return fmt.Errorf("cannot write resolv.conf file: %s", err)

|

||||

}

|

||||

|

||||

cni, err := cninetwork.InitNetwork()

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

client, err := containerd.New(providerConfig.Sock)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

defer client.Close()

|

||||

|

||||

invokeResolver := handlers.NewInvokeResolver(client)

|

||||

|

||||

baseUserSecretsPath := path.Join(wd, "secrets")

|

||||

if err := moveSecretsToDefaultNamespaceSecrets(

|

||||

baseUserSecretsPath,

|

||||

faasd.DefaultFunctionNamespace); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

alwaysPull := true

|

||||

bootstrapHandlers := types.FaaSHandlers{

|

||||

FunctionProxy: httpHeaderMiddleware(proxy.NewHandlerFunc(*config, invokeResolver, false)),

|

||||

DeleteFunction: httpHeaderMiddleware(handlers.MakeDeleteHandler(client, cni)),

|

||||

DeployFunction: httpHeaderMiddleware(handlers.MakeDeployHandler(client, cni, baseUserSecretsPath, alwaysPull)),

|

||||

FunctionLister: httpHeaderMiddleware(handlers.MakeReadHandler(client)),

|

||||

FunctionStatus: httpHeaderMiddleware(handlers.MakeReplicaReaderHandler(client)),

|

||||

ScaleFunction: httpHeaderMiddleware(handlers.MakeReplicaUpdateHandler(client, cni)),

|

||||

UpdateFunction: httpHeaderMiddleware(handlers.MakeUpdateHandler(client, cni, baseUserSecretsPath, alwaysPull)),

|

||||

Health: httpHeaderMiddleware(func(w http.ResponseWriter, r *http.Request) {}),

|

||||

Info: httpHeaderMiddleware(handlers.MakeInfoHandler(faasd.Version, faasd.GitCommit)),

|

||||

ListNamespaces: httpHeaderMiddleware(handlers.MakeNamespacesLister(client)),

|

||||

Secrets: httpHeaderMiddleware(handlers.MakeSecretHandler(client.NamespaceService(), baseUserSecretsPath)),

|

||||

Logs: httpHeaderMiddleware(logs.NewLogHandlerFunc(faasdlogs.New(), config.ReadTimeout)),

|

||||

MutateNamespace: httpHeaderMiddleware(handlers.MakeMutateNamespace(client)),

|

||||

}

|

||||

|

||||

log.Printf("Listening on: 0.0.0.0:%d", *config.TCPPort)

|

||||

bootstrap.Serve(cmd.Context(), &bootstrapHandlers, config)

|

||||

return nil

|

||||

}

|

||||

|

||||

/*

|

||||

* Multiple namespace support was added after release 0.13.0

* This function helps users migrate to the multiple namespace support in faasd

|

||||

*/

|

||||

func moveSecretsToDefaultNamespaceSecrets(baseSecretPath string, defaultNamespace string) error {

|

||||

newSecretPath := path.Join(baseSecretPath, defaultNamespace)

|

||||

|

||||

err := ensureSecretsDir(newSecretPath)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

files, err := os.ReadDir(baseSecretPath)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

for _, f := range files {

|

||||

if !f.IsDir() {

|

||||

|

||||

newPath := path.Join(newSecretPath, f.Name())

|

||||

|

||||

// A non-nil error means the file wasn't found in the

|

||||

// destination path

|

||||

if _, err := os.Stat(newPath); err != nil {

|

||||

oldPath := path.Join(baseSecretPath, f.Name())

|

||||

|

||||

if err := copyFile(oldPath, newPath); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

log.Printf("[Migration] Copied %s to %s", oldPath, newPath)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

func copyFile(src, dst string) error {

|

||||

inputFile, err := os.Open(src)

|

||||

if err != nil {

|

||||

return fmt.Errorf("opening %s failed %w", src, err)

|

||||

}

|

||||

defer inputFile.Close()

|

||||

|

||||

outputFile, err := os.OpenFile(dst, os.O_CREATE|os.O_WRONLY|os.O_APPEND, secretDirPermission)

|

||||

if err != nil {

|

||||

return fmt.Errorf("opening %s failed %w", dst, err)

|

||||

}

|

||||

defer outputFile.Close()

|

||||

|

||||

// Changed from os.Rename due to issue in #201

|

||||

if _, err := io.Copy(outputFile, inputFile); err != nil {

|

||||

return fmt.Errorf("writing into %s failed %w", outputFile.Name(), err)

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

func httpHeaderMiddleware(next http.HandlerFunc) http.HandlerFunc {

|

||||

return func(w http.ResponseWriter, r *http.Request) {

|

||||

list := []string{""}

|

||||

out, _ := json.Marshal(list)

|

||||

w.Write(out)

|

||||

w.Header().Set("X-OpenFaaS-EULA", "openfaas-ce")

|

||||

next.ServeHTTP(w, r)

|

||||

}

|

||||

}

|

||||

|

||||
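In this rendering the removed body of `listNamespaces` and the added body of `httpHeaderMiddleware` appear interleaved without +/- markers. The sketch below isolates what the new middleware appears to do on its own: set the `X-OpenFaaS-EULA` header, then call the wrapped handler. The `emptyHealth` handler, the `eulaHeaderFor` helper and the `/healthz` path are assumptions made for the demonstration.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// httpHeaderMiddleware sets the EULA header before delegating to next.
// Headers have to be set before the wrapped handler writes the body.
func httpHeaderMiddleware(next http.HandlerFunc) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("X-OpenFaaS-EULA", "openfaas-ce")
		next.ServeHTTP(w, r)
	}
}

// emptyHealth stands in for the no-op Health handler in the diff.
func emptyHealth(w http.ResponseWriter, r *http.Request) {}

// eulaHeaderFor is a hypothetical helper that runs a wrapped handler against
// an in-memory recorder and returns the header value the client would see.
func eulaHeaderFor(h http.HandlerFunc) string {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/healthz", nil)
	httpHeaderMiddleware(h)(rec, req)
	return rec.Result().Header.Get("X-OpenFaaS-EULA")
}

func main() {
	fmt.Println(eulaHeaderFor(emptyHealth))
}
```

Wrapping every entry of `types.FaaSHandlers` with the same function, as the hunk does, keeps the header logic in one place instead of repeating it in each handler.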

36

cmd/root.go

@ -4,6 +4,7 @@ import (

|

||||

"fmt"

|

||||

|

||||

"github.com/morikuni/aec"

|

||||

"github.com/openfaas/faasd/pkg"

|

||||

"github.com/spf13/cobra"

|

||||

)

|

||||

|

||||

@ -16,25 +17,15 @@ func init() {

|

||||

rootCommand.AddCommand(installCmd)

|

||||

rootCommand.AddCommand(makeProviderCmd())

|

||||

rootCommand.AddCommand(collectCmd)

|

||||

rootCommand.AddCommand(makeServiceCmd())

|

||||

}

|

||||

|

||||

func RootCommand() *cobra.Command {

|

||||

return rootCommand

|

||||

}

|

||||

|

||||

var (

|

||||

// GitCommit Git Commit SHA

|

||||

GitCommit string

|

||||

// Version version of the CLI

|

||||

Version string

|

||||

)

|

||||

|

||||

// Execute faasd

|

||||

func Execute(version, gitCommit string) error {

|

||||

|

||||

// Get Version and GitCommit values from main.go.

|

||||

Version = version

|

||||

GitCommit = gitCommit

|

||||

func Execute() error {

|

||||

|

||||

if err := rootCommand.Execute(); err != nil {

|

||||

return err

|

||||

@ -46,7 +37,16 @@ var rootCommand = &cobra.Command{

|

||||

Use: "faasd",

|

||||

Short: "Start faasd",

|

||||

Long: `

|

||||

faasd - serverless without Kubernetes

|

||||

faasd Community Edition (CE):

|

||||

|

||||

Learn how to build, secure, and monitor functions with faasd with

|

||||

the eBook:

|

||||

|

||||

https://openfaas.gumroad.com/l/serverless-for-everyone-else

|

||||

|

||||

License: OpenFaaS CE EULA with faasd addendum:

|

||||

|

||||

https://github.com/openfaas/faasd/blob/master/EULA.md

|

||||

`,

|

||||

RunE: runRootCommand,

|

||||

SilenceUsage: true,

|

||||

@ -73,7 +73,7 @@ func parseBaseCommand(_ *cobra.Command, _ []string) {

|

||||

}

|

||||

|

||||

func printVersion() {

|

||||

fmt.Printf("faasd version: %s\tcommit: %s\n", GetVersion(), GitCommit)

|

||||

fmt.Printf("faasd Community Edition (CE) version: %s\tcommit: %s\n", pkg.GetVersion(), pkg.GitCommit)

|

||||

}

|

||||

|

||||

func printLogo() {

|

||||

@ -81,14 +81,6 @@ func printLogo() {

|

||||

fmt.Println(logoText)

|

||||

}

|

||||

|

||||

// GetVersion get latest version

|

||||

func GetVersion() string {

|

||||

if len(Version) == 0 {

|

||||

return "dev"

|

||||

}

|

||||

return Version

|

||||

}

|

||||

|

||||

// Logo for version and root command

|

||||

const Logo = ` __ _

|

||||

/ _| __ _ __ _ ___ __| |

|

||||

|

||||

22

cmd/service.go

Normal file

@ -0,0 +1,22 @@

|

||||

package cmd

|

||||

|

||||

import "github.com/spf13/cobra"

|

||||

|

||||

func makeServiceCmd() *cobra.Command {

|

||||

var command = &cobra.Command{

|

||||

Use: "service",

|

||||

Short: "Manage services",

|

||||

Long: `Manage services created by faasd from the docker-compose.yml file`,

|

||||

}

|

||||

|

||||

command.RunE = runServiceE

|

||||

|

||||

command.AddCommand(makeServiceLogsCmd())

|

||||

return command

|

||||

}

|

||||

|

||||

func runServiceE(cmd *cobra.Command, args []string) error {

|

||||

|

||||

return cmd.Help()

|

||||

|

||||

}

|

||||

89

cmd/service_logs.go

Normal file

@ -0,0 +1,89 @@

|

||||

package cmd

|

||||

|

||||

import (

|

||||

"context"

|

||||

"errors"

|

||||

"fmt"

|

||||

"os"

|

||||

"time"

|

||||

|

||||

goexecute "github.com/alexellis/go-execute/v2"

|

||||

"github.com/spf13/cobra"

|

||||

)

|

||||

|

||||

func makeServiceLogsCmd() *cobra.Command {

|

||||

var command = &cobra.Command{

|

||||

Use: "logs",

|

||||

Short: "View logs for a service",

|

||||

Long: `View logs for a service created by faasd from the docker-compose.yml file.`,

|

||||

Example: ` ## View logs for the gateway for the last hour

|

||||

faasd service logs gateway --since 1h

|

||||

|

||||

## View logs for the cron-connector, and tail them

|

||||

faasd service logs cron-connector -f

|

||||

`,

|

||||

}

|

||||

|

||||

command.Flags().Duration("since", 10*time.Minute, "How far back in time to include logs")

|

||||

command.Flags().BoolP("follow", "f", false, "Follow the logs")

|

||||

|

||||

command.RunE = runServiceLogsE

|

||||

command.PreRunE = preRunServiceLogsE

|

||||

|

||||

return command

|

||||

}

|

||||

|

||||

func runServiceLogsE(cmd *cobra.Command, args []string) error {

|

||||

name := args[0]

|

||||

|

||||

namespace, _ := cmd.Flags().GetString("namespace")

|

||||

follow, _ := cmd.Flags().GetBool("follow")

|

||||

since, _ := cmd.Flags().GetDuration("since")

|

||||

|

||||

journalTask := goexecute.ExecTask{

|

||||

Command: "journalctl",

|

||||

Args: []string{"-o", "cat", "-t", fmt.Sprintf("%s:%s", namespace, name)},

|

||||

StreamStdio: true,

|

||||

}

|

||||

|

||||

if follow {

|

||||

journalTask.Args = append(journalTask.Args, "-f")

|

||||

}

|

||||

|

||||

if since != 0 {

|

||||

// Calculate the timestamp that is the 'since' duration ago

|

||||

sinceTime := time.Now().Add(-since)

|

||||

// Format according to journalctl's expected format: "2012-10-30 18:17:16"

|

||||

formattedTime := sinceTime.Format("2006-01-02 15:04:05")

|

||||

journalTask.Args = append(journalTask.Args, fmt.Sprintf("--since=%s", formattedTime))

|

||||

}

|

||||

|

||||

res, err := journalTask.Execute(context.Background())

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

if res.ExitCode != 0 {

|

||||

return fmt.Errorf("failed to get logs for service %s: %s", name, res.Stderr)

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

func preRunServiceLogsE(cmd *cobra.Command, args []string) error {

|

||||

|

||||

if os.Geteuid() != 0 {

|

||||

return errors.New("this command must be run as root")

|

||||

}

|

||||

|

||||

if len(args) == 0 {

|

||||

return errors.New("service name is required as an argument")

|

||||

}

|

||||

|

||||

namespace, _ := cmd.Flags().GetString("namespace")

|

||||

if namespace == "" {

|

||||

return errors.New("namespace is required")

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||
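The argument handling in `runServiceLogsE` can be easier to follow as a pure function. The sketch below rebuilds the `journalctl` argument list from the same inputs, taking the current time as a parameter so the result is deterministic. `journalArgs` and `exampleArgs` are hypothetical names, and the `openfaas` namespace is an assumed value because the flag's default is not shown in this diff.

```go
package main

import (
	"fmt"
	"time"
)

// journalArgs mirrors how the new command assembles journalctl arguments:
// a "<namespace>:<name>" syslog identifier, optional follow, optional --since.
func journalArgs(namespace, name string, follow bool, since time.Duration, now time.Time) []string {
	args := []string{"-o", "cat", "-t", fmt.Sprintf("%s:%s", namespace, name)}

	if follow {
		args = append(args, "-f")
	}

	if since != 0 {
		// Subtract the duration, then format as "2012-10-30 18:17:16".
		sinceTime := now.Add(-since)
		args = append(args, fmt.Sprintf("--since=%s", sinceTime.Format("2006-01-02 15:04:05")))
	}

	return args
}

// exampleArgs fixes the clock at an arbitrary instant for a repeatable demo.
func exampleArgs() []string {
	now := time.Date(2025, time.January, 2, 15, 0, 0, 0, time.UTC)
	return journalArgs("openfaas", "gateway", true, time.Hour, now)
}

func main() {
	fmt.Println(exampleArgs())
}
```

The `--since` value contains a space, which is safe here because each element of the slice is passed to the process as a single argument rather than through a shell.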

23

cmd/up.go

@ -2,7 +2,6 @@ package cmd

|

||||

|

||||

import (

|

||||

"fmt"

|

||||

"io/ioutil"

|

||||

"log"

|

||||

"os"

|

||||

"os/signal"

|

||||

@ -16,6 +15,7 @@ import (

|

||||

"github.com/spf13/cobra"

|

||||

flag "github.com/spf13/pflag"

|

||||

|

||||

units "github.com/docker/go-units"

|

||||

"github.com/openfaas/faasd/pkg"

|

||||

)

|

||||

|

||||

@ -38,9 +38,10 @@ func init() {

|

||||

}

|

||||

|

||||

var upCmd = &cobra.Command{

|

||||

Use: "up",

|

||||

Short: "Start faasd",

|

||||

RunE: runUp,

|

||||

Use: "up",

|

||||

Short: "Start faasd",

|

||||

RunE: runUp,

|

||||

PreRunE: preRunE,

|

||||

}

|

||||

|

||||

func runUp(cmd *cobra.Command, _ []string) error {

|

||||

@ -68,7 +69,7 @@ func runUp(cmd *cobra.Command, _ []string) error {

|

||||

return err

|

||||

}

|

||||

|

||||

log.Printf("Supervisor created in: %s\n", time.Since(start).String())

|

||||

log.Printf("Supervisor created in: %s\n", units.HumanDuration(time.Since(start)))

|

||||

|

||||

start = time.Now()

|

||||

if err := supervisor.Start(services); err != nil {

|

||||

@ -76,7 +77,7 @@ func runUp(cmd *cobra.Command, _ []string) error {

|

||||

}

|

||||

defer supervisor.Close()

|

||||

|

||||

log.Printf("Supervisor init done in: %s\n", time.Since(start).String())

|

||||

log.Printf("Supervisor init done in: %s\n", units.HumanDuration(time.Since(start)))

|

||||

|

||||

shutdownTimeout := time.Second * 1

|

||||

timeout := time.Second * 60

|

||||

@ -165,7 +166,7 @@ func makeFile(filePath, fileContents string) error {

|

||||

return nil

|

||||

} else if os.IsNotExist(err) {

|

||||

log.Printf("Writing to: %q\n", filePath)

|

||||

return ioutil.WriteFile(filePath, []byte(fileContents), workingDirectoryPermission)

|

||||

return os.WriteFile(filePath, []byte(fileContents), workingDirectoryPermission)

|

||||

} else {

|

||||

return err

|

||||

}

|

||||

@ -203,3 +204,11 @@ func parseUpFlags(cmd *cobra.Command) (upConfig, error) {

|

||||

parsed.workingDir = faasdwd

|

||||

return parsed, err

|

||||

}

|

||||

|

||||

func preRunE(cmd *cobra.Command, _ []string) error {

|

||||

if err := pkg.ConnectivityCheck(); err != nil {

|

||||

return fmt.Errorf("the OpenFaaS CE EULA requires Internet access, upgrade to faasd Pro to continue")

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

@ -1,47 +1,45 @@

|

||||

version: "3.7"

|

||||

services:

|

||||

basic-auth-plugin:

|

||||

image: ghcr.io/openfaas/basic-auth:0.20.5

|

||||

environment:

|

||||

- port=8080

|

||||

- secret_mount_path=/run/secrets

|

||||

- user_filename=basic-auth-user

|

||||

- pass_filename=basic-auth-password

|

||||

volumes:

|

||||

# we assume cwd == /var/lib/faasd

|

||||

- type: bind

|

||||

source: ./secrets/basic-auth-password

|

||||

target: /run/secrets/basic-auth-password

|

||||

- type: bind

|

||||

source: ./secrets/basic-auth-user

|

||||

target: /run/secrets/basic-auth-user

|

||||

cap_add:

|

||||

- CAP_NET_RAW

|

||||

|

||||

nats:

|

||||

image: docker.io/library/nats-streaming:0.11.2

|

||||

image: docker.io/library/nats-streaming:0.25.6

|

||||

# nobody

|

||||

user: "65534"

|

||||

command:

|

||||

- "/nats-streaming-server"

|

||||

- "-m"

|

||||

- "8222"

|

||||

- "--store=memory"

|

||||

- "--store=file"

|

||||

- "--dir=/nats"

|

||||

- "--cluster_id=faas-cluster"

|

||||

volumes:

|

||||

# Data directory

|

||||

- type: bind

|

||||

source: ./nats

|

||||

target: /nats

|

||||

# ports:

|

||||

# - "127.0.0.1:8222:8222"

|

||||

|

||||

prometheus:

|

||||

image: docker.io/prom/prometheus:v2.14.0

|

||||

image: docker.io/prom/prometheus:v3.1.0

|

||||

# nobody

|

||||

user: "65534"

|

||||

volumes:

|

||||

# Config directory

|

||||

- type: bind

|

||||

source: ./prometheus.yml

|

||||

target: /etc/prometheus/prometheus.yml

|

||||

# Data directory

|

||||

- type: bind

|

||||

source: ./prometheus

|

||||

target: /prometheus

|

||||

cap_add:

|

||||

- CAP_NET_RAW

|

||||

ports:

|

||||

- "127.0.0.1:9090:9090"

|

||||

|

||||

gateway:

|

||||

image: ghcr.io/openfaas/gateway:0.20.8

|

||||

image: ghcr.io/openfaas/gateway:0.27.12

|

||||

environment:

|

||||

- basic_auth=true

|

||||

- functions_provider_url=http://faasd-provider:8081/

|

||||

@ -51,8 +49,6 @@ services:

|

||||

- upstream_timeout=65s

|

||||

- faas_nats_address=nats

|

||||

- faas_nats_port=4222

|

||||

- auth_proxy_url=http://basic-auth-plugin:8080/validate

|

||||

- auth_proxy_pass_body=false

|

||||

- secret_mount_path=/run/secrets

|

||||

- scale_from_zero=true

|

||||

- function_namespace=openfaas-fn

|

||||

@ -67,14 +63,13 @@ services:

|

||||

cap_add:

|

||||

- CAP_NET_RAW

|

||||

depends_on:

|

||||

- basic-auth-plugin

|

||||

- nats

|

||||

- prometheus

|

||||

ports:

|

||||

- "8080:8080"

|

||||

|

||||

queue-worker:

|

||||

image: docker.io/openfaas/queue-worker:0.11.2

|

||||

image: ghcr.io/openfaas/queue-worker:0.14.2

|

||||

environment:

|

||||

- faas_nats_address=nats

|

||||

- faas_nats_port=4222

|

||||

|

||||

65

docs/DEV.md

@ -1,7 +1,11 @@

|

||||

## Instructions for hacking on faasd itself

|

||||

## Instructions for building and testing faasd locally

|

||||

|

||||