Mirror of https://github.com/openfaas/faasd.git (synced 2025-06-18 12:06:36 +00:00)

Compare commits

187 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| 0bf221b286 | |||

| e8c2eeb052 | |||

| 6c0f91e810 | |||

| 27ba86fb52 | |||

| e99c49d4e5 | |||

| 7f39890963 | |||

| bc2fe46023 | |||

| 6a865769ec | |||

| 42b831cc57 | |||

| 13b71cd478 | |||

| afaacd88a2 | |||

| abb62aedc2 | |||

| 8444f8ac38 | |||

| 795ea368ff | |||

| 621fe6b01a | |||

| 507ee0a7f7 | |||

| 8f6d2fa6ec | |||

| 0e6983b351 | |||

| 31fc597205 | |||

| d7fea9173e | |||

| 3d0adec851 | |||

| b475aa8884 | |||

| 123ce3b849 | |||

| 17d09bb185 | |||

| 789e9a29fe | |||

| b575c02338 | |||

| cd4add32e1 | |||

| e199827883 | |||

| 87f105d581 | |||

| c6b2418461 | |||

| 237a026b79 | |||

| 4e8a1d810a | |||

| d4454758d5 | |||

| 7afaa4a30b | |||

| 1aa7a2a320 | |||

| a4a33b8596 | |||

| 954a61cee1 | |||

| 294ef0f17f | |||

| 32c00f0e9e | |||

| 2533c065bf | |||

| 9c04b8dfd7 | |||

| c4936133f6 | |||

| 87f993847c | |||

| 9cdcac2c5c | |||

| a8a3d73bc0 | |||

| f33964310a | |||

| 03ad56e573 | |||

| baea3006cb | |||

| cb786d7c84 | |||

| fc02b4c6fa | |||

| ecee5d6eed | |||

| 8159fb88b7 | |||

| a7f74f5163 | |||

| baa9a1821c | |||

| 1a8e879f42 | |||

| 0d9c846117 | |||

| 8db2e2a54f | |||

| 0c790bbdae | |||

| 797ff0875c | |||

| bc859e595f | |||

| 4e9b6070c1 | |||

| 1862c0e1f5 | |||

| ae909c8df4 | |||

| 6f76a05bdf | |||

| 8f022cfb21 | |||

| ff9225d45e | |||

| 1da2763a96 | |||

| 666d6c4871 | |||

| 2248a8a071 | |||

| 908bbfda9f | |||

| b40a7cbe58 | |||

| a66f65c2b9 | |||

| ac1cc16f0c | |||

| 716ef6f51c | |||

| 92523c496b | |||

| 5561c5cc67 | |||

| 6c48911412 | |||

| 3ce724512b | |||

| 8d91895c79 | |||

| 7ca531a8b5 | |||

| 94210cc7f1 | |||

| 9e5eb84236 | |||

| b20e5614c7 | |||

| 40829bbf88 | |||

| 87f49b0289 | |||

| b817479828 | |||

| faae82aa1c | |||

| cddc10acbe | |||

| 1c8e8bb615 | |||

| 6e537d1fde | |||

| c314af4f98 | |||

| 4189cfe52c | |||

| 9e2f571cf7 | |||

| 93825e8354 | |||

| 6752a61a95 | |||

| 16a8d2ac6c | |||

| 68ac4dfecb | |||

| c2480ab30a | |||

| d978c19e23 | |||

| 038b92c5b4 | |||

| f1a1f374d9 | |||

| 24692466d8 | |||

| bdfff4e8c5 | |||

| e3589a4ed1 | |||

| b865e55c85 | |||

| 89a728db16 | |||

| 2237dfd44d | |||

| 4423a5389a | |||

| a6a4502c89 | |||

| 8b86e00128 | |||

| 3039773fbd | |||

| 5b92e7793d | |||

| 88f1aa0433 | |||

| 2b9efd29a0 | |||

| db5312158c | |||

| 26debca616 | |||

| 50de0f34bb | |||

| d64edeb648 | |||

| 42b9cc6b71 | |||

| 25c553a87c | |||

| 8bc39f752e | |||

| cbff6fa8f6 | |||

| 3e29408518 | |||

| 04f1807d92 | |||

| 35e017b526 | |||

| e54da61283 | |||

| 84353d0cae | |||

| e33a60862d | |||

| 7b67ff22e6 | |||

| 19abc9f7b9 | |||

| 480f566819 | |||

| cece6cf1ef | |||

| 22882e2643 | |||

| 667d74aaf7 | |||

| 9dcdbfb7e3 | |||

| 3a9b81200e | |||

| 734425de25 | |||

| 70e7e0d25a | |||

| be8574ecd0 | |||

| a0110b3019 | |||

| 87c71b090f | |||

| dc8667d36a | |||

| 137d199cb5 | |||

| 560c295eb0 | |||

| 93325b713e | |||

| 2307fc71c5 | |||

| 853830c018 | |||

| 262770a0b7 | |||

| 0efb6d492f | |||

| 27cfe465ca | |||

| d6c4ebaf96 | |||

| e9d1423315 | |||

| 4bca5c36a5 | |||

| 10e7a2f07c | |||

| 4775a9a77c | |||

| e07186ed5b | |||

| 2454c2a807 | |||

| 8bd2ba5334 | |||

| c379b0ebcc | |||

| 226a20c362 | |||

| 02c9dcf74d | |||

| 0b88fc232d | |||

| fcd1c9ab54 | |||

| 592f3d3cc0 | |||

| b06364c3f4 | |||

| 75fd07797c | |||

| 65c2cb0732 | |||

| 44df1cef98 | |||

| 881f5171ee | |||

| 970015ac85 | |||

| 283e8ed2c1 | |||

| d49011702b | |||

| eb369fbb16 | |||

| 040b426a19 | |||

| 251cb2d08a | |||

| 5c48ac1a70 | |||

| 7c166979c9 | |||

| 36843ad1d4 | |||

| 3bc041ba04 | |||

| dd3f9732b4 | |||

| 6c10d18f59 | |||

| 969fc566e1 | |||

| a4710db664 | |||

| df2de7ee5c | |||

| 2d8b2b1f73 | |||

| 6e5bc27d9a | |||

| 2eb1df9517 |

2

.gitattributes

vendored

Normal file

@@ -0,0 +1,2 @@
+vendor/** linguist-generated=true
+Gopkg.lock linguist-generated=true

7

.github/ISSUE_TEMPLATE.md

vendored

@@ -8,10 +8,13 @@
 <!--- If describing a bug, tell us what happens instead of the expected behavior -->
 <!--- If suggesting a change/improvement, explain the difference from current behavior -->
 
-## Possible Solution
+## List all Possible Solutions
 <!--- Not obligatory, but suggest a fix/reason for the bug, -->
 <!--- or ideas how to implement the addition or change -->
 
+## List the one solution that you would recommend
+<!--- If you were to be on the hook for this change. -->
+
 ## Steps to Reproduce (for bugs)
 <!--- Provide a link to a live example, or an unambiguous set of steps to -->
 <!--- reproduce this bug. Include code to reproduce, if relevant -->
@@ -38,4 +41,6 @@ containerd -version
 uname -a
 
 cat /etc/os-release
+
+faasd version
 ```

36

.github/workflows/build.yaml

vendored

Normal file

@@ -0,0 +1,36 @@
+name: build
+
+on:
+  push:
+    branches: [ master ]
+  pull_request:
+    branches: [ master ]
+
+jobs:
+  build:
+    env:
+      GO111MODULE: off
+    strategy:
+      matrix:
+        go-version: [1.15.x]
+        os: [ubuntu-latest]
+    runs-on: ${{ matrix.os }}
+    steps:
+      - uses: actions/checkout@master
+        with:
+          fetch-depth: 1
+      - name: Install Go
+        uses: actions/setup-go@v2
+        with:
+          go-version: ${{ matrix.go-version }}
+
+      - name: test
+        run: make test
+      - name: dist
+        run: make dist
+      - name: prepare-test
+        run: make prepare-test
+      - name: test e2e
+        run: make test-e2e
+
+

30

.github/workflows/publish.yaml

vendored

Normal file

@@ -0,0 +1,30 @@
+name: publish
+
+on:
+  push:
+    tags:
+      - '*'
+
+jobs:
+  publish:
+    strategy:
+      matrix:
+        go-version: [ 1.15.x ]
+        os: [ ubuntu-latest ]
+    runs-on: ${{ matrix.os }}
+    steps:
+      - uses: actions/checkout@master
+        with:
+          fetch-depth: 1
+      - name: Install Go
+        uses: actions/setup-go@v2
+        with:
+          go-version: ${{ matrix.go-version }}
+      - name: Make publish
+        run: make publish
+      - name: Upload release binaries
+        uses: alexellis/upload-assets@0.2.2
+        env:
+          GITHUB_TOKEN: ${{ github.token }}
+        with:
+          asset_paths: '["./bin/faasd*"]'

2

.gitignore

vendored

@@ -6,3 +6,5 @@ hosts
 basic-auth-user
 basic-auth-password
 /bin
+/secrets
+.vscode

30

.travis.yml

@@ -1,30 +0,0 @@
-sudo: required
-language: go
-
-go:
-  - '1.12'
-
-addons:
-  apt:
-    packages:
-      - runc
-
-script:
-  - make dist
-  - make prepare-test
-  - make test-e2e
-
-deploy:
-  provider: releases
-  api_key:
-    secure: bccOSB+Mbk5ZJHyJfX82Xg/3/7mxiAYHx7P5m5KS1ncDuRpJBFjDV8Nx2PWYg341b5SMlCwsS3IJ9NkoGvRSKK+3YqeNfTeMabVNdKC2oL1i+4pdxGlbl57QXkzT4smqE8AykZEo4Ujk42rEr3e0gSHT2rXkV+Xt0xnoRVXn2tSRUDwsmwANnaBj6KpH2SjJ/lsfTifxrRB65uwcePaSjkqwR6htFraQtpONC9xYDdek6EoVQmoft/ONZJqi7HR+OcA1yhSt93XU6Vaf3678uLlPX9c/DxgIU9UnXRaOd0UUEiTHaMMWDe/bJSrKmgL7qY05WwbGMsXO/RdswwO1+zwrasrwf86SjdGX/P9AwobTW3eTEiBqw2J77UVbvLzDDoyJ5KrkbHRfPX8aIPO4OG9eHy/e7C3XVx4qv9bJBXQ3qD9YJtei9jmm8F/MCdPWuVYC0hEvHtuhP/xMm4esNUjFM5JUfDucvAuLL34NBYHBDP2XNuV4DkgQQPakfnlvYBd7OqyXCU6pzyWSasXpD1Rz8mD/x8aTUl2Ya4bnXQ8qAa5cnxfPqN2ADRlTw1qS7hl6LsXzNQ6r1mbuh/uFi67ybElIjBTfuMEeJOyYHkkLUHIBpooKrPyr0luAbf0By2D2N/eQQnM/RpixHNfZG/mvXx8ZCrs+wxgvG1Rm7rM=
-  file:
-    - ./bin/faasd
-    - ./bin/faasd-armhf
-    - ./bin/faasd-arm64
-  skip_cleanup: true
-  on:
-    tags: true
-
-env:
-  - GO111MODULE=off

553

Gopkg.lock

generated

@@ -1,553 +0,0 @@

|

||||

# This file is autogenerated, do not edit; changes may be undone by the next 'dep ensure'.

|

||||

|

||||

|

||||

[[projects]]

|

||||

digest = "1:d5e752c67b445baa5b6cb6f8aa706775c2aa8e41aca95a0c651520ff2c80361a"

|

||||

name = "github.com/Microsoft/go-winio"

|

||||

packages = [

|

||||

".",

|

||||

"pkg/guid",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "6c72808b55902eae4c5943626030429ff20f3b63"

|

||||

version = "v0.4.14"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:b28f788c0be42a6d26f07b282c5ff5f814ab7ad5833810ef0bc5f56fb9bedf11"

|

||||

name = "github.com/Microsoft/hcsshim"

|

||||

packages = [

|

||||

".",

|

||||

"internal/cow",

|

||||

"internal/hcs",

|

||||

"internal/hcserror",

|

||||

"internal/hns",

|

||||

"internal/interop",

|

||||

"internal/log",

|

||||

"internal/logfields",

|

||||

"internal/longpath",

|

||||

"internal/mergemaps",

|

||||

"internal/oc",

|

||||

"internal/safefile",

|

||||

"internal/schema1",

|

||||

"internal/schema2",

|

||||

"internal/timeout",

|

||||

"internal/vmcompute",

|

||||

"internal/wclayer",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "9e921883ac929bbe515b39793ece99ce3a9d7706"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:74860eb071d52337d67e9ffd6893b29affebd026505aa917ec23131576a91a77"

|

||||

name = "github.com/alexellis/go-execute"

|

||||

packages = ["pkg/v1"]

|

||||

pruneopts = "UT"

|

||||

revision = "961405ea754427780f2151adff607fa740d377f7"

|

||||

version = "0.3.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:6076d857867a70e87dd1994407deb142f27436f1293b13e75cc053192d14eb0c"

|

||||

name = "github.com/alexellis/k3sup"

|

||||

packages = ["pkg/env"]

|

||||

pruneopts = "UT"

|

||||

revision = "f9a4adddc732742a9ee7962609408fb0999f2d7b"

|

||||

version = "0.7.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:386ca0ac781cc1b630b3ed21725759770174140164b3faf3810e6ed6366a970b"

|

||||

name = "github.com/containerd/containerd"

|

||||

packages = [

|

||||

".",

|

||||

"api/services/containers/v1",

|

||||

"api/services/content/v1",

|

||||

"api/services/diff/v1",

|

||||

"api/services/events/v1",

|

||||

"api/services/images/v1",

|

||||

"api/services/introspection/v1",

|

||||

"api/services/leases/v1",

|

||||

"api/services/namespaces/v1",

|

||||

"api/services/snapshots/v1",

|

||||

"api/services/tasks/v1",

|

||||

"api/services/version/v1",

|

||||

"api/types",

|

||||

"api/types/task",

|

||||

"archive",

|

||||

"archive/compression",

|

||||

"cio",

|

||||

"containers",

|

||||

"content",

|

||||

"content/proxy",

|

||||

"defaults",

|

||||

"diff",

|

||||

"errdefs",

|

||||

"events",

|

||||

"events/exchange",

|

||||

"filters",

|

||||

"identifiers",

|

||||

"images",

|

||||

"images/archive",

|

||||

"labels",

|

||||

"leases",

|

||||

"leases/proxy",

|

||||

"log",

|

||||

"mount",

|

||||

"namespaces",

|

||||

"oci",

|

||||

"pkg/dialer",

|

||||

"platforms",

|

||||

"plugin",

|

||||

"reference",

|

||||

"remotes",

|

||||

"remotes/docker",

|

||||

"remotes/docker/schema1",

|

||||

"rootfs",

|

||||

"runtime/linux/runctypes",

|

||||

"runtime/v2/runc/options",

|

||||

"snapshots",

|

||||

"snapshots/proxy",

|

||||

"sys",

|

||||

"version",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "ff48f57fc83a8c44cf4ad5d672424a98ba37ded6"

|

||||

version = "v1.3.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:7e9da25c7a952c63e31ed367a88eede43224b0663b58eb452870787d8ddb6c70"

|

||||

name = "github.com/containerd/continuity"

|

||||

packages = [

|

||||

"fs",

|

||||

"syscallx",

|

||||

"sysx",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "f2a389ac0a02ce21c09edd7344677a601970f41c"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:1b9a7426259b5333d575785e21e1bd0decf18208f5bfb6424d24a50d5ddf83d0"

|

||||

name = "github.com/containerd/fifo"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "bda0ff6ed73c67bfb5e62bc9c697f146b7fd7f13"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:2301a9a859e3b0946e2ddd6961ba6faf6857e6e68bc9293db758dbe3b17cc35e"

|

||||

name = "github.com/containerd/go-cni"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "c154a49e2c754b83ebfb12ebf1362213b94d23e6"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:6d66a41dbbc6819902f1589d0550bc01c18032c0a598a7cd656731e6df73861b"

|

||||

name = "github.com/containerd/ttrpc"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "92c8520ef9f86600c650dd540266a007bf03670f"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:7b4683388adabc709dbb082c13ba35967f072379c85b4acde997c1ca75af5981"

|

||||

name = "github.com/containerd/typeurl"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "a93fcdb778cd272c6e9b3028b2f42d813e785d40"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:1a07bbfee1d0534e8dda4773948e6dcd3a061ea7ab047ce04619476946226483"

|

||||

name = "github.com/containernetworking/cni"

|

||||

packages = [

|

||||

"libcni",

|

||||

"pkg/invoke",

|

||||

"pkg/types",

|

||||

"pkg/types/020",

|

||||

"pkg/types/current",

|

||||

"pkg/version",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "4cfb7b568922a3c79a23e438dc52fe537fc9687e"

|

||||

version = "v0.7.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:e495f9f1fb2bae55daeb76e099292054fe1f734947274b3cfc403ccda595d55a"

|

||||

name = "github.com/docker/distribution"

|

||||

packages = [

|

||||

"digestset",

|

||||

"reference",

|

||||

"registry/api/errcode",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "0d3efadf0154c2b8a4e7b6621fff9809655cc580"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:0938aba6e09d72d48db029d44dcfa304851f52e2d67cda920436794248e92793"

|

||||

name = "github.com/docker/go-events"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "9461782956ad83b30282bf90e31fa6a70c255ba9"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:fa6faf4a2977dc7643de38ae599a95424d82f8ffc184045510737010a82c4ecd"

|

||||

name = "github.com/gogo/googleapis"

|

||||

packages = ["google/rpc"]

|

||||

pruneopts = "UT"

|

||||

revision = "d31c731455cb061f42baff3bda55bad0118b126b"

|

||||

version = "v1.2.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:4107f4e81e8fd2e80386b4ed56b05e3a1fe26ecc7275fe80bb9c3a80a7344ff4"

|

||||

name = "github.com/gogo/protobuf"

|

||||

packages = [

|

||||

"proto",

|

||||

"sortkeys",

|

||||

"types",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "ba06b47c162d49f2af050fb4c75bcbc86a159d5c"

|

||||

version = "v1.2.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:b7cb6054d3dff43b38ad2e92492f220f57ae6087ee797dca298139776749ace8"

|

||||

name = "github.com/golang/groupcache"

|

||||

packages = ["lru"]

|

||||

pruneopts = "UT"

|

||||

revision = "611e8accdfc92c4187d399e95ce826046d4c8d73"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:f5ce1529abc1204444ec73779f44f94e2fa8fcdb7aca3c355b0c95947e4005c6"

|

||||

name = "github.com/golang/protobuf"

|

||||

packages = [

|

||||

"proto",

|

||||

"ptypes",

|

||||

"ptypes/any",

|

||||

"ptypes/duration",

|

||||

"ptypes/timestamp",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "6c65a5562fc06764971b7c5d05c76c75e84bdbf7"

|

||||

version = "v1.3.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:582b704bebaa06b48c29b0cec224a6058a09c86883aaddabde889cd1a5f73e1b"

|

||||

name = "github.com/google/uuid"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "0cd6bf5da1e1c83f8b45653022c74f71af0538a4"

|

||||

version = "v1.1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cbec35fe4d5a4fba369a656a8cd65e244ea2c743007d8f6c1ccb132acf9d1296"

|

||||

name = "github.com/gorilla/mux"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "00bdffe0f3c77e27d2cf6f5c70232a2d3e4d9c15"

|

||||

version = "v1.7.3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:870d441fe217b8e689d7949fef6e43efbc787e50f200cb1e70dbca9204a1d6be"

|

||||

name = "github.com/inconshreveable/mousetrap"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "76626ae9c91c4f2a10f34cad8ce83ea42c93bb75"

|

||||

version = "v1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:31e761d97c76151dde79e9d28964a812c46efc5baee4085b86f68f0c654450de"

|

||||

name = "github.com/konsorten/go-windows-terminal-sequences"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "f55edac94c9bbba5d6182a4be46d86a2c9b5b50e"

|

||||

version = "v1.0.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:906eb1ca3c8455e447b99a45237b2b9615b665608fd07ad12cce847dd9a1ec43"

|

||||

name = "github.com/morikuni/aec"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "39771216ff4c63d11f5e604076f9c45e8be1067b"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:bc62c2c038cc8ae51b68f6d52570501a763bb71e78736a9f65d60762429864a9"

|

||||

name = "github.com/opencontainers/go-digest"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "c9281466c8b2f606084ac71339773efd177436e7"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:70711188c19c53147099d106169d6a81941ed5c2658651432de564a7d60fd288"

|

||||

name = "github.com/opencontainers/image-spec"

|

||||

packages = [

|

||||

"identity",

|

||||

"specs-go",

|

||||

"specs-go/v1",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "d60099175f88c47cd379c4738d158884749ed235"

|

||||

version = "v1.0.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:18d6ebfbabffccba7318a4e26028b0d41f23ff359df3dc07a53b37a9f3a4a994"

|

||||

name = "github.com/opencontainers/runc"

|

||||

packages = [

|

||||

"libcontainer/system",

|

||||

"libcontainer/user",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "d736ef14f0288d6993a1845745d6756cfc9ddd5a"

|

||||

version = "v1.0.0-rc9"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:7a58202c5cdf3d2c1eb0621fe369315561cea7f036ad10f0f0479ac36bcc95eb"

|

||||

name = "github.com/opencontainers/runtime-spec"

|

||||

packages = ["specs-go"]

|

||||

pruneopts = "UT"

|

||||

revision = "29686dbc5559d93fb1ef402eeda3e35c38d75af4"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cdf3df431e70077f94e14a99305808e3d13e96262b4686154970f448f7248842"

|

||||

name = "github.com/openfaas/faas"

|

||||

packages = ["gateway/requests"]

|

||||

pruneopts = "UT"

|

||||

revision = "80b6976c106370a7081b2f8e9099a6ea9638e1f3"

|

||||

version = "0.18.10"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:6f21508bd38feec0d440ca862f5adcb4c955713f3eb4e075b9af731e6ef258ba"

|

||||

name = "github.com/openfaas/faas-provider"

|

||||

packages = [

|

||||

".",

|

||||

"auth",

|

||||

"httputil",

|

||||

"proxy",

|

||||

"types",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "8f7c35975e1b2bf8286c2f90ee51633eec427491"

|

||||

version = "0.14.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cf31692c14422fa27c83a05292eb5cbe0fb2775972e8f1f8446a71549bd8980b"

|

||||

name = "github.com/pkg/errors"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "ba968bfe8b2f7e042a574c888954fccecfa385b4"

|

||||

version = "v0.8.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:044c51736e2688a3e4f28f72537f8a7b3f9c188fab4477d5334d92dfe2c07ed5"

|

||||

name = "github.com/sethvargo/go-password"

|

||||

packages = ["password"]

|

||||

pruneopts = "UT"

|

||||

revision = "07c3d521e892540e71469bb0312866130714c038"

|

||||

version = "v0.1.3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:fd61cf4ae1953d55df708acb6b91492d538f49c305b364a014049914495db426"

|

||||

name = "github.com/sirupsen/logrus"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "8bdbc7bcc01dcbb8ec23dc8a28e332258d25251f"

|

||||

version = "v1.4.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:e096613fb7cf34743d49af87d197663cfccd61876e2219853005a57baedfa562"

|

||||

name = "github.com/spf13/cobra"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "f2b07da1e2c38d5f12845a4f607e2e1018cbb1f5"

|

||||

version = "v0.0.5"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:524b71991fc7d9246cc7dc2d9e0886ccb97648091c63e30eef619e6862c955dd"

|

||||

name = "github.com/spf13/pflag"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "2e9d26c8c37aae03e3f9d4e90b7116f5accb7cab"

|

||||

version = "v1.0.5"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e14e467ed00ab98665623c5060fa17e3d7079be560ffc33cabafd05d35894f05"

|

||||

name = "github.com/syndtr/gocapability"

|

||||

packages = ["capability"]

|

||||

pruneopts = "UT"

|

||||

revision = "d98352740cb2c55f81556b63d4a1ec64c5a319c2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:2d9d06cb9d46dacfdbb45f8575b39fc0126d083841a29d4fbf8d97708f43107e"

|

||||

name = "github.com/vishvananda/netlink"

|

||||

packages = [

|

||||

".",

|

||||

"nl",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "a2ad57a690f3caf3015351d2d6e1c0b95c349752"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:975cb0c04431bf92e60b636a15897c4a3faba9f7dc04da505646630ac91d29d3"

|

||||

name = "github.com/vishvananda/netns"

|

||||

packages = ["."]

|

||||

pruneopts = "UT"

|

||||

revision = "0a2b9b5464df8343199164a0321edf3313202f7e"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:aed53a5fa03c1270457e331cf8b7e210e3088a2278fec552c5c5d29c1664e161"

|

||||

name = "go.opencensus.io"

|

||||

packages = [

|

||||

".",

|

||||

"internal",

|

||||

"trace",

|

||||

"trace/internal",

|

||||

"trace/tracestate",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "aad2c527c5defcf89b5afab7f37274304195a6b2"

|

||||

version = "v0.22.2"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:676f320d34ccfa88bfa6d04bdf388ed7062af175355c805ef57ccda1a3f13432"

|

||||

name = "golang.org/x/net"

|

||||

packages = [

|

||||

"context",

|

||||

"context/ctxhttp",

|

||||

"http/httpguts",

|

||||

"http2",

|

||||

"http2/hpack",

|

||||

"idna",

|

||||

"internal/timeseries",

|

||||

"trace",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "c0dbc17a35534bf2e581d7a942408dc936316da4"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:d6b0cfc5ae30841c4b116ac589629f56f8add0955a39f11d8c0d06ca67f5b3d5"

|

||||

name = "golang.org/x/sync"

|

||||

packages = [

|

||||

"errgroup",

|

||||

"semaphore",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "42b317875d0fa942474b76e1b46a6060d720ae6e"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:a76bac71eb452a046b47f82336ba792d8de988688a912f3fd0e8ec8e57fe1bb4"

|

||||

name = "golang.org/x/sys"

|

||||

packages = [

|

||||

"unix",

|

||||

"windows",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "af0d71d358abe0ba3594483a5d519f429dbae3e9"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:8d8faad6b12a3a4c819a3f9618cb6ee1fa1cfc33253abeeea8b55336721e3405"

|

||||

name = "golang.org/x/text"

|

||||

packages = [

|

||||

"collate",

|

||||

"collate/build",

|

||||

"internal/colltab",

|

||||

"internal/gen",

|

||||

"internal/language",

|

||||

"internal/language/compact",

|

||||

"internal/tag",

|

||||

"internal/triegen",

|

||||

"internal/ucd",

|

||||

"language",

|

||||

"secure/bidirule",

|

||||

"transform",

|

||||

"unicode/bidi",

|

||||

"unicode/cldr",

|

||||

"unicode/norm",

|

||||

"unicode/rangetable",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "342b2e1fbaa52c93f31447ad2c6abc048c63e475"

|

||||

version = "v0.3.2"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:583a0c80f5e3a9343d33aea4aead1e1afcc0043db66fdf961ddd1fe8cd3a4faf"

|

||||

name = "google.golang.org/genproto"

|

||||

packages = ["googleapis/rpc/status"]

|

||||

pruneopts = "UT"

|

||||

revision = "b31c10ee225f87dbb9f5f878ead9d64f34f5cbbb"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:48eafc052e46b4ebbc7882553873cf6198203e528627cefc94dcaf8553addd19"

|

||||

name = "google.golang.org/grpc"

|

||||

packages = [

|

||||

".",

|

||||

"balancer",

|

||||

"balancer/base",

|

||||

"balancer/roundrobin",

|

||||

"binarylog/grpc_binarylog_v1",

|

||||

"codes",

|

||||

"connectivity",

|

||||

"credentials",

|

||||

"credentials/internal",

|

||||

"encoding",

|

||||

"encoding/proto",

|

||||

"grpclog",

|

||||

"health/grpc_health_v1",

|

||||

"internal",

|

||||

"internal/backoff",

|

||||

"internal/balancerload",

|

||||

"internal/binarylog",

|

||||

"internal/channelz",

|

||||

"internal/envconfig",

|

||||

"internal/grpcrand",

|

||||

"internal/grpcsync",

|

||||

"internal/syscall",

|

||||

"internal/transport",

|

||||

"keepalive",

|

||||

"metadata",

|

||||

"naming",

|

||||

"peer",

|

||||

"resolver",

|

||||

"resolver/dns",

|

||||

"resolver/passthrough",

|

||||

"serviceconfig",

|

||||

"stats",

|

||||

"status",

|

||||

"tap",

|

||||

]

|

||||

pruneopts = "UT"

|

||||

revision = "6eaf6f47437a6b4e2153a190160ef39a92c7eceb"

|

||||

version = "v1.23.0"

|

||||

|

||||

[solve-meta]

|

||||

analyzer-name = "dep"

|

||||

analyzer-version = 1

|

||||

input-imports = [

|

||||

"github.com/alexellis/go-execute/pkg/v1",

|

||||

"github.com/alexellis/k3sup/pkg/env",

|

||||

"github.com/containerd/containerd",

|

||||

"github.com/containerd/containerd/cio",

|

||||

"github.com/containerd/containerd/containers",

|

||||

"github.com/containerd/containerd/errdefs",

|

||||

"github.com/containerd/containerd/namespaces",

|

||||

"github.com/containerd/containerd/oci",

|

||||

"github.com/containerd/go-cni",

|

||||

"github.com/google/uuid",

|

||||

"github.com/gorilla/mux",

|

||||

"github.com/morikuni/aec",

|

||||

"github.com/opencontainers/runtime-spec/specs-go",

|

||||

"github.com/openfaas/faas-provider",

|

||||

"github.com/openfaas/faas-provider/proxy",

|

||||

"github.com/openfaas/faas-provider/types",

|

||||

"github.com/openfaas/faas/gateway/requests",

|

||||

"github.com/pkg/errors",

|

||||

"github.com/sethvargo/go-password/password",

|

||||

"github.com/spf13/cobra",

|

||||

"github.com/vishvananda/netlink",

|

||||

"github.com/vishvananda/netns",

|

||||

"golang.org/x/sys/unix",

|

||||

]

|

||||

solver-name = "gps-cdcl"

|

||||

solver-version = 1

|

||||

47

Gopkg.toml

@@ -1,47 +0,0 @@
-[prune]
-go-tests = true
-unused-packages = true
-
-[[constraint]]
-name = "github.com/containerd/containerd"
-version = "1.3.2"
-
-[[constraint]]
-name = "github.com/morikuni/aec"
-version = "1.0.0"
-
-[[constraint]]
-name = "github.com/spf13/cobra"
-version = "0.0.5"
-
-[[constraint]]
-name = "github.com/alexellis/k3sup"
-version = "0.7.1"
-
-[[constraint]]
-name = "github.com/alexellis/go-execute"
-version = "0.3.0"
-
-[[constraint]]
-name = "github.com/gorilla/mux"
-version = "1.7.3"
-
-[[constraint]]
-name = "github.com/openfaas/faas"
-version = "0.18.7"
-
-[[constraint]]
-name = "github.com/sethvargo/go-password"
-version = "0.1.3"
-
-[[constraint]]
-branch = "master"
-name = "github.com/containerd/go-cni"
-
-[[constraint]]
-name = "github.com/openfaas/faas-provider"
-version = "0.14.0"
-
-[[constraint]]
-name = "github.com/google/uuid"
-version = "1.1.1"

4

LICENSE

@@ -1,6 +1,8 @@
 MIT License
 
-Copyright (c) 2019 Alex Ellis
+Copyright (c) 2020 Alex Ellis
+Copyright (c) 2020 OpenFaaS Ltd
+Copyright (c) 2020 OpenFaas Author(s)
 
 Permission is hereby granted, free of charge, to any person obtaining a copy
 of this software and associated documentation files (the "Software"), to deal

44

Makefile

@ -1,46 +1,59 @@

|

||||

Version := $(shell git describe --tags --dirty)

|

||||

GitCommit := $(shell git rev-parse HEAD)

|

||||

LDFLAGS := "-s -w -X main.Version=$(Version) -X main.GitCommit=$(GitCommit)"

|

||||

CONTAINERD_VER := 1.3.2

|

||||

CNI_VERSION := v0.8.4

|

||||

CONTAINERD_VER := 1.3.4

|

||||

CNI_VERSION := v0.8.6

|

||||

ARCH := amd64

|

||||

|

||||

export GO111MODULE=on

|

||||

|

||||

.PHONY: all

|

||||

all: local

|

||||

all: test dist hashgen

|

||||

|

||||

.PHONY: publish

|

||||

publish: dist hashgen

|

||||

|

||||

local:

|

||||

CGO_ENABLED=0 GOOS=linux go build -o bin/faasd

|

||||

CGO_ENABLED=0 GOOS=linux go build -mod=vendor -o bin/faasd

|

||||

|

||||

.PHONY: test

|

||||

test:

|

||||

CGO_ENABLED=0 GOOS=linux go test -mod=vendor -ldflags $(LDFLAGS) ./...

|

||||

|

||||

.PHONY: dist

|

||||

dist:

|

||||

CGO_ENABLED=0 GOOS=linux go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm GOARM=7 go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-armhf

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-arm64

|

||||

CGO_ENABLED=0 GOOS=linux go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm GOARM=7 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-armhf

|

||||

CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-arm64

|

||||

|

||||

.PHONY: hashgen

|

||||

hashgen:

|

||||

for f in bin/faasd*; do shasum -a 256 $$f > $$f.sha256; done

|

||||

|

||||

.PHONY: prepare-test

|

||||

prepare-test:

|

||||

curl -sLSf https://github.com/containerd/containerd/releases/download/v$(CONTAINERD_VER)/containerd-$(CONTAINERD_VER).linux-amd64.tar.gz > /tmp/containerd.tar.gz && sudo tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1

|

||||

curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.2/containerd.service | sudo tee /etc/systemd/system/containerd.service

|

||||

sudo systemctl daemon-reload && sudo systemctl start containerd

|

||||

sudo curl -fSLs "https://github.com/genuinetools/netns/releases/download/v0.5.3/netns-linux-amd64" --output "/usr/local/bin/netns" && sudo chmod a+x "/usr/local/bin/netns"

|

||||

sudo /sbin/sysctl -w net.ipv4.conf.all.forwarding=1

|

||||

sudo mkdir -p /opt/cni/bin

|

||||

curl -sSL https://github.com/containernetworking/plugins/releases/download/$(CNI_VERSION)/cni-plugins-linux-$(ARCH)-$(CNI_VERSION).tgz | sudo tar -xz -C /opt/cni/bin

|

||||

sudo cp $(GOPATH)/src/github.com/alexellis/faasd/bin/faasd /usr/local/bin/

|

||||

cd $(GOPATH)/src/github.com/alexellis/faasd/ && sudo /usr/local/bin/faasd install

|

||||

sudo cp bin/faasd /usr/local/bin/

|

||||

sudo /usr/local/bin/faasd install

|

||||

sudo systemctl status -l containerd --no-pager

|

||||

sudo journalctl -u faasd-provider --no-pager

|

||||

sudo systemctl status -l faasd-provider --no-pager

|

||||

sudo systemctl status -l faasd --no-pager

|

||||

curl -sSLf https://cli.openfaas.com | sudo sh

|

||||

sleep 120 && sudo journalctl -u faasd --no-pager

|

||||

echo "Sleeping for 2m" && sleep 120 && sudo journalctl -u faasd --no-pager

|

||||

|

||||

.PHONY: test-e2e

|

||||

test-e2e:

|

||||

sudo cat /var/lib/faasd/secrets/basic-auth-password | /usr/local/bin/faas-cli login --password-stdin

|

||||

/usr/local/bin/faas-cli store deploy figlet --env write_timeout=1s --env read_timeout=1s

|

||||

sleep 2

|

||||

/usr/local/bin/faas-cli store deploy figlet --env write_timeout=1s --env read_timeout=1s --label testing=true

|

||||

sleep 5

|

||||

/usr/local/bin/faas-cli list -v

|

||||

/usr/local/bin/faas-cli describe figlet | grep testing

|

||||

uname | /usr/local/bin/faas-cli invoke figlet

|

||||

uname | /usr/local/bin/faas-cli invoke figlet --async

|

||||

sleep 10

|

||||

@@ -48,3 +61,8 @@ test-e2e:

|

||||

/usr/local/bin/faas-cli remove figlet

|

||||

sleep 3

|

||||

/usr/local/bin/faas-cli list

|

||||

sleep 3

|

||||

journalctl -t openfaas-fn:figlet --no-pager

|

||||

|

||||

# Removed due to timing issue in CI on GitHub Actions

|

||||

# /usr/local/bin/faas-cli logs figlet --since 15m --follow=false | grep Forking

|

||||

|

||||

249

README.md

@@ -1,181 +1,170 @@

|

||||

# faasd - serverless with containerd

|

||||

# faasd - a lightweight & portable faas engine

|

||||

|

||||

[](https://travis-ci.com/alexellis/faasd)

|

||||

[](https://github.com/openfaas/faasd/actions)

|

||||

[](https://opensource.org/licenses/MIT)

|

||||

[](https://www.openfaas.com)

|

||||

|

||||

|

||||

faasd is a Golang supervisor that bundles OpenFaaS for use with containerd instead of a container orchestrator like Kubernetes or Docker Swarm.

|

||||

faasd is [OpenFaaS](https://github.com/openfaas/) reimagined, but without the cost and complexity of Kubernetes. It runs on a single host with very modest requirements, making it fast and easy to manage. Under the hood it uses [containerd](https://containerd.io/) and [Container Networking Interface (CNI)](https://github.com/containernetworking/cni) along with the same core OpenFaaS components from the main project.

|

||||

|

||||

## About faasd:

|

||||

|

||||

|

||||

* faasd is a single Golang binary

|

||||

* faasd is multi-arch, so works on `x86_64`, armhf and arm64

|

||||

* faasd downloads, starts and supervises the core components to run OpenFaaS

|

||||

## Use-cases and tutorials

|

||||

|

||||

## What does faasd deploy?

|

||||

faasd is just another way to run OpenFaaS, so many things you read in the docs or in blog posts will work the same way.

|

||||

|

||||

* faasd - itself, and its [faas-provider](https://github.com/openfaas/faas-provider)

|

||||

* [Prometheus](https://github.com/prometheus/prometheus)

|

||||

* [the OpenFaaS gateway](https://github.com/openfaas/faas/tree/master/gateway)

|

||||

|

||||

You can use the standard [faas-cli](https://github.com/openfaas/faas-cli) with faasd along with pre-packaged functions in the Function Store, or build your own with the template store.

|

||||

Videos and overviews:

|

||||

|

||||

### faasd supports:

|

||||

* [Exploring of serverless use-cases from commercial and personal users (YouTube)](https://www.youtube.com/watch?v=mzuXVuccaqI)

|

||||

* [Meet faasd. Look Ma’ No Kubernetes! (YouTube)](https://www.youtube.com/watch?v=ZnZJXI377ak)

|

||||

|

||||

* `faas list`

|

||||

* `faas describe`

|

||||

* `faas deploy --update=true --replace=false`

|

||||

* `faas invoke`

|

||||

* `faas rm`

|

||||

* `faas login`

|

||||

* `faas store list/deploy/inspect`

|

||||

* `faas up`

|

||||

* `faas version`

|

||||

* `faas invoke --async`

|

||||

* `faas namespace`

|

||||

Use-cases and tutorials:

|

||||

|

||||

Scale from and to zero is also supported. On a Dell XPS with a small, pre-pulled image unpausing an existing task took 0.19s and starting a task for a killed function took 0.39s. There may be further optimizations to be gained.

|

||||

* [Serverless Node.js that you can run anywhere](https://www.openfaas.com/blog/serverless-nodejs/)

|

||||

* [Simple Serverless with Golang Functions and Microservices](https://www.openfaas.com/blog/golang-serverless/)

|

||||

* [Build a Flask microservice with OpenFaaS](https://www.openfaas.com/blog/openfaas-flask/)

|

||||

* [Get started with Java 11 and Vert.x on Kubernetes with OpenFaaS](https://www.openfaas.com/blog/get-started-with-java-openjdk11/)

|

||||

* [Deploy to faasd via GitHub Actions](https://www.openfaas.com/blog/openfaas-functions-with-github-actions/)

|

||||

* [Scrape and automate websites with Puppeteer](https://www.openfaas.com/blog/puppeteer-scraping/)

|

||||

|

||||

Other operations are pending development in the provider such as:

|

||||

Additional resources:

|

||||

|

||||

* `faas logs`

|

||||

* `faas secret`

|

||||

* `faas auth`

|

||||

* The official handbook - [Serverless For Everyone Else](https://gumroad.com/l/serverless-for-everyone-else)

|

||||

* For reference: [OpenFaaS docs](https://docs.openfaas.com)

|

||||

* For use-cases and tutorials: [OpenFaaS blog](https://openfaas.com/blog/)

|

||||

* For self-paced learning: [OpenFaaS workshop](https://github.com/openfaas/workshop/)

|

||||

|

||||

### Pre-reqs

|

||||

### About faasd

|

||||

|

||||

* Linux

|

||||

* faasd is a static Golang binary

|

||||

* uses the same core components and ecosystem of OpenFaaS

|

||||

* uses containerd for its runtime and CNI for networking

|

||||

* is multi-arch, so works on Intel `x86_64` and ARM out of the box

|

||||

* can run almost any other stateful container through its `docker-compose.yaml` file

|

||||

|

||||

PC / Cloud - any Linux that containerd works on should be fair game, but faasd is tested with Ubuntu 18.04

|

||||

Most importantly, it's easy to manage so you can set it up and leave it alone to run your functions.

|

||||

|

||||

For Raspberry Pi, Raspbian Stretch or newer also works fine.

|

||||

|

||||

|

||||

For MacOS users try [multipass.run](https://multipass.run) or [Vagrant](https://www.vagrantup.com/)

|

||||

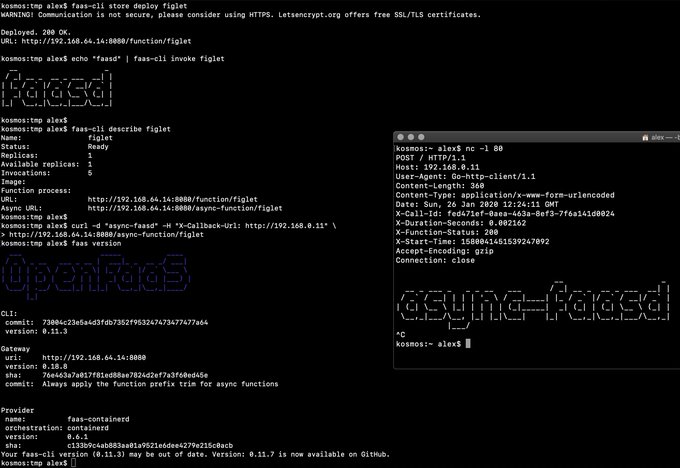

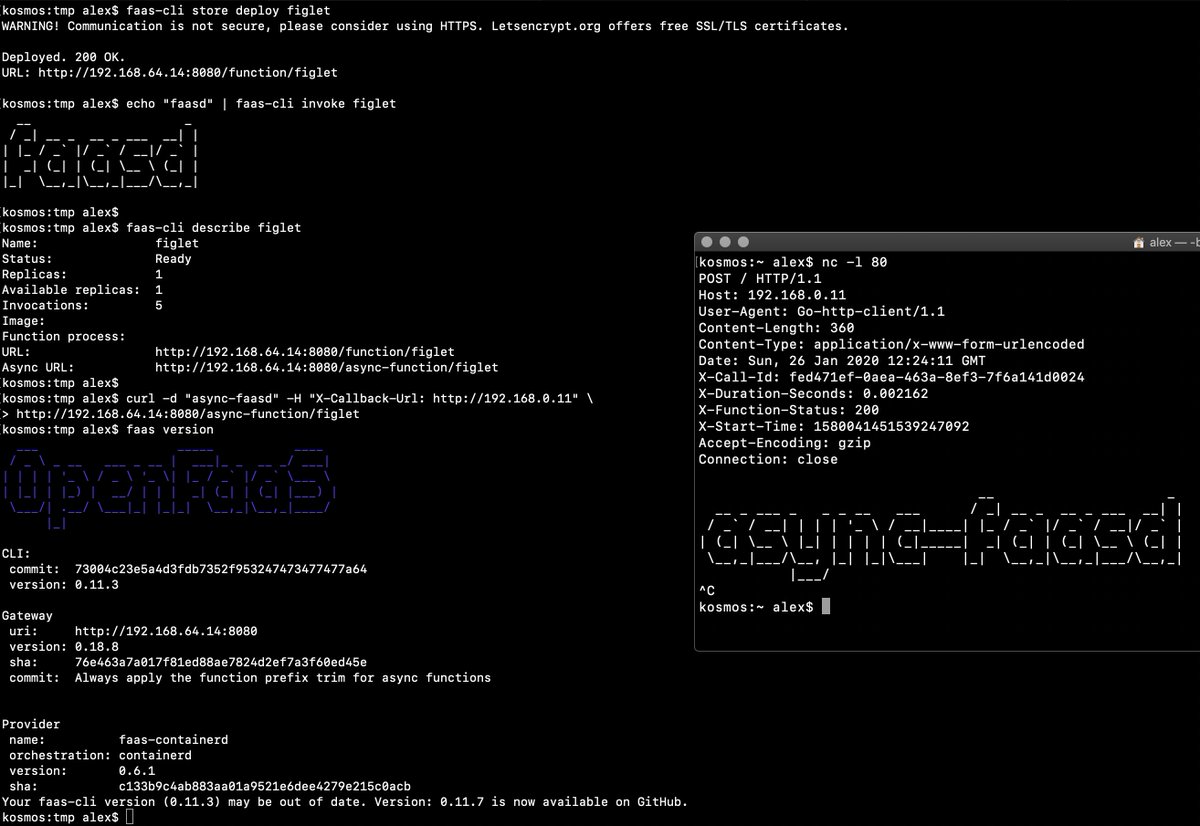

> Demo of faasd running asynchronous functions

|

||||

|

||||

For Windows users, install [Git Bash](https://git-scm.com/downloads) along with multipass or vagrant. You can also use WSL1 or WSL2 which provides a Linux environment.

|

||||

Watch the video: [faasd walk-through with cloud-init and Multipass](https://www.youtube.com/watch?v=WX1tZoSXy8E)

|

||||

|

||||

You will also need [containerd v1.3.2](https://github.com/containerd/containerd) and the [CNI plugins v0.8.4](https://github.com/containernetworking/plugins)

|

||||

### What does faasd deploy?

|

||||

|

||||

[faas-cli](https://github.com/openfaas/faas-cli) is optional, but recommended.

|

||||

* faasd - itself, and its [faas-provider](https://github.com/openfaas/faas-provider) for containerd - CRUD for functions and services, implements the OpenFaaS REST API

|

||||

* [Prometheus](https://github.com/prometheus/prometheus) - for monitoring of services, metrics, scaling and dashboards

|

||||

* [OpenFaaS Gateway](https://github.com/openfaas/faas/tree/master/gateway) - the UI portal, CLI, and other OpenFaaS tooling can talk to this.

|

||||

* [OpenFaaS queue-worker for NATS](https://github.com/openfaas/nats-queue-worker) - run your invocations in the background without adding any code. See also: [asynchronous invocations](https://docs.openfaas.com/reference/triggers/#async-nats-streaming)

|

||||

* [NATS](https://nats.io) for asynchronous processing and queues

|

||||

|

||||

## Backlog

|

||||

faasd relies on industry-standard tools for running containers:

|

||||

|

||||

Pending:

|

||||

* [CNI](https://github.com/containernetworking/plugins)

|

||||

* [containerd](https://github.com/containerd/containerd)

|

||||

* [runc](https://github.com/opencontainers/runc)

|

||||

|

||||

* [ ] Monitor and restart any of the core components at runtime if the container stops

|

||||

* [ ] Bundle/package/automate installation of containerd - [see bootstrap from k3s](https://github.com/rancher/k3s)

|

||||

* [ ] Provide ufw rules / example for blocking access to everything but a reverse proxy to the gateway container

|

||||

* [ ] Provide [simple Caddyfile example](https://blog.alexellis.io/https-inlets-local-endpoints/) in the README showing how to expose the faasd proxy on port 80/443 with TLS

|

||||

You can use the standard [faas-cli](https://github.com/openfaas/faas-cli) along with pre-packaged functions from *the Function Store*, or build your own using any OpenFaaS template.

|

||||

|

||||

Done:

|

||||

### When should you use faasd over OpenFaaS on Kubernetes?

|

||||

|

||||

* [x] Inject / manage IPs between core components for service to service communication - i.e. so Prometheus can scrape the OpenFaaS gateway - done via `/etc/hosts` mount

|

||||

* [x] Add queue-worker and NATS

|

||||

* [x] Create faasd.service and faasd-provider.service

|

||||

* [x] Self-install / create systemd service via `faasd install`

|

||||

* [x] Restart containers upon restart of faasd

|

||||

* [x] Clear / remove containers and tasks with SIGTERM / SIGINT

|

||||

* [x] Determine armhf/arm64 containers to run for gateway

|

||||

* [x] Configure `basic_auth` to protect the OpenFaaS gateway and faasd-provider HTTP API

|

||||

* [x] Setup custom working directory for faasd `/var/lib/faasd/`

|

||||

* [x] Use CNI to create network namespaces and adapters

|

||||

* To deploy microservices and functions that you can update and monitor remotely

|

||||

* When you don't have the bandwidth to learn or manage Kubernetes

|

||||

* To deploy embedded apps in IoT and edge use-cases

|

||||

* To distribute applications to a customer or client

|

||||

* You have a cost sensitive project - run faasd on a 1GB VM for 5-10 USD / mo or on your Raspberry Pi

|

||||

* When you just need a few functions or microservices, without the cost of a cluster

|

||||

|

||||

## Tutorial: Get started on armhf / Raspberry Pi

|

||||

faasd does not create the same maintenance burden you'll find with maintaining, upgrading, and securing a Kubernetes cluster. You can deploy it and walk away; in the worst case, just deploy a new VM and deploy your functions again.

|

||||

|

||||

You can run this tutorial on your Raspberry Pi, or adapt the steps for a regular Linux VM/VPS host.

|

||||

## Learning faasd

|

||||

|

||||

* [faasd - lightweight Serverless for your Raspberry Pi](https://blog.alexellis.io/faasd-for-lightweight-serverless/)

|

||||

The faasd project is MIT licensed and open source, and you will find some documentation, blog posts and videos for free.

|

||||

|

||||

## Hacking (build from source)

|

||||

However, "Serverless For Everyone Else" is the official handbook and was written to contribute funds towards the upkeep and maintenance of the project.

|

||||

|

||||

Install the CNI plugins:

|

||||

### The official handbook and docs for faasd

|

||||

|

||||

* For PC run `export ARCH=amd64`

|

||||

* For RPi/armhf run `export ARCH=arm`

|

||||

* For arm64 run `export ARCH=arm64`

|

||||

<a href="https://gumroad.com/l/serverless-for-everyone-else">

|

||||

<img src="https://static-2.gumroad.com/res/gumroad/2028406193591/asset_previews/741f2ad46ff0a08e16aaf48d21810ba7/retina/social4.png" width="40%"></a>

|

||||

|

||||

Then run:

|

||||

You'll learn how to deploy code in any language, lift and shift Dockerfiles, run requests in queues, write background jobs and integrate with databases. faasd packages the same code as OpenFaaS, so you get built-in metrics for your HTTP endpoints, a user-friendly CLI, pre-packaged functions and templates from the store and a UI.

|

||||

|

||||

```sh

|

||||

export CNI_VERSION=v0.8.4

|

||||

sudo mkdir -p /opt/cni/bin

|

||||

curl -sSL https://github.com/containernetworking/plugins/releases/download/${CNI_VERSION}/cni-plugins-linux-${ARCH}-${CNI_VERSION}.tgz | sudo tar -xz -C /opt/cni/bin

|

||||

Topics include:

|

||||

|

||||

* Should you deploy to a VPS or Raspberry Pi?

|

||||

* Deploying your server with bash, cloud-init or terraform

|

||||

* Using a private container registry

|

||||

* Finding functions in the store

|

||||

* Building your first function with Node.js

|

||||

* Using environment variables for configuration

|

||||

* Using secrets from functions, and enabling authentication tokens

|

||||

* Customising templates

|

||||

* Monitoring your functions with Grafana and Prometheus

|

||||

* Scheduling invocations and background jobs

|

||||

* Tuning timeouts, parallelism, running tasks in the background

|

||||

* Adding TLS to faasd and custom domains for functions

|

||||

* Self-hosting on your Raspberry Pi

|

||||

* Adding a database for storage with InfluxDB and Postgresql

|

||||

* Troubleshooting and logs

|

||||

* CI/CD with GitHub Actions and multi-arch

|

||||

* Taking things further, community and case-studies

|

||||

|

||||

View sample pages, reviews and testimonials on Gumroad:

|

||||

|

||||

["Serverless For Everyone Else"](https://gumroad.com/l/serverless-for-everyone-else)

|

||||

|

||||

### Deploy faasd

|

||||

|

||||

The easiest way to deploy faasd is with cloud-init. We give several examples below, and most IaaS platforms will accept "user-data" pasted into their UI, or via their API.

|

||||

|

||||

For trying it out on MacOS or Windows, we recommend using [multipass](https://multipass.run) to run faasd in a VM.

|

||||

|

||||

If you don't use cloud-init, or have already created your Linux server you can use the installation script as per below:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/openfaas/faasd --depth=1

|

||||

cd faasd

|

||||

|

||||

./hack/install.sh

|

||||

```

|

||||

|

||||

Run or install faasd, which brings up the gateway and Prometheus as containers

|

||||

> This approach also works for Raspberry Pi

|

||||

|

||||

```sh

|

||||

cd $GOPATH/src/github.com/alexellis/faasd

|

||||

go build

|

||||

It's recommended that you do not install Docker on the same host as faasd, since 1) they may both use different versions of containerd and 2) Docker's networking rules can disrupt faasd's networking. When using faasd, make your faasd server a faasd server, and build container images on your laptop or in a CI pipeline.

|

||||

|

||||

# Install with systemd

|

||||

# sudo ./faasd install

|

||||

#### Deployment tutorials

|

||||

|

||||

# Or run interactively

|

||||

# sudo ./faasd up

|

||||

```

|

||||

* [Use multipass on Windows, MacOS or Linux](/docs/MULTIPASS.md)

|

||||

* [Deploy to DigitalOcean with Terraform and TLS](https://www.openfaas.com/blog/faasd-tls-terraform/)

|

||||

* [Deploy to any IaaS with cloud-init](https://blog.alexellis.io/deploy-serverless-faasd-with-cloud-init/)

|

||||

* [Deploy faasd to your Raspberry Pi](https://blog.alexellis.io/faasd-for-lightweight-serverless/)

|

||||

|

||||

### Build and run (binaries)

|

||||

Terraform scripts:

|

||||

|

||||

```sh

|

||||

# For x86_64

|

||||

sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.4.4/faasd" \

|

||||

-o "/usr/local/bin/faasd" \

|

||||

&& sudo chmod a+x "/usr/local/bin/faasd"

|

||||

* [Provision faasd on DigitalOcean with Terraform](docs/bootstrap/README.md)

|

||||

* [Provision faasd with TLS on DigitalOcean with Terraform](docs/bootstrap/digitalocean-terraform/README.md)

|

||||

|

||||

# armhf

|

||||

sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.4.4/faasd-armhf" \

|

||||

-o "/usr/local/bin/faasd" \

|

||||

&& sudo chmod a+x "/usr/local/bin/faasd"

|

||||

### Function and template store

|

||||

|

||||

# arm64

|

||||

sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.4.4/faasd-arm64" \

|

||||

-o "/usr/local/bin/faasd" \

|

||||

&& sudo chmod a+x "/usr/local/bin/faasd"

|

||||

```
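As a rough illustration of how the three download commands above map onto CPU architectures, the Go sketch below picks a release binary name from `runtime.GOARCH`. The function `assetName` is hypothetical and not part of faasd; the binary names are the ones used in the commands above, and the sketch assumes that any 32-bit ARM host can run the `armhf` build, which may not hold for older ARMv6 boards.

```go
package main

import (
	"fmt"
	"runtime"
)

// assetName maps a Go architecture name (as reported by runtime.GOARCH)
// to the release binary name used in the download commands above.
// The second return value is false for architectures the README does not list.
// Assumption: every "arm" host is treated as armhf (ARMv7).
func assetName(goarch string) (string, bool) {
	switch goarch {
	case "amd64":
		return "faasd", true
	case "arm":
		return "faasd-armhf", true
	case "arm64":
		return "faasd-arm64", true
	default:
		return "", false
	}
}

func main() {
	name, ok := assetName(runtime.GOARCH)
	if !ok {
		fmt.Printf("no faasd binary listed for %s\n", runtime.GOARCH)
		return
	}
	fmt.Printf("release binary for %s: %s\n", runtime.GOARCH, name)
}
```

An installer script could use the same mapping with `uname -m` instead of `runtime.GOARCH`.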

|

||||

For community functions see `faas-cli store --help`

|

||||

|

||||

### At run-time

|

||||

For templates built by the community see: `faas-cli template store list`, you can also use the `dockerfile` template if you just want to migrate an existing service without the benefits of using a template.

|

||||

|

||||

Look in `hosts` in the current working folder or in `/var/lib/faasd/` to get the IP for the gateway or Prometheus

|

||||

### Community support

|

||||

|

||||

```sh

|

||||

127.0.0.1 localhost

|

||||

10.62.0.1 faasd-provider

|

||||

Commercial users and solo business owners should become OpenFaaS GitHub Sponsors to receive regular email updates on changes, tutorials and new features.

|

||||

|

||||

10.62.0.2 prometheus

|

||||

10.62.0.3 gateway

|

||||

10.62.0.4 nats

|

||||

10.62.0.5 queue-worker

|

||||

```

|

||||

If you are learning faasd, or want to share your use-case, you can join the OpenFaaS Slack community.

|

||||

|

||||

The IP addresses are dynamic and may change on every launch.
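Because the addresses can change between launches, a script may prefer to look them up rather than hard-code them. The following is a minimal Go sketch, assuming the `hosts` file lives at `/var/lib/faasd/hosts` and uses the usual `IP name` layout shown above. The helper `lookupHost` is hypothetical and not part of faasd.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// lookupHost searches hosts-file content for an entry called name and
// returns its IP address. Each non-comment line is expected to look like
// "IP name [alias...]". This helper is illustrative, not part of faasd.
func lookupHost(hosts, name string) (string, bool) {
	for _, line := range strings.Split(hosts, "\n") {
		// Drop trailing comments, then split on any whitespace.
		if i := strings.Index(line, "#"); i >= 0 {
			line = line[:i]
		}
		fields := strings.Fields(line)
		if len(fields) < 2 {
			continue
		}
		for _, n := range fields[1:] {
			if n == name {
				return fields[0], true
			}
		}
	}
	return "", false
}

func main() {
	// Path assumed from the text above; adjust if faasd runs from another folder.
	data, err := os.ReadFile("/var/lib/faasd/hosts")
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot read hosts file:", err)
		os.Exit(1)
	}
	ip, ok := lookupHost(string(data), "gateway")
	if !ok {
		fmt.Fprintln(os.Stderr, "no gateway entry found")
		os.Exit(1)
	}
	fmt.Printf("gateway: http://%s:8080\n", ip)
}
```

Passing `"prometheus"` instead of `"gateway"` would return the Prometheus address in the same way.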

|

||||

* [Become an OpenFaaS GitHub Sponsor](https://github.com/sponsors/openfaas/)

|

||||

* [Join Slack](https://slack.openfaas.io/)

|

||||

|

||||

Since faasd-provider uses containerd heavily it is not running as a container, but as a stand-alone process. Its port is available via the bridge interface, i.e. openfaas0.

|

||||

### Backlog, features and known issues

|

||||

|

||||

* Prometheus will run on the Prometheus IP plus port 9090 i.e. http://[prometheus_ip]:9090/targets

|

||||

For completed features, WIP and upcoming roadmap see:

|

||||

|

||||

* faasd-provider runs on 10.62.0.1:8081, i.e. directly on the host, and accessible via the bridge interface from CNI.

|

||||

|

||||

* Now go to the gateway's IP address as shown above on port 8080, i.e. http://[gateway_ip]:8080 - you can also use this address to deploy OpenFaaS Functions via the `faas-cli`.

|

||||

|

||||

* basic-auth

|

||||

|

||||

You will then need to get the basic-auth password, which is written to `/var/lib/faasd/secrets/basic-auth-password` if you followed the above instructions.

|

||||

The default Basic Auth username is `admin`, which is written to `/var/lib/faasd/secrets/basic-auth-user`. If you wish to use a non-standard user, create this file and add your username (no newlines or other characters).
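To show how the two secret files fit together, here is a minimal Go sketch that reads them and builds an HTTP Basic `Authorization` header value. The helpers `readSecret` and `basicAuthHeader` are hypothetical names, not part of faasd, and the sketch assumes that surrounding whitespace in either file can be trimmed safely.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"os"
	"strings"
)

// secretsDir is the directory named in the text above.
const secretsDir = "/var/lib/faasd/secrets"

// basicAuthHeader returns the value of an HTTP "Authorization" header for
// Basic authentication: "Basic " followed by base64("user:password").
// Assumption: leading and trailing whitespace is not part of either value.
func basicAuthHeader(user, password string) string {
	creds := strings.TrimSpace(user) + ":" + strings.TrimSpace(password)
	return "Basic " + base64.StdEncoding.EncodeToString([]byte(creds))
}

// readSecret reads one file from secretsDir and trims surrounding whitespace.
func readSecret(name string) (string, error) {
	data, err := os.ReadFile(secretsDir + "/" + name)
	if err != nil {
		return "", err
	}
	return strings.TrimSpace(string(data)), nil
}

func main() {
	user, err := readSecret("basic-auth-user")
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot read user:", err)
		os.Exit(1)
	}
	password, err := readSecret("basic-auth-password")
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot read password:", err)
		os.Exit(1)
	}
	header := basicAuthHeader(user, password)
	// Avoid printing the secret itself; report only that the header was built.
	fmt.Printf("Authorization header built for user %q (%d bytes)\n", user, len(header))
}
```

In practice `faas-cli login --password-stdin` does this for you; the sketch is only meant for custom clients that call the gateway directly. Reading the files usually requires root.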

|

||||

|

||||

#### Installation with systemd

|

||||

|

||||

* `faasd install` - install faasd and containerd with systemd, this must be run from `$GOPATH/src/github.com/alexellis/faasd`

|

||||

* `journalctl -u faasd -f` - faasd service logs

|

||||

* `journalctl -u faasd-provider -f` - faasd-provider service logs

|

||||

|

||||

### Appendix

|

||||

|

||||

#### Links

|

||||

|

||||

https://github.com/renatofq/ctrofb/blob/31968e4b4893f3603e9998f21933c4131523bb5d/cmd/network.go

|

||||

|

||||

https://github.com/renatofq/catraia/blob/c4f62c86bddbfadbead38cd2bfe6d920fba26dce/catraia-net/network.go

|

||||

|

||||

https://github.com/containernetworking/plugins

|

||||

|

||||

https://github.com/containerd/go-cni

|

||||

See [ROADMAP.md](docs/ROADMAP.md)

|

||||

|

||||

Are you looking to hack on faasd? Follow the [developer instructions](docs/DEV.md) for a manual installation, or use the `hack/install.sh` script and pick up from there.

|

||||

|

||||

30

cloud-config.txt

Normal file

@@ -0,0 +1,30 @@

|

||||

#cloud-config

|

||||

ssh_authorized_keys:

|

||||

## Note: Replace with your own public key

|

||||

- ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC8Q/aUYUr3P1XKVucnO9mlWxOjJm+K01lHJR90MkHC9zbfTqlp8P7C3J26zKAuzHXOeF+VFxETRr6YedQKW9zp5oP7sN+F2gr/pO7GV3VmOqHMV7uKfyUQfq7H1aVzLfCcI7FwN2Zekv3yB7kj35pbsMa1Za58aF6oHRctZU6UWgXXbRxP+B04DoVU7jTstQ4GMoOCaqYhgPHyjEAS3DW0kkPW6HzsvJHkxvVcVlZ/wNJa1Ie/yGpzOzWIN0Ol0t2QT/RSWOhfzO1A2P0XbPuZ04NmriBonO9zR7T1fMNmmtTuK7WazKjQT3inmYRAqU6pe8wfX8WIWNV7OowUjUsv alex@alexr.local

|

||||

|

||||

package_update: true

|

||||

|

||||

packages:

|

||||

- runc

|

||||

- git

|

||||

|

||||

runcmd:

|

||||

- curl -sLSf https://github.com/containerd/containerd/releases/download/v1.3.5/containerd-1.3.5-linux-amd64.tar.gz > /tmp/containerd.tar.gz && tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1

|

||||

- curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.5/containerd.service | tee /etc/systemd/system/containerd.service

|

||||

- systemctl daemon-reload && systemctl start containerd

|

||||

- systemctl enable containerd

|

||||

- /sbin/sysctl -w net.ipv4.conf.all.forwarding=1

|

||||

- mkdir -p /opt/cni/bin

|

||||

- curl -sSL https://github.com/containernetworking/plugins/releases/download/v0.8.5/cni-plugins-linux-amd64-v0.8.5.tgz | tar -xz -C /opt/cni/bin

|

||||

- mkdir -p /go/src/github.com/openfaas/

|

||||

- cd /go/src/github.com/openfaas/ && git clone --depth 1 --branch 0.10.2 https://github.com/openfaas/faasd

|

||||

- curl -fSLs "https://github.com/openfaas/faasd/releases/download/0.10.2/faasd" --output "/usr/local/bin/faasd" && chmod a+x "/usr/local/bin/faasd"

|

||||

- cd /go/src/github.com/openfaas/faasd/ && /usr/local/bin/faasd install

|

||||

- systemctl status -l containerd --no-pager

|

||||

- journalctl -u faasd-provider --no-pager

|

||||

- systemctl status -l faasd-provider --no-pager

|

||||

- systemctl status -l faasd --no-pager

|

||||

- curl -sSLf https://cli.openfaas.com | sh

|

||||

- sleep 60 && journalctl -u faasd --no-pager

|

||||

- cat /var/lib/faasd/secrets/basic-auth-password | /usr/local/bin/faas-cli login --password-stdin

|

||||

60

cmd/collect.go

Normal file

@@ -0,0 +1,60 @@

|

||||

package cmd

|

||||

|

||||

import (

|

||||

"bufio"

|

||||

"context"

|

||||

"fmt"

|

||||

"io"

|

||||

"sync"

|

||||

|

||||

"github.com/containerd/containerd/runtime/v2/logging"

|

||||

"github.com/coreos/go-systemd/journal"

|

||||

"github.com/spf13/cobra"

|

||||

)

|

||||

|

||||

func CollectCommand() *cobra.Command {

|

||||

return collectCmd

|

||||

}

|

||||

|

||||

var collectCmd = &cobra.Command{

|

||||

Use: "collect",

|

||||

Short: "Collect logs to the journal",

|

||||

RunE: runCollect,

|

||||

}

|

||||

|

||||

func runCollect(_ *cobra.Command, _ []string) error {

|

||||

logging.Run(logStdio)

|

||||

return nil

|

||||

}

|

||||

|

||||

// logStdio copied from

|

||||

// https://github.com/containerd/containerd/pull/3085

|

||||

// https://github.com/stellarproject/orbit

|

||||

func logStdio(ctx context.Context, config *logging.Config, ready func() error) error {

|

||||

// construct any log metadata for the container

|

||||

vars := map[string]string{

|

||||

"SYSLOG_IDENTIFIER": fmt.Sprintf("%s:%s", config.Namespace, config.ID),

|

||||

}

|

||||

var wg sync.WaitGroup

|

||||

wg.Add(2)

|

||||

// forward both stdout and stderr to the journal

|

||||

go copy(&wg, config.Stdout, journal.PriInfo, vars)

|

||||

go copy(&wg, config.Stderr, journal.PriErr, vars)

|

||||

// signal that we are ready and setup for the container to be started

|

||||

if err := ready(); err != nil {

|

||||

return err

|

||||

}

|

||||

wg.Wait()

|

||||

return nil

|

||||

}

|

||||

|

||||

func copy(wg *sync.WaitGroup, r io.Reader, pri journal.Priority, vars map[string]string) {

|

||||

defer wg.Done()

|

||||

s := bufio.NewScanner(r)

|

||||

for s.Scan() {

|

||||

if s.Err() != nil {

|

||||

return

|

||||

}

|

||||

journal.Send(s.Text(), pri, vars)

|

||||

}

|

||||

}

|

||||

@@ -6,7 +6,7 @@ import (

|

||||

"os"

|

||||

"path"

|

||||

|

||||

systemd "github.com/alexellis/faasd/pkg/systemd"

|

||||

systemd "github.com/openfaas/faasd/pkg/systemd"

|

||||

"github.com/pkg/errors"

|

||||

|

||||

"github.com/spf13/cobra"

|

||||

@@ -18,7 +18,10 @@ var installCmd = &cobra.Command{

|

||||

RunE: runInstall,

|

||||

}

|

||||

|

||||

const workingDirectoryPermission = 0644

|

||||

|

||||

const faasdwd = "/var/lib/faasd"

|

||||

|

||||

const faasdProviderWd = "/var/lib/faasd-provider"

|

||||

|

||||

func runInstall(_ *cobra.Command, _ []string) error {

|

||||

@@ -35,6 +38,10 @@ func runInstall(_ *cobra.Command, _ []string) error {

|

||||

return errors.Wrap(basicAuthErr, "cannot create basic-auth-* files")

|

||||

}

|

||||

|

||||

if err := cp("docker-compose.yaml", faasdwd); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

if err := cp("prometheus.yml", faasdwd); err != nil {

|

||||

return err

|

||||

}

|

||||

@@ -86,7 +93,10 @@ func runInstall(_ *cobra.Command, _ []string) error {

|

||||

return err

|

||||

}

|

||||

|

||||