mirror of https://github.com/openfaas/faasd.git

synced 2025-06-18 12:06:36 +00:00

Compare commits

186 Commits

| SHA1 |
|---|
| 4e9b6070c1 |
| 1862c0e1f5 |
| ae909c8df4 |
| 6f76a05bdf |
| 8f022cfb21 |
| ff9225d45e |
| 1da2763a96 |
| 666d6c4871 |
| 2248a8a071 |
| 908bbfda9f |
| b40a7cbe58 |
| a66f65c2b9 |
| ac1cc16f0c |
| 716ef6f51c |
| 92523c496b |
| 5561c5cc67 |
| 6c48911412 |
| 3ce724512b |
| 8d91895c79 |
| 7ca531a8b5 |
| 94210cc7f1 |
| 9e5eb84236 |
| b20e5614c7 |
| 40829bbf88 |
| 87f49b0289 |
| b817479828 |
| faae82aa1c |
| cddc10acbe |
| 1c8e8bb615 |
| 6e537d1fde |
| c314af4f98 |
| 4189cfe52c |
| 9e2f571cf7 |
| 93825e8354 |
| 6752a61a95 |
| 16a8d2ac6c |
| 68ac4dfecb |
| c2480ab30a |
| d978c19e23 |
| 038b92c5b4 |
| f1a1f374d9 |
| 24692466d8 |
| bdfff4e8c5 |
| e3589a4ed1 |
| b865e55c85 |
| 89a728db16 |
| 2237dfd44d |
| 4423a5389a |
| a6a4502c89 |
| 8b86e00128 |
| 3039773fbd |
| 5b92e7793d |
| 88f1aa0433 |
| 2b9efd29a0 |
| db5312158c |
| 26debca616 |
| 50de0f34bb |
| d64edeb648 |
| 42b9cc6b71 |
| 25c553a87c |
| 8bc39f752e |
| cbff6fa8f6 |
| 3e29408518 |
| 04f1807d92 |
| 35e017b526 |
| e54da61283 |
| 84353d0cae |
| e33a60862d |
| 7b67ff22e6 |
| 19abc9f7b9 |
| 480f566819 |
| cece6cf1ef |
| 22882e2643 |
| 667d74aaf7 |
| 9dcdbfb7e3 |
| 3a9b81200e |
| 734425de25 |
| 70e7e0d25a |
| be8574ecd0 |
| a0110b3019 |
| 87c71b090f |
| dc8667d36a |
| 137d199cb5 |
| 560c295eb0 |
| 93325b713e |
| 2307fc71c5 |
| 853830c018 |
| 262770a0b7 |
| 0efb6d492f |
| 27cfe465ca |
| d6c4ebaf96 |
| e9d1423315 |
| 4bca5c36a5 |
| 10e7a2f07c |
| 4775a9a77c |
| e07186ed5b |
| 2454c2a807 |
| 8bd2ba5334 |
| c379b0ebcc |
| 226a20c362 |
| 02c9dcf74d |
| 0b88fc232d |
| fcd1c9ab54 |
| 592f3d3cc0 |
| b06364c3f4 |
| 75fd07797c |
| 65c2cb0732 |
| 44df1cef98 |
| 881f5171ee |
| 970015ac85 |
| 283e8ed2c1 |
| d49011702b |
| eb369fbb16 |
| 040b426a19 |
| 251cb2d08a |
| 5c48ac1a70 |
| 7c166979c9 |
| 36843ad1d4 |
| 3bc041ba04 |
| dd3f9732b4 |
| 6c10d18f59 |
| 969fc566e1 |
| a4710db664 |
| df2de7ee5c |
| 2d8b2b1f73 |
| 6e5bc27d9a |
| 2eb1df9517 |
| c133b9c4ab |
| f09028e451 |
| bacf8ebad5 |
| d551721649 |
| 42e9c91ee9 |
| cda1fe78b1 |
| a3392634a7 |
| 95e278b29a |
| d802ba70c1 |
| cd76ff3ebc |
| ed5110de30 |
| 2f3ba1335c |
| 24e065965f |
| fd2ee55f9f |
| d135999d3b |
| 3068d03279 |
| fd4f53fe15 |
| 4b93ccba3f |
| e6b814fd60 |
| 06890cddb9 |
| 40da0a35c3 |
| 0c0d05b2ea |
| af46f34003 |
| 2f7269fc97 |
| 1f56c39675 |
| 7152b170bb |
| 47955954eb |
| 7f672f006a |
| 20ec76bf74 |
| 04d1688bfb |
| a8f514f7d6 |
| 502d3fdecc |
| 5d098c9cb7 |
| 0935dc6867 |
| e77d05ec94 |
| 7ab69b5317 |
| 098baba7cc |
| 9d688b9ea6 |
| 1458a2f2fb |
| c18a038062 |
| af0555a85b |
| 2ff8646669 |
| d785bebf4c |
| 17845457e2 |
| 300d8b082a |
| 17a5e2c625 |
| f0172e618a |
| 61e2d16c3e |
| ae0753a6d9 |
| 19a769b7da |
| 48237e0b3c |
| 306313ed9a |
| ff0cccf0dc |
| 52baca9d17 |
| f76432f60a |
| 38f26b213f |
| 6c3fe813fd |
| 13d28bd2db |
| f3f6225674 |

2

.gitattributes

vendored

Normal file


@@ -0,0 +1,2 @@

```
vendor/** linguist-generated=true
Gopkg.lock linguist-generated=true
```

1

.github/CODEOWNERS

vendored

Normal file


@@ -0,0 +1 @@

```
@alexellis
```

41

.github/ISSUE_TEMPLATE.md

vendored

Normal file


@@ -0,0 +1,41 @@

````markdown
<!--- Provide a general summary of the issue in the Title above -->

## Expected Behaviour
<!--- If you're describing a bug, tell us what should happen -->
<!--- If you're suggesting a change/improvement, tell us how it should work -->

## Current Behaviour
<!--- If describing a bug, tell us what happens instead of the expected behavior -->
<!--- If suggesting a change/improvement, explain the difference from current behavior -->

## Possible Solution
<!--- Not obligatory, but suggest a fix/reason for the bug, -->
<!--- or ideas how to implement the addition or change -->

## Steps to Reproduce (for bugs)
<!--- Provide a link to a live example, or an unambiguous set of steps to -->
<!--- reproduce this bug. Include code to reproduce, if relevant -->
1.
2.
3.
4.

## Context
<!--- How has this issue affected you? What are you trying to accomplish? -->
<!--- Providing context helps us come up with a solution that is most useful in the real world -->

## Your Environment

* OS and architecture:

* Versions:

```sh
go version

containerd -version

uname -a

cat /etc/os-release
```
````

40

.github/PULL_REQUEST_TEMPLATE.md

vendored

Normal file


@@ -0,0 +1,40 @@

```markdown
<!--- Provide a general summary of your changes in the Title above -->

## Description
<!--- Describe your changes in detail -->

## Motivation and Context
<!--- Why is this change required? What problem does it solve? -->
<!--- If it fixes an open issue, please link to the issue here. -->
- [ ] I have raised an issue to propose this change **this is required**


## How Has This Been Tested?
<!--- Please describe in detail how you tested your changes. -->
<!--- Include details of your testing environment, and the tests you ran to -->
<!--- see how your change affects other areas of the code, etc. -->


## Types of changes
<!--- What types of changes does your code introduce? Put an `x` in all the boxes that apply: -->
- [ ] Bug fix (non-breaking change which fixes an issue)
- [ ] New feature (non-breaking change which adds functionality)
- [ ] Breaking change (fix or feature that would cause existing functionality to change)

## Checklist:

Commits:

- [ ] I've read the [CONTRIBUTION](https://github.com/openfaas/faas/blob/master/CONTRIBUTING.md) guide
- [ ] My commit message has a body and describe how this was tested and why it is required.
- [ ] I have signed-off my commits with `git commit -s` for the Developer Certificate of Origin (DCO)

Code:

- [ ] My code follows the code style of this project.
- [ ] I have added tests to cover my changes.

Docs:

- [ ] My change requires a change to the documentation.
- [ ] I have updated the documentation accordingly.
```

36

.github/workflows/build.yaml

vendored

Normal file


@@ -0,0 +1,36 @@

```yaml
name: build

on:
  push:
    branches: [ master ]
  pull_request:
    branches: [ master ]

jobs:
  build:
    env:
      GO111MODULE: off
    strategy:
      matrix:
        go-version: [1.13.x]
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: Install Go
        uses: actions/setup-go@v2
        with:
          go-version: ${{ matrix.go-version }}

      - name: test
        run: make test
      - name: dist
        run: make dist
      - name: prepare-test
        run: make prepare-test
      - name: test e2e
        run: make test-e2e
```

96

.github/workflows/publish.yaml

vendored

Normal file


@@ -0,0 +1,96 @@

```yaml
name: publish

on:
  push:
    tags:
      - '*'

jobs:
  publish:
    env:
      GO111MODULE: off
    strategy:
      matrix:
        go-version: [1.13.x]
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@master
        with:
          fetch-depth: 1
      - name: Install Go
        uses: actions/setup-go@v2
        with:
          go-version: ${{ matrix.go-version }}
      - name: test
        run: make test
      - name: dist
        run: make dist
      - name: hashgen
        run: make hashgen

      - name: Create Release
        id: create_release
        uses: actions/create-release@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          tag_name: ${{ github.ref }}
          release_name: Release ${{ github.ref }}
          draft: false
          prerelease: false

      - name: Upload Release faasd
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd
          asset_name: faasd
          asset_content_type: application/binary
      - name: Upload Release faasd-armhf
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd-armhf
          asset_name: faasd-armhf
          asset_content_type: application/binary
      - name: Upload Release faasd-arm64
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd-arm64
          asset_name: faasd-arm64
          asset_content_type: application/binary
      - name: Upload Release faasd.sha256
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd.sha256
          asset_name: faasd.sha256
          asset_content_type: text/plain
      - name: Upload Release faasd-armhf.sha256
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd-armhf.sha256
          asset_name: faasd-armhf.sha256
          asset_content_type: text/plain
      - name: Upload Release faasd-arm64.sha256
        uses: actions/upload-release-asset@v1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        with:
          upload_url: ${{ steps.create_release.outputs.upload_url }} # This pulls from the CREATE RELEASE step above, referencing it's ID to get its outputs object, which include a `upload_url`. See this blog post for more info: https://jasonet.co/posts/new-features-of-github-actions/#passing-data-to-future-steps
          asset_path: ./bin/faasd-arm64.sha256
          asset_name: faasd-arm64.sha256
          asset_content_type: text/plain
```

7

.gitignore

vendored


@@ -1,3 +1,10 @@

```
/faasd
hosts
/resolv.conf
.idea/

basic-auth-user
basic-auth-password
/bin
/secrets
.vscode
```

20

.travis.yml


@@ -1,20 +0,0 @@

```yaml
sudo: required
language: go
go:
  - '1.12'
script:
  - make dist
deploy:
  provider: releases
  api_key:
    secure: bccOSB+Mbk5ZJHyJfX82Xg/3/7mxiAYHx7P5m5KS1ncDuRpJBFjDV8Nx2PWYg341b5SMlCwsS3IJ9NkoGvRSKK+3YqeNfTeMabVNdKC2oL1i+4pdxGlbl57QXkzT4smqE8AykZEo4Ujk42rEr3e0gSHT2rXkV+Xt0xnoRVXn2tSRUDwsmwANnaBj6KpH2SjJ/lsfTifxrRB65uwcePaSjkqwR6htFraQtpONC9xYDdek6EoVQmoft/ONZJqi7HR+OcA1yhSt93XU6Vaf3678uLlPX9c/DxgIU9UnXRaOd0UUEiTHaMMWDe/bJSrKmgL7qY05WwbGMsXO/RdswwO1+zwrasrwf86SjdGX/P9AwobTW3eTEiBqw2J77UVbvLzDDoyJ5KrkbHRfPX8aIPO4OG9eHy/e7C3XVx4qv9bJBXQ3qD9YJtei9jmm8F/MCdPWuVYC0hEvHtuhP/xMm4esNUjFM5JUfDucvAuLL34NBYHBDP2XNuV4DkgQQPakfnlvYBd7OqyXCU6pzyWSasXpD1Rz8mD/x8aTUl2Ya4bnXQ8qAa5cnxfPqN2ADRlTw1qS7hl6LsXzNQ6r1mbuh/uFi67ybElIjBTfuMEeJOyYHkkLUHIBpooKrPyr0luAbf0By2D2N/eQQnM/RpixHNfZG/mvXx8ZCrs+wxgvG1Rm7rM=
  file:
    - ./bin/faasd
    - ./bin/faasd-armhf
    - ./bin/faasd-arm64
  skip_cleanup: true
  on:
    tags: true

env:
  - GO111MODULE=off
```

475

Gopkg.lock

generated


@@ -1,475 +0,0 @@

```toml
# This file is autogenerated, do not edit; changes may be undone by the next 'dep ensure'.


[[projects]]
  digest = "1:d5e752c67b445baa5b6cb6f8aa706775c2aa8e41aca95a0c651520ff2c80361a"
  name = "github.com/Microsoft/go-winio"
  packages = [
    ".",
    "pkg/guid",
  ]
  pruneopts = "UT"
  revision = "6c72808b55902eae4c5943626030429ff20f3b63"
  version = "v0.4.14"

[[projects]]
  digest = "1:b28f788c0be42a6d26f07b282c5ff5f814ab7ad5833810ef0bc5f56fb9bedf11"
  name = "github.com/Microsoft/hcsshim"
  packages = [
    ".",
    "internal/cow",
    "internal/hcs",
    "internal/hcserror",
    "internal/hns",
    "internal/interop",
    "internal/log",
    "internal/logfields",
    "internal/longpath",
    "internal/mergemaps",
    "internal/oc",
    "internal/safefile",
    "internal/schema1",
    "internal/schema2",
    "internal/timeout",
    "internal/vmcompute",
    "internal/wclayer",
  ]
  pruneopts = "UT"
  revision = "9e921883ac929bbe515b39793ece99ce3a9d7706"

[[projects]]
  digest = "1:74860eb071d52337d67e9ffd6893b29affebd026505aa917ec23131576a91a77"
  name = "github.com/alexellis/go-execute"
  packages = ["pkg/v1"]
  pruneopts = "UT"
  revision = "961405ea754427780f2151adff607fa740d377f7"
  version = "0.3.0"

[[projects]]
  digest = "1:6076d857867a70e87dd1994407deb142f27436f1293b13e75cc053192d14eb0c"
  name = "github.com/alexellis/k3sup"
  packages = ["pkg/env"]
  pruneopts = "UT"
  revision = "f9a4adddc732742a9ee7962609408fb0999f2d7b"
  version = "0.7.1"

[[projects]]
  digest = "1:386ca0ac781cc1b630b3ed21725759770174140164b3faf3810e6ed6366a970b"
  name = "github.com/containerd/containerd"
  packages = [
    ".",
    "api/services/containers/v1",
    "api/services/content/v1",
    "api/services/diff/v1",
    "api/services/events/v1",
    "api/services/images/v1",
    "api/services/introspection/v1",
    "api/services/leases/v1",
    "api/services/namespaces/v1",
    "api/services/snapshots/v1",
    "api/services/tasks/v1",
    "api/services/version/v1",
    "api/types",
    "api/types/task",
    "archive",
    "archive/compression",
    "cio",
    "containers",
    "content",
    "content/proxy",
    "defaults",
    "diff",
    "errdefs",
    "events",
    "events/exchange",
    "filters",
    "identifiers",
    "images",
    "images/archive",
    "labels",
    "leases",
    "leases/proxy",
    "log",
    "mount",
    "namespaces",
    "oci",
    "pkg/dialer",
    "platforms",
    "plugin",
    "reference",
    "remotes",
    "remotes/docker",
    "remotes/docker/schema1",
    "rootfs",
    "runtime/linux/runctypes",
    "runtime/v2/runc/options",
    "snapshots",
    "snapshots/proxy",
    "sys",
    "version",
  ]
  pruneopts = "UT"
  revision = "ff48f57fc83a8c44cf4ad5d672424a98ba37ded6"
  version = "v1.3.2"

[[projects]]
  digest = "1:7e9da25c7a952c63e31ed367a88eede43224b0663b58eb452870787d8ddb6c70"
  name = "github.com/containerd/continuity"
  packages = [
    "fs",
    "syscallx",
    "sysx",
  ]
  pruneopts = "UT"
  revision = "f2a389ac0a02ce21c09edd7344677a601970f41c"

[[projects]]
  digest = "1:1b9a7426259b5333d575785e21e1bd0decf18208f5bfb6424d24a50d5ddf83d0"
  name = "github.com/containerd/fifo"
  packages = ["."]
  pruneopts = "UT"
  revision = "bda0ff6ed73c67bfb5e62bc9c697f146b7fd7f13"

[[projects]]
  digest = "1:6d66a41dbbc6819902f1589d0550bc01c18032c0a598a7cd656731e6df73861b"
  name = "github.com/containerd/ttrpc"
  packages = ["."]
  pruneopts = "UT"
  revision = "92c8520ef9f86600c650dd540266a007bf03670f"

[[projects]]
  digest = "1:7b4683388adabc709dbb082c13ba35967f072379c85b4acde997c1ca75af5981"
  name = "github.com/containerd/typeurl"
  packages = ["."]
  pruneopts = "UT"
  revision = "a93fcdb778cd272c6e9b3028b2f42d813e785d40"

[[projects]]
  digest = "1:e495f9f1fb2bae55daeb76e099292054fe1f734947274b3cfc403ccda595d55a"
  name = "github.com/docker/distribution"
  packages = [
    "digestset",
    "reference",
    "registry/api/errcode",
  ]
  pruneopts = "UT"
  revision = "0d3efadf0154c2b8a4e7b6621fff9809655cc580"

[[projects]]
  digest = "1:0938aba6e09d72d48db029d44dcfa304851f52e2d67cda920436794248e92793"
  name = "github.com/docker/go-events"
  packages = ["."]
  pruneopts = "UT"
  revision = "9461782956ad83b30282bf90e31fa6a70c255ba9"

[[projects]]
  digest = "1:fa6faf4a2977dc7643de38ae599a95424d82f8ffc184045510737010a82c4ecd"
  name = "github.com/gogo/googleapis"
  packages = ["google/rpc"]
  pruneopts = "UT"
  revision = "d31c731455cb061f42baff3bda55bad0118b126b"
  version = "v1.2.0"

[[projects]]
  digest = "1:4107f4e81e8fd2e80386b4ed56b05e3a1fe26ecc7275fe80bb9c3a80a7344ff4"
  name = "github.com/gogo/protobuf"
  packages = [
    "proto",
    "sortkeys",
    "types",
  ]
  pruneopts = "UT"
  revision = "ba06b47c162d49f2af050fb4c75bcbc86a159d5c"
  version = "v1.2.1"

[[projects]]
  branch = "master"
  digest = "1:b7cb6054d3dff43b38ad2e92492f220f57ae6087ee797dca298139776749ace8"
  name = "github.com/golang/groupcache"
  packages = ["lru"]
  pruneopts = "UT"
  revision = "611e8accdfc92c4187d399e95ce826046d4c8d73"

[[projects]]
  digest = "1:f5ce1529abc1204444ec73779f44f94e2fa8fcdb7aca3c355b0c95947e4005c6"
  name = "github.com/golang/protobuf"
  packages = [
    "proto",
    "ptypes",
    "ptypes/any",
    "ptypes/duration",
    "ptypes/timestamp",
  ]
  pruneopts = "UT"
  revision = "6c65a5562fc06764971b7c5d05c76c75e84bdbf7"
  version = "v1.3.2"

[[projects]]
  digest = "1:870d441fe217b8e689d7949fef6e43efbc787e50f200cb1e70dbca9204a1d6be"
  name = "github.com/inconshreveable/mousetrap"
  packages = ["."]
  pruneopts = "UT"
  revision = "76626ae9c91c4f2a10f34cad8ce83ea42c93bb75"
  version = "v1.0"

[[projects]]
  digest = "1:31e761d97c76151dde79e9d28964a812c46efc5baee4085b86f68f0c654450de"
  name = "github.com/konsorten/go-windows-terminal-sequences"
  packages = ["."]
  pruneopts = "UT"
  revision = "f55edac94c9bbba5d6182a4be46d86a2c9b5b50e"
  version = "v1.0.2"

[[projects]]
  digest = "1:906eb1ca3c8455e447b99a45237b2b9615b665608fd07ad12cce847dd9a1ec43"
  name = "github.com/morikuni/aec"
  packages = ["."]
  pruneopts = "UT"
  revision = "39771216ff4c63d11f5e604076f9c45e8be1067b"
  version = "v1.0.0"

[[projects]]
  digest = "1:bc62c2c038cc8ae51b68f6d52570501a763bb71e78736a9f65d60762429864a9"
  name = "github.com/opencontainers/go-digest"
  packages = ["."]
  pruneopts = "UT"
  revision = "c9281466c8b2f606084ac71339773efd177436e7"

[[projects]]
  digest = "1:70711188c19c53147099d106169d6a81941ed5c2658651432de564a7d60fd288"
  name = "github.com/opencontainers/image-spec"
  packages = [
    "identity",
    "specs-go",
    "specs-go/v1",
  ]
  pruneopts = "UT"
  revision = "d60099175f88c47cd379c4738d158884749ed235"
  version = "v1.0.1"

[[projects]]
  digest = "1:18d6ebfbabffccba7318a4e26028b0d41f23ff359df3dc07a53b37a9f3a4a994"
  name = "github.com/opencontainers/runc"
  packages = [
    "libcontainer/system",
    "libcontainer/user",
  ]
  pruneopts = "UT"
  revision = "d736ef14f0288d6993a1845745d6756cfc9ddd5a"
  version = "v1.0.0-rc9"

[[projects]]
  digest = "1:7a58202c5cdf3d2c1eb0621fe369315561cea7f036ad10f0f0479ac36bcc95eb"
  name = "github.com/opencontainers/runtime-spec"
  packages = ["specs-go"]
  pruneopts = "UT"
  revision = "29686dbc5559d93fb1ef402eeda3e35c38d75af4"

[[projects]]
  digest = "1:cf31692c14422fa27c83a05292eb5cbe0fb2775972e8f1f8446a71549bd8980b"
  name = "github.com/pkg/errors"
  packages = ["."]
  pruneopts = "UT"
  revision = "ba968bfe8b2f7e042a574c888954fccecfa385b4"
  version = "v0.8.1"

[[projects]]
  digest = "1:fd61cf4ae1953d55df708acb6b91492d538f49c305b364a014049914495db426"
  name = "github.com/sirupsen/logrus"
  packages = ["."]
  pruneopts = "UT"
  revision = "8bdbc7bcc01dcbb8ec23dc8a28e332258d25251f"
  version = "v1.4.1"

[[projects]]
  digest = "1:e096613fb7cf34743d49af87d197663cfccd61876e2219853005a57baedfa562"
  name = "github.com/spf13/cobra"
  packages = ["."]
  pruneopts = "UT"
  revision = "f2b07da1e2c38d5f12845a4f607e2e1018cbb1f5"
  version = "v0.0.5"

[[projects]]
  digest = "1:524b71991fc7d9246cc7dc2d9e0886ccb97648091c63e30eef619e6862c955dd"
  name = "github.com/spf13/pflag"
  packages = ["."]
  pruneopts = "UT"
  revision = "2e9d26c8c37aae03e3f9d4e90b7116f5accb7cab"
  version = "v1.0.5"

[[projects]]
  branch = "master"
  digest = "1:e14e467ed00ab98665623c5060fa17e3d7079be560ffc33cabafd05d35894f05"
  name = "github.com/syndtr/gocapability"
  packages = ["capability"]
  pruneopts = "UT"
  revision = "d98352740cb2c55f81556b63d4a1ec64c5a319c2"

[[projects]]
  digest = "1:2d9d06cb9d46dacfdbb45f8575b39fc0126d083841a29d4fbf8d97708f43107e"
  name = "github.com/vishvananda/netlink"
  packages = [
    ".",
    "nl",
  ]
  pruneopts = "UT"
  revision = "a2ad57a690f3caf3015351d2d6e1c0b95c349752"
  version = "v1.0.0"

[[projects]]
  branch = "master"
  digest = "1:975cb0c04431bf92e60b636a15897c4a3faba9f7dc04da505646630ac91d29d3"
  name = "github.com/vishvananda/netns"
  packages = ["."]
  pruneopts = "UT"
  revision = "0a2b9b5464df8343199164a0321edf3313202f7e"

[[projects]]
  digest = "1:aed53a5fa03c1270457e331cf8b7e210e3088a2278fec552c5c5d29c1664e161"
  name = "go.opencensus.io"
  packages = [
    ".",
    "internal",
    "trace",
    "trace/internal",
    "trace/tracestate",
  ]
  pruneopts = "UT"
  revision = "aad2c527c5defcf89b5afab7f37274304195a6b2"
  version = "v0.22.2"

[[projects]]
  branch = "master"
  digest = "1:676f320d34ccfa88bfa6d04bdf388ed7062af175355c805ef57ccda1a3f13432"
  name = "golang.org/x/net"
  packages = [
    "context",
    "context/ctxhttp",
    "http/httpguts",
    "http2",
    "http2/hpack",
    "idna",
    "internal/timeseries",
    "trace",
  ]
  pruneopts = "UT"
  revision = "c0dbc17a35534bf2e581d7a942408dc936316da4"

[[projects]]
  digest = "1:d6b0cfc5ae30841c4b116ac589629f56f8add0955a39f11d8c0d06ca67f5b3d5"
  name = "golang.org/x/sync"
  packages = [
    "errgroup",
    "semaphore",
  ]
  pruneopts = "UT"
  revision = "42b317875d0fa942474b76e1b46a6060d720ae6e"

[[projects]]
  branch = "master"
  digest = "1:a76bac71eb452a046b47f82336ba792d8de988688a912f3fd0e8ec8e57fe1bb4"
  name = "golang.org/x/sys"
  packages = [
    "unix",
    "windows",
  ]
  pruneopts = "UT"
  revision = "af0d71d358abe0ba3594483a5d519f429dbae3e9"

[[projects]]
  digest = "1:8d8faad6b12a3a4c819a3f9618cb6ee1fa1cfc33253abeeea8b55336721e3405"
  name = "golang.org/x/text"
  packages = [
    "collate",
    "collate/build",
    "internal/colltab",
    "internal/gen",
    "internal/language",
    "internal/language/compact",
    "internal/tag",
    "internal/triegen",
    "internal/ucd",
    "language",
    "secure/bidirule",
    "transform",
    "unicode/bidi",
    "unicode/cldr",
    "unicode/norm",
    "unicode/rangetable",
  ]
  pruneopts = "UT"
  revision = "342b2e1fbaa52c93f31447ad2c6abc048c63e475"
  version = "v0.3.2"

[[projects]]
  branch = "master"
  digest = "1:583a0c80f5e3a9343d33aea4aead1e1afcc0043db66fdf961ddd1fe8cd3a4faf"
  name = "google.golang.org/genproto"
  packages = ["googleapis/rpc/status"]
  pruneopts = "UT"
  revision = "b31c10ee225f87dbb9f5f878ead9d64f34f5cbbb"

[[projects]]
  digest = "1:48eafc052e46b4ebbc7882553873cf6198203e528627cefc94dcaf8553addd19"
  name = "google.golang.org/grpc"
  packages = [
    ".",
    "balancer",
    "balancer/base",
    "balancer/roundrobin",
    "binarylog/grpc_binarylog_v1",
    "codes",
    "connectivity",
    "credentials",
    "credentials/internal",
    "encoding",
    "encoding/proto",
    "grpclog",
    "health/grpc_health_v1",
    "internal",
    "internal/backoff",
    "internal/balancerload",
    "internal/binarylog",
    "internal/channelz",
    "internal/envconfig",
    "internal/grpcrand",
    "internal/grpcsync",
    "internal/syscall",
    "internal/transport",
    "keepalive",
    "metadata",
    "naming",
    "peer",
    "resolver",
    "resolver/dns",
    "resolver/passthrough",
    "serviceconfig",
    "stats",
    "status",
    "tap",
  ]
  pruneopts = "UT"
  revision = "6eaf6f47437a6b4e2153a190160ef39a92c7eceb"
  version = "v1.23.0"

[solve-meta]
  analyzer-name = "dep"
  analyzer-version = 1
  input-imports = [
    "github.com/alexellis/go-execute/pkg/v1",
    "github.com/alexellis/k3sup/pkg/env",
    "github.com/containerd/containerd",
    "github.com/containerd/containerd/cio",
    "github.com/containerd/containerd/containers",
    "github.com/containerd/containerd/errdefs",
    "github.com/containerd/containerd/namespaces",
    "github.com/containerd/containerd/oci",
    "github.com/morikuni/aec",
    "github.com/opencontainers/runtime-spec/specs-go",
    "github.com/spf13/cobra",
    "github.com/vishvananda/netlink",
    "github.com/vishvananda/netns",
    "golang.org/x/sys/unix",
  ]
  solver-name = "gps-cdcl"
  solver-version = 1
```

23

Gopkg.toml


@@ -1,23 +0,0 @@

```toml
[[constraint]]
  name = "github.com/containerd/containerd"
  version = "1.3.2"

[[constraint]]
  name = "github.com/morikuni/aec"
  version = "1.0.0"

[[constraint]]
  name = "github.com/spf13/cobra"
  version = "0.0.5"

[[constraint]]
  name = "github.com/alexellis/k3sup"
  version = "0.7.1"

[[constraint]]
  name = "github.com/alexellis/go-execute"
  version = "0.3.0"

[prune]
  go-tests = true
  unused-packages = true
```

4

LICENSE

@@ -1,6 +1,8 @@

MIT License

Copyright (c) 2019 Alex Ellis
Copyright (c) 2020 Alex Ellis
Copyright (c) 2020 OpenFaaS Ltd
Copyright (c) 2020 OpenFaas Author(s)

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal

57

Makefile

@@ -1,15 +1,62 @@

Version := $(shell git describe --tags --dirty)
GitCommit := $(shell git rev-parse HEAD)
LDFLAGS := "-s -w -X pkg.Version=$(Version) -X pkg.GitCommit=$(GitCommit)"
LDFLAGS := "-s -w -X main.Version=$(Version) -X main.GitCommit=$(GitCommit)"
CONTAINERD_VER := 1.3.4
CNI_VERSION := v0.8.6
ARCH := amd64

export GO111MODULE=on

.PHONY: all
all: local

local:
	CGO_ENABLED=0 GOOS=linux go build -o bin/faasd
	CGO_ENABLED=0 GOOS=linux go build -mod=vendor -o bin/faasd

.PHONY: test
test:
	CGO_ENABLED=0 GOOS=linux go test -mod=vendor -ldflags $(LDFLAGS) ./...

.PHONY: dist
dist:
	CGO_ENABLED=0 GOOS=linux go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd
	CGO_ENABLED=0 GOOS=linux GOARCH=arm GOARM=7 go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-armhf
	CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-arm64
	CGO_ENABLED=0 GOOS=linux go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd
	CGO_ENABLED=0 GOOS=linux GOARCH=arm GOARM=7 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-armhf
	CGO_ENABLED=0 GOOS=linux GOARCH=arm64 go build -mod=vendor -ldflags $(LDFLAGS) -a -installsuffix cgo -o bin/faasd-arm64

.PHONY: hashgen
hashgen:
	for f in bin/faasd*; do shasum -a 256 $$f > $$f.sha256; done

.PHONY: prepare-test
prepare-test:
	curl -sLSf https://github.com/containerd/containerd/releases/download/v$(CONTAINERD_VER)/containerd-$(CONTAINERD_VER).linux-amd64.tar.gz > /tmp/containerd.tar.gz && sudo tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1
	curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.2/containerd.service | sudo tee /etc/systemd/system/containerd.service
	sudo systemctl daemon-reload && sudo systemctl start containerd
	sudo /sbin/sysctl -w net.ipv4.conf.all.forwarding=1
	sudo mkdir -p /opt/cni/bin
	curl -sSL https://github.com/containernetworking/plugins/releases/download/$(CNI_VERSION)/cni-plugins-linux-$(ARCH)-$(CNI_VERSION).tgz | sudo tar -xz -C /opt/cni/bin
	sudo cp bin/faasd /usr/local/bin/
	sudo /usr/local/bin/faasd install
	sudo systemctl status -l containerd --no-pager
	sudo journalctl -u faasd-provider --no-pager
	sudo systemctl status -l faasd-provider --no-pager
	sudo systemctl status -l faasd --no-pager
	curl -sSLf https://cli.openfaas.com | sudo sh
	echo "Sleeping for 2m" && sleep 120 && sudo journalctl -u faasd --no-pager

.PHONY: test-e2e
test-e2e:
	sudo cat /var/lib/faasd/secrets/basic-auth-password | /usr/local/bin/faas-cli login --password-stdin
	/usr/local/bin/faas-cli store deploy figlet --env write_timeout=1s --env read_timeout=1s --label testing=true
	sleep 5
	/usr/local/bin/faas-cli list -v
	/usr/local/bin/faas-cli describe figlet | grep testing
	uname | /usr/local/bin/faas-cli invoke figlet
	uname | /usr/local/bin/faas-cli invoke figlet --async
	sleep 10
	/usr/local/bin/faas-cli list -v
	/usr/local/bin/faas-cli remove figlet
	sleep 3
	/usr/local/bin/faas-cli list
	sleep 3
	/usr/local/bin/faas-cli logs figlet --follow=false | grep Forking
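The Makefile hunk above replaces `-X pkg.Version=...` with `-X main.Version=...`. The Go linker's `-X importpath.name=value` flag only sets a string variable that exists in the package with that import path, so the new form assumes `Version` and `GitCommit` are package-level string variables in package `main`. A minimal sketch of that assumption follows; the default values and the `versionString` helper are hypothetical, not taken from faasd.

```go
package main

import "fmt"

// Version and GitCommit are overwritten at link time by
//   go build -ldflags "-X main.Version=... -X main.GitCommit=..."
// The defaults are hypothetical placeholders used when no -X flag is given.
var (
	Version   = "dev"
	GitCommit = "unknown"
)

// versionString formats the injected build metadata for display.
func versionString() string {
	return fmt.Sprintf("version: %s commit: %s", Version, GitCommit)
}

func main() {
	fmt.Println(versionString())
}
```

Built with `go build -ldflags "-X main.Version=0.9.5"`, this sketch should print `version: 0.9.5 commit: unknown`; without `-ldflags` it prints the placeholders.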

371

README.md

@@ -1,153 +1,278 @@

|

||||

# faasd - serverless with containerd

|

||||

# faasd - a lightweight & portable faas engine

|

||||

|

||||

[](https://travis-ci.com/alexellis/faasd)

|

||||

[](https://github.com/openfaas/faasd/actions)

|

||||

[](https://opensource.org/licenses/MIT)

|

||||

[](https://www.openfaas.com)

|

||||

|

||||

|

||||

faasd is a Golang supervisor that bundles OpenFaaS for use with containerd instead of a container orchestrator like Kubernetes or Docker Swarm.

|

||||

faasd is [OpenFaaS](https://github.com/openfaas/) reimagined, but without the cost and complexity of Kubernetes. It runs on a single host with very modest requirements, making it fast and easy to manage. Under the hood it uses [containerd](https://containerd.io/) and [Container Networking Interface (CNI)](https://github.com/containernetworking/cni) along with the same core OpenFaaS components from the main project.

|

||||

|

||||

## About faasd:

|

||||

## When should you use faasd over OpenFaaS on Kubernetes?

|

||||

|

||||

* faasd is a single Golang binary

|

||||

* faasd is multi-arch, so works on `x86_64`, armhf and arm64

|

||||

* faasd downloads, starts and supervises the core components to run OpenFaaS

|

||||

* You have a cost sensitive project - run faasd on a 5-10 USD VPS or on your Raspberry Pi

|

||||

* When you just need a few functions or microservices, without the cost of a cluster

|

||||

* When you don't have the bandwidth to learn or manage Kubernetes

|

||||

* To deploy embedded apps in IoT and edge use-cases

|

||||

* To shrink-wrap applications for use with a customer or client

|

||||

|

||||

faasd does not create the same maintenance burden you'll find with maintaining, upgrading, and securing a Kubernetes cluster. You can deploy it and walk away, in the worst case, just deploy a new VM and deploy your functions again.

|

||||

|

||||

## About faasd

|

||||

|

||||

* is a single Golang binary

|

||||

* uses the same core components and ecosystem of OpenFaaS

|

||||

* is multi-arch, so works on Intel `x86_64` and ARM out the box

|

||||

* can be set-up and left alone to run your applications

|

||||

|

||||

|

||||

|

||||

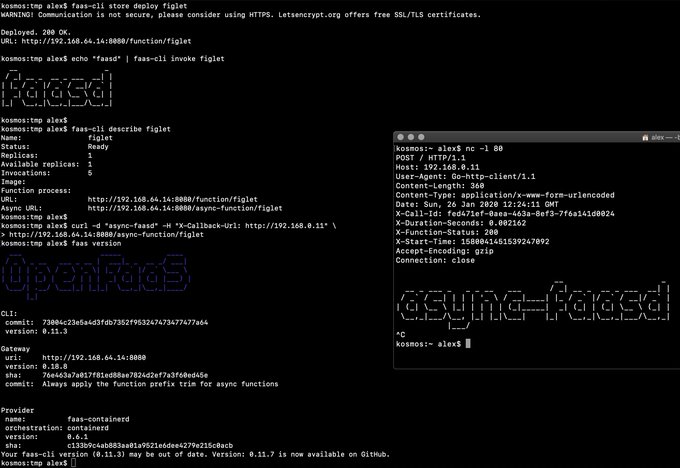

> Demo of faasd running in KVM

## Tutorials

### Get started on DigitalOcean, or any other IaaS

If your IaaS supports `user_data` aka "cloud-init", then this guide is for you. If not, then check out the approach and feel free to run each step manually.

* [Build a Serverless appliance with faasd](https://blog.alexellis.io/deploy-serverless-faasd-with-cloud-init/)

### Run locally on MacOS, Linux, or Windows with multipass

* [Get up and running with your own faasd installation on your Mac/Ubuntu or Windows with cloud-config](/docs/MULTIPASS.md)

### Get started on armhf / Raspberry Pi

You can run this tutorial on your Raspberry Pi, or adapt the steps for a regular Linux VM/VPS host.

* [faasd - lightweight Serverless for your Raspberry Pi](https://blog.alexellis.io/faasd-for-lightweight-serverless/)

### Terraform for DigitalOcean

Automate everything within < 60 seconds and get a public URL and IP address back. Customise as required, or adapt to your preferred cloud such as AWS EC2.

* [Provision faasd 0.9.5 on DigitalOcean with Terraform 0.12.0](docs/bootstrap/README.md)

* [Provision faasd on DigitalOcean with built-in TLS support](docs/bootstrap/digitalocean-terraform/README.md)

## Operational concerns

### A note on private repos / registries

To use private image repos, `~/.docker/config.json` needs to be copied to `/var/lib/faasd/.docker/config.json`.

If you'd like to set up your own private registry, [see this tutorial](https://blog.alexellis.io/get-a-tls-enabled-docker-registry-in-5-minutes/).

Beware that running `docker login` on MacOS and Windows may create a `config.json` file that contains no credentials, because they are stored in the system's credential helper instead.

Alternatively, you can use the `registry-login` command from the OpenFaaS Cloud bootstrap tool (ofc-bootstrap):

```bash
curl -sLSf https://raw.githubusercontent.com/openfaas-incubator/ofc-bootstrap/master/get.sh | sudo sh

ofc-bootstrap registry-login --username <your-registry-username> --password-stdin
# (then enter your password and hit return)
```

The file will be created in `./credentials/`

> Note: for the GitHub Container Registry, use `ghcr.io` and not the previous generation "Docker Package Registry". [See notes on migrating](https://docs.github.com/en/free-pro-team@latest/packages/getting-started-with-github-container-registry/migrating-to-github-container-registry-for-docker-images)

### Logs for functions

You can view the logs of functions using `journalctl`:

```bash
journalctl -t openfaas-fn:FUNCTION_NAME

faas-cli store deploy figlet
journalctl -t openfaas-fn:figlet -f &
echo logs | faas-cli invoke figlet
```

### Logs for the core services

Core services as defined in the docker-compose.yaml file are deployed as containers by faasd.

View the logs for a component by giving its NAME:

```bash
journalctl -t default:NAME

journalctl -t default:gateway

journalctl -t default:queue-worker
```

You can also use `-f` to follow the logs, or `--lines` to tail a number of lines, or `--since` to give a timeframe.

### Exposing core services

The OpenFaaS stack is made up of several core services including NATS and Prometheus. You can expose these through the `docker-compose.yaml` file located at `/var/lib/faasd`.

Expose the gateway to all adapters:

```yaml
gateway:
  ports:
    - "8080:8080"
```

Expose Prometheus only to 127.0.0.1:

```yaml
prometheus:
  ports:
    - "127.0.0.1:9090:9090"
```
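The two `ports` entries differ only in whether a host IP prefix is present: without one, the port is published on all adapters; with `127.0.0.1`, only on loopback. A minimal Go sketch of how these short-syntax strings break down, assuming only the two forms shown above; `parsePort` is a hypothetical helper, not faasd's own parser.

```go
package main

import (
	"fmt"
	"strings"
)

// portMapping is a parsed docker-compose short-syntax port entry.
type portMapping struct {
	HostIP        string // empty means all interfaces
	HostPort      string
	ContainerPort string
}

// parsePort handles "HOST:CONTAINER" and "IP:HOST:CONTAINER". Other compose
// forms (ranges, protocols, IPv6 addresses) are deliberately not supported.
func parsePort(spec string) (portMapping, error) {
	parts := strings.Split(spec, ":")
	switch len(parts) {
	case 2:
		return portMapping{HostPort: parts[0], ContainerPort: parts[1]}, nil
	case 3:
		return portMapping{HostIP: parts[0], HostPort: parts[1], ContainerPort: parts[2]}, nil
	default:
		return portMapping{}, fmt.Errorf("unsupported port spec %q", spec)
	}
}

func main() {
	for _, spec := range []string{"8080:8080", "127.0.0.1:9090:9090"} {
		m, err := parsePort(spec)
		if err != nil {
			fmt.Println(err)
			continue
		}
		ip := m.HostIP
		if ip == "" {
			ip = "0.0.0.0" // all adapters
		}
		fmt.Printf("%s -> listen on %s:%s, forward to container port %s\n", spec, ip, m.HostPort, m.ContainerPort)
	}
}
```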

### Upgrading faasd

To upgrade `faasd` either re-create your VM using Terraform, or simply replace the faasd binary with a newer one.

```bash
systemctl stop faasd-provider
systemctl stop faasd

# Replace /usr/local/bin/faasd with the desired release

# Replace /var/lib/faasd/docker-compose.yaml with the matching version for
# that release.
# Remember to keep any custom patches you make such as exposing additional
# ports, or updating timeout values

systemctl start faasd
systemctl start faasd-provider
```

You could also perform this task over SSH, or use a configuration management tool.

> Note: if you are using Caddy or Let's Encrypt for free SSL certificates, you may hit rate-limits for generating new certificates if you do this too often within a given week.

### Memory limits for functions

Memory limits for functions are supported. When the limit is exceeded the function will be killed.

Example:

```yaml
functions:
  figlet:
    skip_build: true
    image: functions/figlet:latest
    limits:
      memory: 20Mi
```
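`20Mi` uses a binary (mebibyte) suffix, so the limit above works out to 20 × 2^20 = 20,971,520 bytes; the OCI runtime spec that faasd imports expresses memory limits as a byte count. A hypothetical parser illustrating that conversion for `Ki`, `Mi` and `Gi` only; faasd's real parsing may differ, for example by relying on a full resource-quantity library.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// binarySuffixes maps Kubernetes-style binary suffixes to byte multipliers.
var binarySuffixes = []struct {
	suffix string
	factor int64
}{
	{"Gi", 1 << 30},
	{"Mi", 1 << 20},
	{"Ki", 1 << 10},
}

// parseMemory converts values such as "20Mi" into a byte count. Plain integers
// are treated as bytes. Decimal suffixes (M, G) and fractions are not handled.
func parseMemory(v string) (int64, error) {
	for _, s := range binarySuffixes {
		if strings.HasSuffix(v, s.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(v, s.suffix), 10, 64)
			if err != nil {
				return 0, fmt.Errorf("invalid memory value %q: %w", v, err)
			}
			return n * s.factor, nil
		}
	}
	n, err := strconv.ParseInt(v, 10, 64)
	if err != nil {
		return 0, fmt.Errorf("invalid memory value %q: %w", v, err)
	}
	return n, nil
}

func main() {
	b, err := parseMemory("20Mi")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(b) // 20 * 1024 * 1024 = 20971520
}
```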

## What does faasd deploy?

* [faas-containerd](https://github.com/alexellis/faas-containerd/)
* [Prometheus](https://github.com/prometheus/prometheus)
* [the OpenFaaS gateway](https://github.com/openfaas/faas/tree/master/gateway)
* faasd - itself, and its [faas-provider](https://github.com/openfaas/faas-provider) for containerd - CRUD for functions and services, implements the OpenFaaS REST API
* [Prometheus](https://github.com/prometheus/prometheus) - for monitoring of services, metrics, scaling and dashboards
* [OpenFaaS Gateway](https://github.com/openfaas/faas/tree/master/gateway) - the UI portal, CLI, and other OpenFaaS tooling can talk to this.
* [OpenFaaS queue-worker for NATS](https://github.com/openfaas/nats-queue-worker) - run your invocations in the background without adding any code. See also: [asynchronous invocations](https://docs.openfaas.com/reference/triggers/#async-nats-streaming)
* [NATS](https://nats.io) for asynchronous processing and queues

You can use the standard [faas-cli](https://github.com/openfaas/faas-cli) with faasd along with pre-packaged functions in the Function Store, or build your own with the template store.
You'll also need:

### faas-containerd supports:
* [CNI](https://github.com/containernetworking/plugins)
* [containerd](https://github.com/containerd/containerd)
* [runc](https://github.com/opencontainers/runc)

You can use the standard [faas-cli](https://github.com/openfaas/faas-cli) along with pre-packaged functions from *the Function Store*, or build your own using any OpenFaaS template.

### Manual / developer instructions

See [here for manual / developer instructions](docs/DEV.md)

## Getting help

### Docs

The [OpenFaaS docs](https://docs.openfaas.com/) provide a wealth of information and are kept up to date with new features.

### Function and template store

For community functions see `faas-cli store --help`

For templates built by the community see `faas-cli template store list`. You can also use the `dockerfile` template if you just want to migrate an existing service without the benefits of using a template.

### Training and courses

#### LinuxFoundation training course

The founder of faasd and OpenFaaS has written a training course for the LinuxFoundation which also covers how to use OpenFaaS on Kubernetes. Many of the same concepts can be applied to faasd, and the course is free:

* [Introduction to Serverless on Kubernetes](https://www.edx.org/course/introduction-to-serverless-on-kubernetes)

#### Community workshop

[The OpenFaaS workshop](https://github.com/openfaas/workshop/) is a set of 12 self-paced labs and provides a great starting point for learning the features of OpenFaaS. Not all features will be available or usable with faasd.

### Community support

An active community of almost 3000 users awaits you on Slack. Over 250 of those users are also contributors and help maintain the code.

* [Join Slack](https://slack.openfaas.io/)

|

||||

|

||||

## Roadmap

|

||||

|

||||

### Supported operations

|

||||

|

||||

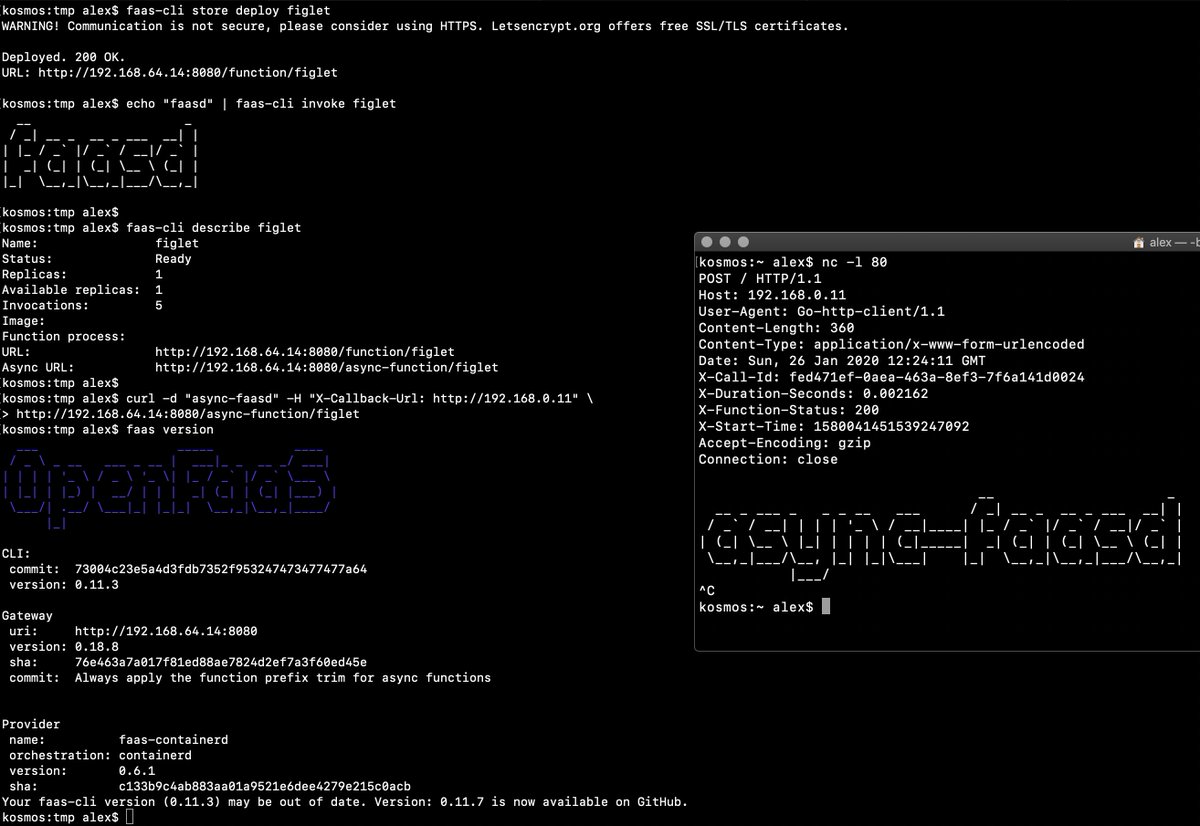

* `faas login`

|

||||

* `faas up`

|

||||

* `faas list`

|

||||

* `faas describe`

|

||||

* `faas describe`

|

||||

* `faas deploy --update=true --replace=false`

|

||||

* `faas invoke`

|

||||

* `faas invoke --async`

|

||||

* `faas invoke`

|

||||

* `faas rm`

|

||||

* `faas store list/deploy/inspect`

|

||||

* `faas version`

|

||||

* `faas namespace`

|

||||

* `faas secret`

|

||||

* `faas logs`

|

||||

|

||||

Other operations are pending development in the provider.

|

||||

Scale from and to zero is also supported. On a Dell XPS with a small, pre-pulled image unpausing an existing task took 0.19s and starting a task for a killed function took 0.39s. There may be further optimizations to be gained.

|

||||

|

||||

### Pre-reqs

|

||||

Other operations are pending development in the provider such as:

|

||||

|

||||

* Linux - ideally Ubuntu, which is used for testing

|

||||

* Installation steps as per [faas-containerd](https://github.com/alexellis/faas-containerd) for building and for development

|

||||

* [netns](https://github.com/genuinetools/netns/releases) binary in `$PATH`

|

||||

* [containerd v1.3.2](https://github.com/containerd/containerd)

|

||||

* [faas-cli](https://github.com/openfaas/faas-cli) (optional)

|

||||

* `faas auth` - supported for Basic Authentication, but OAuth2 & OIDC require a patch

|

||||

|

||||

## Backlog

|

||||

### Backlog

|

||||

|

||||

Pending:

|

||||

|

||||

* [ ] Configure `basic_auth` to protect the OpenFaaS gateway and faas-containerd HTTP API

|

||||

* [ ] Use CNI to create network namespaces and adapters

|

||||

* [ ] [Store and retrieve annotations in function spec](https://github.com/openfaas/faasd/pull/86) - in progress

|

||||

* [ ] Offer live rolling-updates, with zero downtime - requires moving to IDs vs. names for function containers

|

||||

* [ ] An installer for faasd and dependencies - runc, containerd

|

||||

* [ ] Monitor and restart any of the core components at runtime if the container stops

|

||||

* [ ] Bundle/package/automate installation of containerd - [see bootstrap from k3s](https://github.com/rancher/k3s)

|

||||

* [ ] Provide ufw rules / example for blocking access to everything but a reverse proxy to the gateway container

|

||||

* [ ] Multiple replicas per function

|

||||

|

||||

Done:

|

||||

### Known-issues

|

||||

|

||||

### Completed

|

||||

|

||||

* [x] Provide a cloud-init configuration for faasd bootstrap

|

||||

* [x] Configure core services from a docker-compose.yaml file

|

||||

* [x] Store and fetch logs from the journal

|

||||

* [x] Add support for using container images in third-party public registries

|

||||

* [x] Add support for using container images in private third-party registries

|

||||

* [x] Provide a cloud-config.txt file for automated deployments of `faasd`

|

||||

* [x] Inject / manage IPs between core components for service to service communication - i.e. so Prometheus can scrape the OpenFaaS gateway - done via `/etc/hosts` mount

|

||||

* [x] Add queue-worker and NATS

|

||||

* [x] Create faasd.service and faas-containerd.service

|

||||

* [x] Create faasd.service and faasd-provider.service

|

||||

* [x] Self-install / create systemd service via `faasd install`

|

||||

* [x] Restart containers upon restart of faasd

|

||||

* [x] Clear / remove containers and tasks with SIGTERM / SIGINT

|

||||

* [x] Determine armhf/arm64 containers to run for gateway

|

||||

* [x] Configure `basic_auth` to protect the OpenFaaS gateway and faasd-provider HTTP API

|

||||

* [x] Setup custom working directory for faasd `/var/lib/faasd/`

|

||||

* [x] Use CNI to create network namespaces and adapters

|

||||

* [x] Optionally expose core services from the docker-compose.yaml file, locally or to all adapters.

|

||||

* [x] ~~[containerd can't pull image from Github Docker Package Registry](https://github.com/containerd/containerd/issues/3291)~~ ghcr.io support

|

||||

* [x] Provide [simple Caddyfile example](https://blog.alexellis.io/https-inlets-local-endpoints/) in the README showing how to expose the faasd proxy on port 80/443 with TLS

|

||||

* [x] Annotation support

|

||||

* [x] Hard memory limits for functions

|

||||

* [ ] Terraform for DigitalOcean

## Hacking (build from source)

First run faas-containerd

```sh
cd $GOPATH/src/github.com/alexellis/faas-containerd

# You'll need to install containerd and its pre-reqs first
# https://github.com/alexellis/faas-containerd/

sudo ./faas-containerd
```

Then run faasd, which brings up the gateway and Prometheus as containers

```sh
cd $GOPATH/src/github.com/alexellis/faasd
go build

# Install with systemd
# sudo ./faasd install

# Or run interactively
# sudo ./faasd up
```

### Build and run (binaries)

```sh
# For x86_64
sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.2.4/faasd" \
    -o "/usr/local/bin/faasd" \
    && sudo chmod a+x "/usr/local/bin/faasd"

# armhf
sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.2.4/faasd-armhf" \
    -o "/usr/local/bin/faasd" \
    && sudo chmod a+x "/usr/local/bin/faasd"

# arm64
sudo curl -fSLs "https://github.com/alexellis/faasd/releases/download/0.2.4/faasd-arm64" \
    -o "/usr/local/bin/faasd" \
    && sudo chmod a+x "/usr/local/bin/faasd"
```
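The three download commands differ only in the binary name, which matches the outputs of the Makefile's `dist` target (`bin/faasd`, `bin/faasd-armhf`, `bin/faasd-arm64`). A minimal Go sketch that picks the name from `runtime.GOARCH`, assuming a Go `arm` host is ARMv7 (the armhf binary is built with `GOARM=7`); `assetName` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"runtime"
)

// assetName maps a Go architecture name to the faasd release binary name.
func assetName(goarch string) (string, error) {
	switch goarch {
	case "amd64":
		return "faasd", nil
	case "arm":
		return "faasd-armhf", nil // assumes ARMv7, matching GOARM=7 in the Makefile
	case "arm64":
		return "faasd-arm64", nil
	default:
		return "", fmt.Errorf("no faasd release binary for GOARCH=%s", goarch)
	}
}

func main() {
	name, err := assetName(runtime.GOARCH)
	if err != nil {
		fmt.Println(err)
		return
	}
	// 0.2.4 matches the commands above; substitute the release you need.
	fmt.Printf("https://github.com/alexellis/faasd/releases/download/0.2.4/%s\n", name)
}
```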

### At run-time

Look in `hosts` in the current working folder to get the IP for the gateway or Prometheus

```sh
127.0.0.1 localhost
172.19.0.1 faas-containerd
172.19.0.2 prometheus

172.19.0.3 gateway
172.19.0.4 nats
172.19.0.5 queue-worker
```

Since faas-containerd uses containerd heavily, it is not running as a container, but as a stand-alone process. Its port is available via the bridge interface, i.e. netns0.

* Prometheus will run on the Prometheus IP plus port 9090, i.e. http://172.19.0.2:9090/targets

* faas-containerd runs on 172.19.0.1:8081

* Now go to the gateway's IP address as shown above on port 8080, i.e. http://172.19.0.3:8080 - you can also use this address to deploy OpenFaaS Functions via the `faas-cli`.

#### Installation with systemd

* `faasd install` - install faasd and containerd with systemd, run in `$GOPATH/src/github.com/alexellis/faasd`
* `journalctl -u faasd` - faasd systemd logs
* `journalctl -u faas-containerd` - faas-containerd systemd logs

### Appendix

Removing containers:

```sh
echo faas-containerd gateway prometheus | xargs sudo ctr task rm -f

echo faas-containerd gateway prometheus | xargs sudo ctr container rm

echo faas-containerd gateway prometheus | xargs sudo ctr snapshot rm
```

## Links

https://github.com/renatofq/ctrofb/blob/31968e4b4893f3603e9998f21933c4131523bb5d/cmd/network.go

https://github.com/renatofq/catraia/blob/c4f62c86bddbfadbead38cd2bfe6d920fba26dce/catraia-net/network.go

https://github.com/containernetworking/plugins

https://github.com/containerd/go-cni
WIP:

* [ ] Terraform for AWS

29

cloud-config.txt

Normal file

@@ -0,0 +1,29 @@

#cloud-config
ssh_authorized_keys:
## Note: Replace with your own public key
  - ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC8Q/aUYUr3P1XKVucnO9mlWxOjJm+K01lHJR90MkHC9zbfTqlp8P7C3J26zKAuzHXOeF+VFxETRr6YedQKW9zp5oP7sN+F2gr/pO7GV3VmOqHMV7uKfyUQfq7H1aVzLfCcI7FwN2Zekv3yB7kj35pbsMa1Za58aF6oHRctZU6UWgXXbRxP+B04DoVU7jTstQ4GMoOCaqYhgPHyjEAS3DW0kkPW6HzsvJHkxvVcVlZ/wNJa1Ie/yGpzOzWIN0Ol0t2QT/RSWOhfzO1A2P0XbPuZ04NmriBonO9zR7T1fMNmmtTuK7WazKjQT3inmYRAqU6pe8wfX8WIWNV7OowUjUsv alex@alexr.local

package_update: true

packages:
  - runc

runcmd:
  - curl -sLSf https://github.com/containerd/containerd/releases/download/v1.3.5/containerd-1.3.5-linux-amd64.tar.gz > /tmp/containerd.tar.gz && tar -xvf /tmp/containerd.tar.gz -C /usr/local/bin/ --strip-components=1
  - curl -SLfs https://raw.githubusercontent.com/containerd/containerd/v1.3.5/containerd.service | tee /etc/systemd/system/containerd.service
  - systemctl daemon-reload && systemctl start containerd
  - systemctl enable containerd
  - /sbin/sysctl -w net.ipv4.conf.all.forwarding=1
  - mkdir -p /opt/cni/bin
  - curl -sSL https://github.com/containernetworking/plugins/releases/download/v0.8.5/cni-plugins-linux-amd64-v0.8.5.tgz | tar -xz -C /opt/cni/bin
  - mkdir -p /go/src/github.com/openfaas/
  - cd /go/src/github.com/openfaas/ && git clone --depth 1 --branch 0.9.5 https://github.com/openfaas/faasd
  - curl -fSLs "https://github.com/openfaas/faasd/releases/download/0.9.5/faasd" --output "/usr/local/bin/faasd" && chmod a+x "/usr/local/bin/faasd"
  - cd /go/src/github.com/openfaas/faasd/ && /usr/local/bin/faasd install
  - systemctl status -l containerd --no-pager
  - journalctl -u faasd-provider --no-pager
  - systemctl status -l faasd-provider --no-pager
  - systemctl status -l faasd --no-pager
  - curl -sSLf https://cli.openfaas.com | sh
  - sleep 60 && journalctl -u faasd --no-pager